Generative AI for healthcare promises to reduce administrative burden and help clinical teams work more efficiently. But adopting it in healthcare also brings steep challenges. When patient health information is on the line, there’s little room for error.

At Aloa, we work with healthcare leaders who want to move faster without adding risk. Whether you’re interested in reducing documentation time or improving day-to-day operations through technology, we’ll help you create a solution that meets both clinical and compliance requirements.

In this guide, we'll break down how generative AI can be used in healthcare and highlight the risks that come with adopting it.

TL;DR

- Generative AI for healthcare uses large language models to draft notes, summaries, patient messages, and research text, with new applications emerging every day.

- In healthcare settings, GenAI works best on high-volume, language-heavy work like documentation, chart prep, portal replies, claims, and denial letters.

- You need PHI-safe design and steady monitoring to reduce risks such as hallucinations, privacy and PHI leaks, and bias.

- To scale a generative AI system, you need deeper integration with your existing workflows and systems, such as your EHR.

- Aloa can help you prototype fast and build a production-ready system that solves real pain points.

What Is Generative AI for Healthcare?

Generative AI for healthcare uses large language models to turn raw clinical data into useful text your team can review. It drafts reports and visit notes, summarizes long charts, and explains labs in clear language. It also supports imaging reads and assistants that answer questions or route work, while clinicians keep the final say.

In practice, this is software that can read and write natural language. It pulls from things like patient history, meds, labs, and medical imaging scans, then creates notes, summaries, or structured fields. It can also help with research tasks, like reading a stack of papers and drafting a short, clear summary for your team.

That doesn't mean the model is always correct. It can generate, summarize, and extract, but it still needs clinical governance, strong data rules, and human review, especially for diagnosis or anything sent to patients. For a wider operations view, you can explore how AI is already changing healthcare operations in our guide.

Traditional predictive AI scores or forecasts outcomes, like readmission risk or no-show risk. Generative AI creates new text or structured outputs, so you need to test every use case for accuracy, safety, and clear links back to source data.

Today, teams tend to use it most in areas like:

- Documentation drafts and chart summaries

- Patient messages and call center replies

- Coding, claims, and denial support

- Drug discovery and development, clinical trial support, and research summaries

- Imaging notes and medical image analysis support

Generative AI adoption keeps growing because pilots are easy to start and language-heavy work is painful. Scaling takes more effort, which is why many guides now focus on governance, workflow fit, and integration, not only on the models themselves.

Benefits of Adopting Generative AI in Healthcare

Generative AI pays off when it turns into fewer clicks, delays, and “we need three more people” moments. You feel that most in clinical support, admin work, and medical research. Each area still needs solid data, a clean workflow fit, and a human on the hook for final judgment.

Clinical Quality Support

Here, generative AI works like a co-writer and a fast reader for healthcare professionals. It can scan a long medical history and hand you a tight summary with key meds, recent labs, and open problems. It can also turn visit audio into a structured note so clinicians spend more time with patients and less time typing.

Strong use cases tend to be small, clear tasks you can double-check fast:

- Pre-visit chart summaries that flag changes since last visit and open orders

- Draft discharge instructions in plain language that a nurse edits and signs

- Guideline lookups that pull the relevant section so the clinician can confirm the plan

We map more of these workflow patterns in our guide to practical AI applications in healthcare.

Administrative Efficiency and Cost Leverage

Generative AI can draft appeal letters using documented chart facts. It can flag weak documentation before it turns into a denial. It can also clean up call notes into structured tickets and help assemble prior auth packets by pulling the right excerpts and sending them to the right queue.

You’ll see the impact in the same operational metrics your finance and revenue cycle teams already live in. Track how quickly notes get closed after visits, how long agents spend in wrap-up, and whether first-pass denials drop as documentation improves. Over time, those gains can lower the overall cost per claim. For more front- and back-office ideas, we shared real-world AI examples in healthcare operations.

Research and Innovation Acceleration

In research, generative AI can help teams get through text-heavy work faster. It can summarize long papers, draft sections of study protocols, and produce first-pass clinical trial narratives that researchers then refine. In more data-driven settings, it can surface hypotheses from large datasets, such as patterns in compounds or targets, and narrow the list to potential drug candidates that scientists then test in the lab using traditional methods.

Inside hospitals, the same approach can support care teams in day-to-day clinical work. It can shorten the time it takes to review evidence or help clinicians organize key points into a draft plan or pathway. We cover those patterns in our take on how AI in hospitals is changing patient care, with a focus on assistive tools that help people think, rather than replace them.

Best Uses of Generative AI in Healthcare

The strongest use cases sit inside work your teams already do. Notes, messages, tickets, research. Not sci-fi robots that diagnose on their own.

Here's where AI pulls real weight in hospitals, clinics, and other healthcare settings across the industry:

Documentation and Clinician Admin

Hospitals can adopt GenAI-enabled documentation tools that listen to a doctor’s visit or read from the chart. The tool drafts the note, and clinicians review and edit it.

Think of a busy primary care clinic. A doctor finishes a 20-minute visit. The assistant has pulled vitals, meds, and recent labs, then turns the talk into a structured SOAP note and a short summary for the patient. The doctor spends a few minutes editing, then signs.

This usually lives inside your electronic health record (EHR) or dictation workflow. Some health systems report that tools like this cut documentation time by 21% to 30% while still keeping full human review. To keep this safe, teams lean on a few basics:

- Human sign-off on every record

- Clear links back to audio, transcript, and chart fields

- Audit logs so compliance and quality can review samples

When we design workflow automation for clinician documentation at Aloa, we start with one service line, track edit rate and note quality, then decide how wide to roll out.
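"Edit rate" is easy to say and harder to compare across pilots unless you pin down a definition. A minimal sketch, assuming you keep both the AI draft and the signed note for each encounter, measures it as the share of words that changed, using Python's standard `difflib`. The function names and the word-level comparison are illustrative choices, not an industry-standard metric.

```python
from difflib import SequenceMatcher


def edit_rate(draft: str, signed: str) -> float:
    """Share of the AI draft that changed before sign-off (0.0 = signed as-is)."""
    # Compare word tokens so whitespace-only changes don't count as edits.
    matcher = SequenceMatcher(a=draft.split(), b=signed.split(), autojunk=False)
    return 1.0 - matcher.ratio()


def summarize(pairs: list[tuple[str, str]]) -> dict:
    """Aggregate edit rates for a batch of (draft, signed) note pairs."""
    rates = [edit_rate(draft, signed) for draft, signed in pairs]
    return {
        "notes": len(rates),
        "mean_edit_rate": sum(rates) / len(rates) if rates else 0.0,
        "signed_unchanged": sum(1 for r in rates if r == 0.0),
    }


batch = [
    ("Patient reports mild cough for 3 days.", "Patient reports mild cough for 3 days."),
    ("Patient reports mild cough.", "Patient reports mild dry cough."),
]
print(summarize(batch))
```

A low edit rate alone doesn't prove quality (a rushed reviewer also edits little), so pair it with sampled chart review.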

Patient and Staff Communication

Think about a nurse triage inbox with hundreds of portal messages a day. The model reads the thread and the problem list, then drafts a reply that uses your approved education content. The nurse checks tone and safety, adds details that matter, and sends. Over time, you can see response times drop and after-hours work ease up.

In contact centers, an assistant suggests call scripts during live calls. A parent asks about a new inhaler. The agent sees a script that covers refills, warning signs, and when to call the clinic. Anything that sounds like diagnosis or medication change routes to a nurse or doctor.

Guardrails really matter here. Patient-facing text should stay informational and match approved materials. No new diagnosis or drug advice goes out without clinician review. This is where healthcare-focused chatbots and AI agents that plug into your portal or CRM help you handle more volume while your team keeps control.
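The rule "anything that sounds like diagnosis or a medication change routes to a nurse or doctor" can start as a simple screen in front of the agent's script. The sketch below is a hypothetical keyword router: the trigger patterns and queue names are placeholders, and a production system would likely pair a clinically reviewed phrase list with a tuned classifier. It deliberately errs toward escalation.

```python
import re

# Hypothetical trigger patterns; a real list would come from clinical review.
CLINICAL_TRIGGERS = [
    r"\bdiagnos(is|e|ed)\b",
    r"\b(increase|decrease|stop|start|change|double|skip)\b.*\b(dose|dosage|medication|meds?)\b",
    r"\bchest pain\b",
    r"\bshort(ness)? of breath\b",
]


def route(message: str) -> str:
    """Return a hypothetical queue name for an incoming patient message."""
    text = message.lower()
    if any(re.search(pattern, text) for pattern in CLINICAL_TRIGGERS):
        return "clinician_review"   # nurse or doctor handles it
    return "agent_with_script"      # agent answers from the approved script


print(route("Can I get a refill on my son's new inhaler?"))
print(route("Should I double his medication on bad days?"))
```

A false alarm costs a nurse a minute; a miss can cost far more, so broad triggers are a reasonable default.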

Clinical Decision Support and Diagnostics

Clinical decision support and diagnostics carry higher stakes than most other GenAI use cases. The work needs clear limits, human oversight, and rigorous testing before it goes anywhere near patient care.

A common starting point is a one-page chart summary. The model scans notes, meds, labs, and imaging reports. It then surfaces items that deserve a second look, like a creatinine trend that’s drifting up or a lung nodule that never got a follow-up. It can also bring the relevant guideline excerpt into view at the moment it’s needed, instead of forcing the clinician to hunt through a long PDF.

Risk increases when the model begins making diagnostic or treatment recommendations. Output that names a condition, suggests a drug and dose, and lists side effects can sound authoritative even when it’s wrong. That’s how errors get copied into plans. Anything in this category needs strict boundaries, heavy evaluation, and clear accountability.

The safer approach is to treat the model as a “co-pilot.” Use it to guide clinicians through existing pathways and order sets, then have it pull answers only from approved references. Build the workflow so the clinician must review, confirm, and take the final action every time.
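"Pull answers only from approved references" means the system returns nothing rather than improvising when no approved source fits. A minimal sketch, assuming a small in-memory library: the reference IDs and excerpts are placeholders, and keyword overlap stands in for whatever retrieval you actually use (a search index or embedding search, for example).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reference:
    source_id: str  # hypothetical ID of an approved guideline excerpt
    text: str


# Placeholder library; in practice this is your curated, versioned content.
APPROVED = [
    Reference("htn-s4", "Recheck blood pressure within four weeks after starting a new antihypertensive."),
    Reference("ckd-s2", "Monitor creatinine and potassium after starting an ACE inhibitor."),
]


def _terms(text: str) -> set[str]:
    words = (w.strip(".,;:?!").lower() for w in text.split())
    return {w for w in words if len(w) > 3}  # drop short filler words


def lookup(question: str, library=APPROVED, min_overlap: int = 2):
    """Return the best-matching approved excerpt, or None if nothing fits."""
    query = _terms(question)
    best, best_score = None, 0
    for ref in library:
        score = len(query & _terms(ref.text))
        if score > best_score:
            best, best_score = ref, score
    return best if best_score >= min_overlap else None


hit = lookup("When should we monitor creatinine after starting an ACE inhibitor?")
print(hit.source_id if hit else "No approved reference: escalate to clinician")
```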

R&D, Imaging, and Synthetic Data

On the research side, generative artificial intelligence models act like very fast readers and first-draft writers. They help your experts move faster through long text and large image sets.

A trials team can use an assistant to scan charts for a new oncology study. The model pulls key fields, checks them against study rules, and flags likely matches. Staff still confirm each one. Similar tools draft protocol sections and turn stacks of papers into short briefs.
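The "check against study rules" step can be plain, auditable code that runs on the fields the model extracts. A minimal sketch, assuming hypothetical rules (an age range, a required diagnosis, excluded medications) and a simple dictionary of extracted fields; real protocols have many more criteria, and staff still confirm every flagged match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudyRules:
    """Hypothetical eligibility rules for an oncology study."""
    min_age: int
    max_age: int
    required_dx: frozenset
    excluded_meds: frozenset


def screen(patient: dict, rules: StudyRules) -> tuple[str, list[str]]:
    """Check model-extracted chart fields against study rules."""
    reasons = []
    age = patient.get("age")
    if age is None:
        reasons.append("age missing")
    elif not rules.min_age <= age <= rules.max_age:
        reasons.append("age out of range")
    if not rules.required_dx & set(patient.get("diagnoses", [])):
        reasons.append("required diagnosis not found")
    blocked = rules.excluded_meds & set(patient.get("meds", []))
    if blocked:
        reasons.append("excluded meds: " + ", ".join(sorted(blocked)))
    # "likely_match" only queues the chart for staff confirmation.
    return ("likely_match" if not reasons else "not_flagged", reasons)


rules = StudyRules(18, 75, frozenset({"non-small cell lung cancer"}), frozenset({"warfarin"}))
print(screen({"age": 62, "diagnoses": ["non-small cell lung cancer"], "meds": ["lisinopril"]}, rules))
```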

In radiology, a model can turn spoken findings into a structured report or help label thousands of images for training. Radiologists keep control of the final text and sign off.

Synthetic data adds one more tool. A model learns patterns from large datasets, then creates artificial patient records that feel realistic but don't copy any single person. Teams use those records to test analytics, share examples with vendors, or train models when live data access runs tight. You still run checks for privacy leaks and bias, but early tests move faster.
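One basic privacy-leak check is to look for synthetic records that reproduce a real patient's combination of quasi-identifiers. A minimal sketch, assuming hypothetical field names; exact matching won't catch near-duplicates or more subtle leakage, so treat it as a floor rather than a full privacy evaluation.

```python
def leaked_records(real, synthetic, keys=("birth_year", "zip3", "sex", "diagnosis")):
    """Return synthetic records whose quasi-identifier combo matches a real patient."""
    real_combos = {tuple(r[k] for k in keys) for r in real}
    return [s for s in synthetic if tuple(s[k] for k in keys) in real_combos]


real = [
    {"birth_year": 1958, "zip3": "021", "sex": "F", "diagnosis": "type 2 diabetes"},
    {"birth_year": 1990, "zip3": "946", "sex": "M", "diagnosis": "asthma"},
]
synthetic = [
    {"birth_year": 1958, "zip3": "021", "sex": "F", "diagnosis": "type 2 diabetes"},  # copies a real patient
    {"birth_year": 1971, "zip3": "303", "sex": "M", "diagnosis": "asthma"},
]
print(leaked_records(real, synthetic))
```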

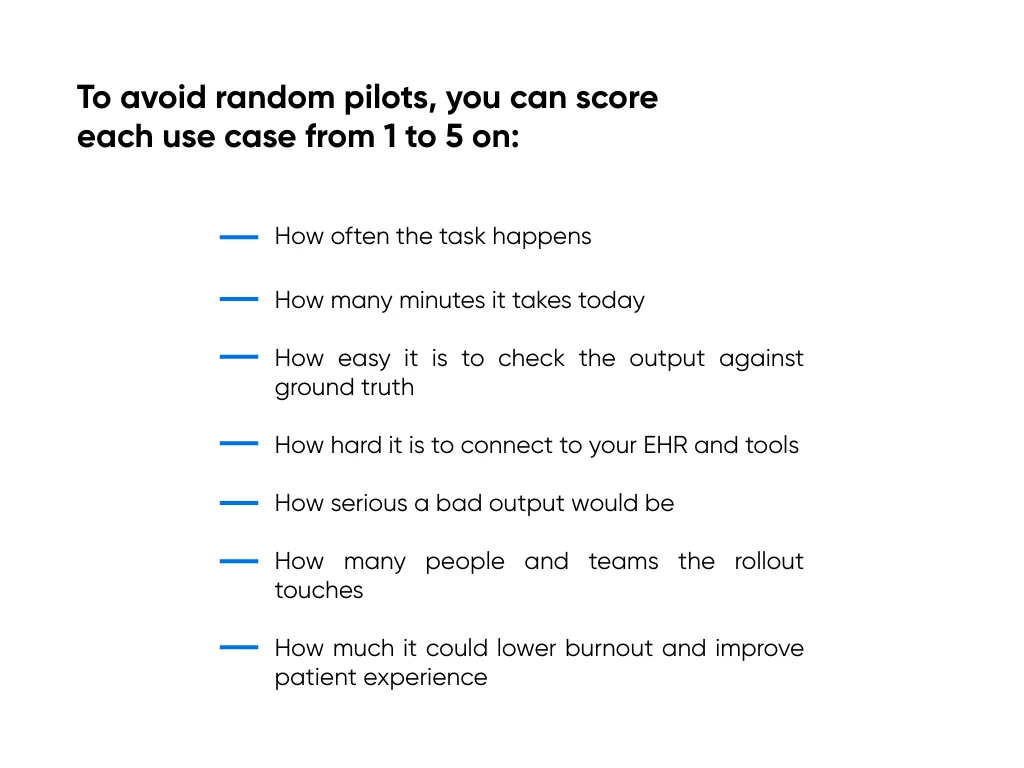

Quick Rubric To Choose Your First Use Cases

Use cases that are high-volume, time-consuming, easy to check against clear ground truth, and low- to medium-risk usually rise to the top. Those tend to be documentation and communication pilots, where staff can review each output and you can see clear wins before you step into higher-risk decision support or research work.
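The rubric can be turned into a quick scoring exercise for a shortlist. A minimal sketch with hypothetical 1 to 5 ratings and equal weights, where risk is inverted so safer work scores higher; your team would set its own scales and weights.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    volume: int        # 1-5: how often the task happens
    time_cost: int     # 1-5: staff time per task
    ground_truth: int  # 1-5: how easily a reviewer can check the output
    risk: int          # 1-5: higher means closer to diagnosis or treatment

    def score(self) -> int:
        # Equal weights, with risk inverted so safer work scores higher.
        return self.volume + self.time_cost + self.ground_truth + (6 - self.risk)


candidates = [
    UseCase("Diagnostic suggestions", volume=3, time_cost=4, ground_truth=2, risk=5),
    UseCase("Portal message drafts", volume=5, time_cost=4, ground_truth=4, risk=2),
    UseCase("Trial eligibility screening", volume=2, time_cost=5, ground_truth=3, risk=3),
]

for uc in sorted(candidates, key=UseCase.score, reverse=True):
    print(f"{uc.score():>2}  {uc.name}")
```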

Limitations of Generative AI in Healthcare

Generative AI can shave minutes off notes and messages. It can also spread mistakes faster than any tired human. The goal is not to fear it, but to know where it can hurt you and what guardrails you need.

Hallucinations and Clinical Safety

Hallucinations happen when the model sounds sure but is wrong. Clinically, they usually show up when the model has to infer more than the data really supports. If the chart is sparse or ambiguous, it will still generate a confident answer by filling in the gaps with what seems statistically likely.

In a specialty clinic, for example, a GenAI note assistant might state that a patient is “on long-term anticoagulation for atrial fibrillation” because it sees warfarin on the med list, even though the drug was prescribed for a different reason. The note sounds polished and accurate, but it’s wrong. And that wrong detail can quietly propagate into future orders, refills, and decisions.

Safer patterns include:

- Grounded answers that pull from the chart or a curated library

- Narrow tasks like “extract medication changes from this note” or “draft a patient-friendly summary of today’s plan,” rather than broad prompts like “summarize this visit.”

- Required human review before anything affects orders, meds, or diagnoses

Wide-open medical advice with no grounding and no human check sits on the unsafe side.
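A simple grounding check catches the kind of error in the warfarin example: every discrete claim in a draft should trace back to the chart. A minimal sketch, assuming the model's output has already been split into short claims; case-insensitive substring matching is crude and misses synonyms and paraphrase, so it only decides what gets held for human review.

```python
def unsupported_items(extracted: list[str], source_text: str) -> list[str]:
    """Return extracted items that never appear in the source chart text."""
    source = source_text.lower()
    return [item for item in extracted if item.lower() not in source]


chart = "Meds: warfarin 5 mg daily. Indication: DVT."
draft_claims = ["warfarin", "atrial fibrillation"]

missing = unsupported_items(draft_claims, chart)
if missing:
    print("Hold for review; not found in chart:", missing)
```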

Privacy, PHI Exposure, and Security

Every prompt, log, and training set can hold protected health information. That includes chat text, system logs, and any data sent to outside model hosts.

Risk grows when staff paste PHI into public tools or when vendors reuse data to train general models. Policy slides alone don't help if your system design still sends PHI to places it should never go. Your rules and your architecture need to match.

At a minimum, you need vendor agreements, clear PHI limits, tight access control, and logs that let you see who touched what and when.
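"Who touched what and when" maps naturally onto one structured log line per action. A minimal sketch, assuming raw prompts and outputs live in a separate access-controlled store; storing hashes here is one design option that lets reviewers match log lines to content without copying PHI into general-purpose logs. The action names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_entry(user_id: str, role: str, action: str, prompt: str, output: str) -> str:
    """Build one JSON audit line: who did what, when, and to which content."""
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "role": role,
        "action": action,  # hypothetical verbs, e.g. "draft_generated", "draft_signed"
        # Hashes link this line to content held in a secured store.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
    }
    return json.dumps(entry, sort_keys=True)


line = audit_entry("u-1042", "rn", "draft_generated", "Summarize chart for Jane Doe", "Draft summary ...")
print(line)
```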

Bias, Equity, and Data Representativeness

Models learn from medical data, and that data doesn't represent every group equally. Some groups appear less often. Some fields go missing more often. Where and how you deploy the tool adds another layer.

That mix can lead to weaker answers or worse suggestions for certain patients, especially those with rare diseases or less common medical conditions. Over time, small gaps can turn into patterns in who gets clear, accurate help.

You cut this risk with steady checks. Test outputs across groups, involve equity and quality leaders, and watch trends over time. You will not erase bias, but you can stop it from spreading quietly through your workflows.
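"Test outputs across groups" can begin with comparing error rates from sampled clinician reviews. A minimal sketch, assuming each review is recorded as a (group label, had error) pair; the group labels, the 5-point margin, and the minimum sample size are hypothetical, and a fuller analysis would add confidence intervals.

```python
from collections import defaultdict


def flag_group_gaps(reviews, margin=0.05, min_n=30):
    """Flag groups whose review error rate exceeds the overall rate by `margin`.

    Groups with fewer than `min_n` samples are listed separately, since
    their rates are too noisy to compare.
    """
    totals, errors = defaultdict(int), defaultdict(int)
    for group, had_error in reviews:
        totals[group] += 1
        errors[group] += int(had_error)
    if not totals:
        return [], []
    overall = sum(errors.values()) / sum(totals.values())
    flagged, too_small = [], []
    for group in sorted(totals):
        if totals[group] < min_n:
            too_small.append(group)
        elif errors[group] / totals[group] - overall > margin:
            flagged.append(group)
    return flagged, too_small


reviews = [("A", False)] * 45 + [("A", True)] * 5 + [("B", False)] * 35 + [("B", True)] * 15 + [("C", True)] * 3
print(flag_group_gaps(reviews))
```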

Responsible GenAI Adoption in Healthcare

You don't need a huge AI program or complex machine learning stack. You need clear owners, clear rules, and one path from idea to pilot to everyday use. A four-step loop works well here: explore, prepare, launch, and sustain.

Governance Operating Model

Start with one small group that can say yes, no, or not yet. Include people from clinical teams, compliance and legal, security, data and IT, and operations.

This group picks which ideas you test, which pilots run, and which projects go live. They also match review effort to risk so high-impact ideas get more scrutiny.

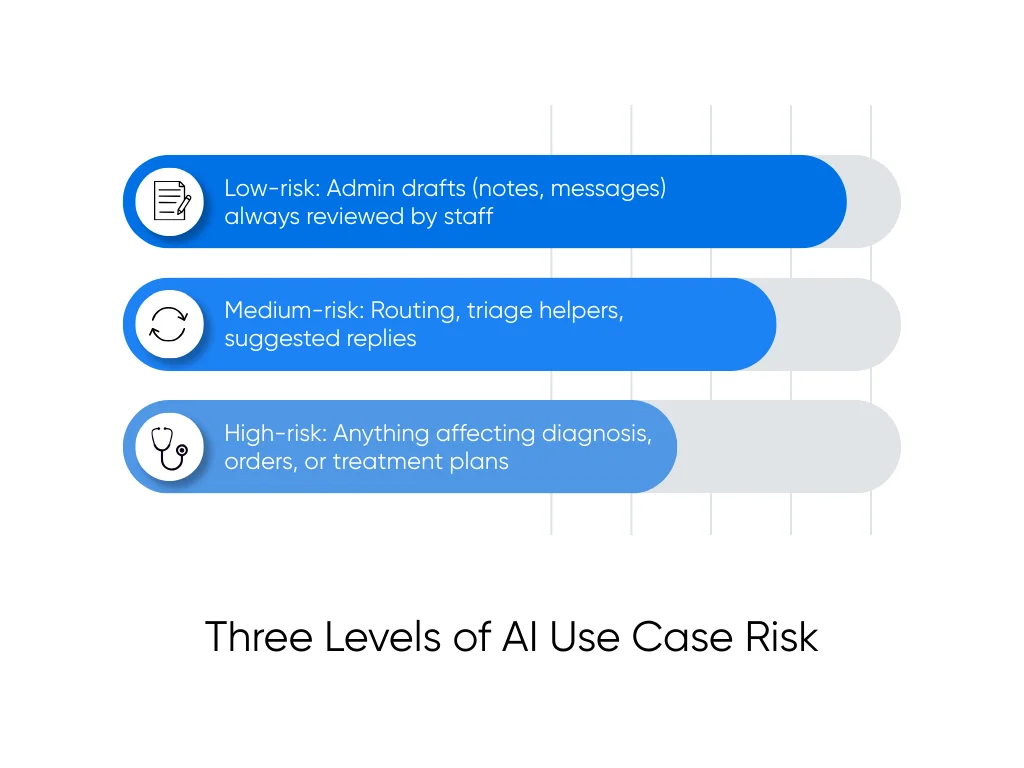

Assign each use case to one of three risk tiers:

- Low-risk covers admin drafts like notes or messages that staff always review

- Medium-risk covers routing, triage helpers, or suggested replies

- High-risk covers anything that can change diagnosis, orders, or treatment plans

Higher tiers need deeper testing, more sign-offs, and slower rollout. That keeps high-risk ideas from jumping ahead of your safety work.
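The tiers only work if they gate launches. A minimal sketch, assuming hypothetical sign-off roles per tier: it classifies a use case by what it can affect and reports which approvals are still missing before go-live.

```python
from enum import Enum


class Tier(Enum):
    LOW = "low"        # admin drafts staff always review
    MEDIUM = "medium"  # routing, triage helpers, suggested replies
    HIGH = "high"      # anything that can change diagnosis, orders, or treatment


# Hypothetical sign-off roles; your governance group sets the real list.
REQUIRED_SIGNOFFS = {
    Tier.LOW: {"clinical_lead"},
    Tier.MEDIUM: {"clinical_lead", "compliance"},
    Tier.HIGH: {"clinical_lead", "compliance", "security", "quality"},
}


def classify(changes_care: bool, routes_or_triages: bool) -> Tier:
    if changes_care:
        return Tier.HIGH
    if routes_or_triages:
        return Tier.MEDIUM
    return Tier.LOW


def launch_gate(tier: Tier, signoffs: set[str]) -> tuple[bool, list[str]]:
    """Return whether a use case may go live and which sign-offs are missing."""
    missing = sorted(REQUIRED_SIGNOFFS[tier] - signoffs)
    return (not missing, missing)


tier = classify(changes_care=False, routes_or_triages=True)
print(tier, launch_gate(tier, {"clinical_lead"}))
```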

HIPAA-Aligned Data Handling

Data rules should feel as clear as your med safety rules. Everyone should know what is allowed and what is off-limits.

We treat these as non-negotiable for GenAI that touches PHI:

- PHI minimization in prompts, logs, and training sets

- Role-based access to models, consoles, and logs

- Detailed logging of prompts, outputs, and user actions

- Clear retention limits for all GenAI data

- Vendor BAAs and security checks where PHI is involved

- Secure environments for any system that touches live patient data

We break these into steps in our guide on making any AI model safe under HIPAA, which many teams use as a working checklist.
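PHI minimization often starts with a redaction pass before text reaches prompts or logs. A minimal sketch, assuming hypothetical patterns (MRN formats vary by organization); regexes like these miss names, dates, and addresses, so this is a first layer, not a substitute for a full de-identification review.

```python
import re

# Hypothetical patterns; tune them to your own identifier formats.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
}


def redact(text: str) -> str:
    """Replace matched identifiers with a bracketed label before logging."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Call 555-123-4567 re MRN: 00123456, email jo@example.com"))
```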

Transparency, Accountability, and Continuous Evaluation

Governance often gets blamed for slowing projects. But loose governance usually means you redo work after audits, safety flags, or pushback from clinicians.

So you need ongoing checks, not only a launch test. For each live use case, track workflow time, edit and error rates, safety incidents, bias signals, and how often people choose to use the tool.

Give every system a clear owner who watches those numbers and reports to your group on a regular basis. That habit helps you spot trouble early, retire weak pilots, and grow the GenAI projects that truly support your teams.
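An owner's weekly check can be as simple as comparing each live use case against agreed limits. A minimal sketch, assuming hypothetical metric names and thresholds; the point is that each limit is written down and checked the same way every week.

```python
from dataclasses import dataclass


@dataclass
class WeeklyMetrics:
    use_case: str
    edit_rate: float       # mean share of drafts changed before sign-off
    error_rate: float      # errors found in sampled reviews
    safety_incidents: int
    adoption: float        # share of eligible encounters where staff used the tool


# Hypothetical limits; each owner agrees on these with the governance group.
MAX_LIMITS = {"edit_rate": 0.40, "error_rate": 0.02, "safety_incidents": 0}
MIN_ADOPTION = 0.30


def alerts(m: WeeklyMetrics) -> list[str]:
    """List every metric that breached its limit this week."""
    out = [
        f"{m.use_case}: {name}={getattr(m, name)} above limit {limit}"
        for name, limit in MAX_LIMITS.items()
        if getattr(m, name) > limit
    ]
    if m.adoption < MIN_ADOPTION:
        out.append(f"{m.use_case}: adoption={m.adoption} below {MIN_ADOPTION}")
    return out


print(alerts(WeeklyMetrics("portal_replies", edit_rate=0.25, error_rate=0.01, safety_incidents=1, adoption=0.6)))
```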

Deploying Generative AI for Healthcare with Aloa

In healthcare, even a basic GenAI prototype takes a lot of effort. You need access to the right data, tight control over where PHI flows, and a design that fits real clinical workflows. At Aloa, we start by solving that foundation (security, integrations, and governance), then move into small, testable builds that clinicians can validate quickly before anything scales.

Discovery and Use-Case Scoping

We start with one clear problem, not a model. Together, we map the workflow, who touches it, how long it takes, and how you plan to measure success. We also agree on hard lines, like “no PHI leaves this network” or “the model never changes orders.”

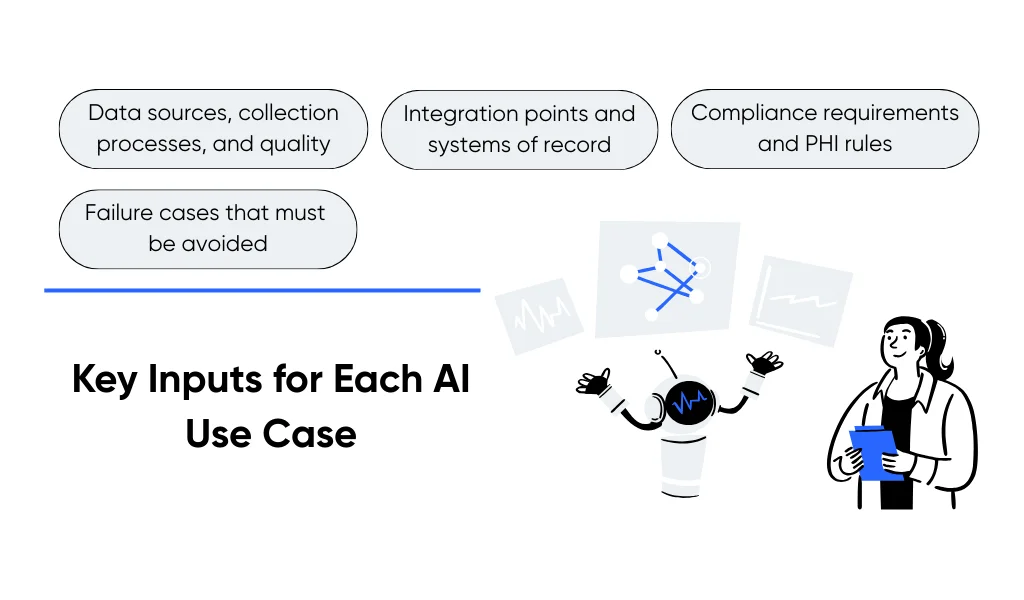

For each idea, we collect a short input set:

- Data sources, data collection processes, and data quality

- Integration points and systems of record

- Compliance and PHI rules

- Failure cases that must never happen

Our team runs this as AI consulting for healthcare workflows so strategy and build are tightly linked.

Rapid Prototyping and Evaluation

Next, we build a focused prototype with only the data it needs. Your clinicians and staff test it on real charts, messages, or images in a controlled environment, then flag errors, tone issues, and hallucinations.

We track time saved, edit rates, and error patterns, not model scores alone. Our generative AI development services follow your security, logging, and access rules from day one, so you won't need a full rebuild later.

How We Help You Scale

Pilot success doesn't guarantee scale, especially once you plug into the EHR and identity systems. We plan for growth early with monitoring, alerts, audit logs, and role-based access.

We then embed assistants into tools your teams already use, like the EHR or ticketing systems. Our digital transformation and workflow automation work updates the plumbing around the model, so your GenAI projects can move from pilot to a stable, supported part of care delivery.

Key Takeaways

If you remember one thing, let it be this: start with text-heavy workflows where humans can easily review and correct the output. That usually means notes and patient messages. Pick one workflow, agree on what “good” looks like in time saved and errors caught, and learn there before you touch anything high-risk.

There are real risks involved with this innovation: hallucinations, privacy leaks, and bias all mean implementations need careful design. The good news is that, as of 2026, the technology is widely adopted, and it’s easier than ever to find teams with real, healthcare-specific GenAI experience.

At Aloa, we live in that mix of ambition and caution. Our team runs calm, focused working sessions, builds targeted proofs of concept, and then wires them into your EHR and tools with the data and compliance guardrails that generative AI for healthcare requires. Talk to our team today!

FAQs About Generative AI for Healthcare

Is generative AI safe for healthcare?

It depends on how you use it. Low-risk uses, like drafting notes or messages, can be quite safe when you ground outputs in chart data, keep the task narrow, and require human review. Higher-risk uses, like diagnosis or treatment suggestions, need strict testing, clear limits, and close monitoring for errors and safety events.

Can generative AI be HIPAA compliant?

Yes, but only with strong controls. You need PHI minimization, role-based access, detailed audit logs, clear data retention rules, and vendor agreements when PHI touches outside systems. Governance has to cover prompts, logs, and training data, not only the main database. At Aloa, we help teams design HIPAA-aligned AI systems and share practical steps in our guide on making any AI model safe under HIPAA and related case studies.

What are the best use cases of generative AI in healthcare today?

The strongest uses right now handle language-heavy work that staff can check easily. Examples include visit notes, chart summaries, referral letters, portal replies, triage messages, and research summaries. Reviews and early deployments highlight documentation and admin support as leading use cases to reduce burnout and free up more time for care.

What are examples of generative AI in hospitals and clinics?

Common examples include AI scribes that draft visit notes, tools that summarize long charts before a consult, helpers that suggest patient-friendly portal messages, and contact center assistants that draft call scripts. On the research side, teams use generative AI to summarize papers, help screen for trial eligibility, and create synthetic patient data for testing.

How should GenAI roll out across a healthcare organization?

Treat it as a staged rollout. Start with a small pilot in one workflow, with clear success metrics, a risk tier, and on-call owners. Evaluate accuracy, time saved, and safety signals, then harden the system with logging, monitoring, and training before you scale to more sites or teams. A cross-functional governance group should approve each step from pilot to wider deployment. Aloa’s generative AI services for healthcare help teams design this roadmap, build the pilot, and wire successful assistants into the EHR and operations with the right guardrails in place.

What are the biggest risks of generative AI in healthcare?

The main risks include hallucinations (where the model sounds confident but gets facts wrong), privacy and PHI exposure, weak or biased data, and unfair performance across patient groups. These risks can grow fast because AI outputs look polished and can reach many patients at once. That is why health leaders focus on strong governance, secure data design, and constant monitoring for errors, safety issues, and equity gaps.