Ever sat in a meeting where someone says, “AI is going to revolutionize healthcare,” and you’re left wondering… okay, but how? Yeah, me too. Because here’s the thing: AI in medicine can be transformative. I’ve seen it with medical transcription. We built a system at Aloa that turns hours of spoken notes into accurate medical text by combining speech recognition, large language models (LLMs), and Rx databases.

But for every win like that, there’s a project that can get stuck on HIPAA alone. HIPAA compliance is critical to every healthcare application, and as a decision-maker, it’s the first hurdle you have to clear. But it’s not the last: Building AI in medicine means working through a series of challenges, and I’ve been through many of them.

This guide provides a practical path for transforming AI from an idea into a working system. Here’s what you’ll get:

- Where AI is already proving itself in medicine

- A framework to move projects from pilot to production

- Compliance rules that protect patients without killing momentum

- The real cost tradeoffs behind healthcare AI

- How to future-proof systems so they last beyond version one

Think of this as a field guide from someone who actually works with healthcare AI.

The Current State of AI in Medicine

AI in medicine is used to speed up clinical note-taking, analyze medical images for earlier disease detection, support personalized treatment planning through genetic and risk data, and improve patient access with chatbots. These tools ease clinician burnout, shorten diagnosis time, and deliver more tailored patient care.

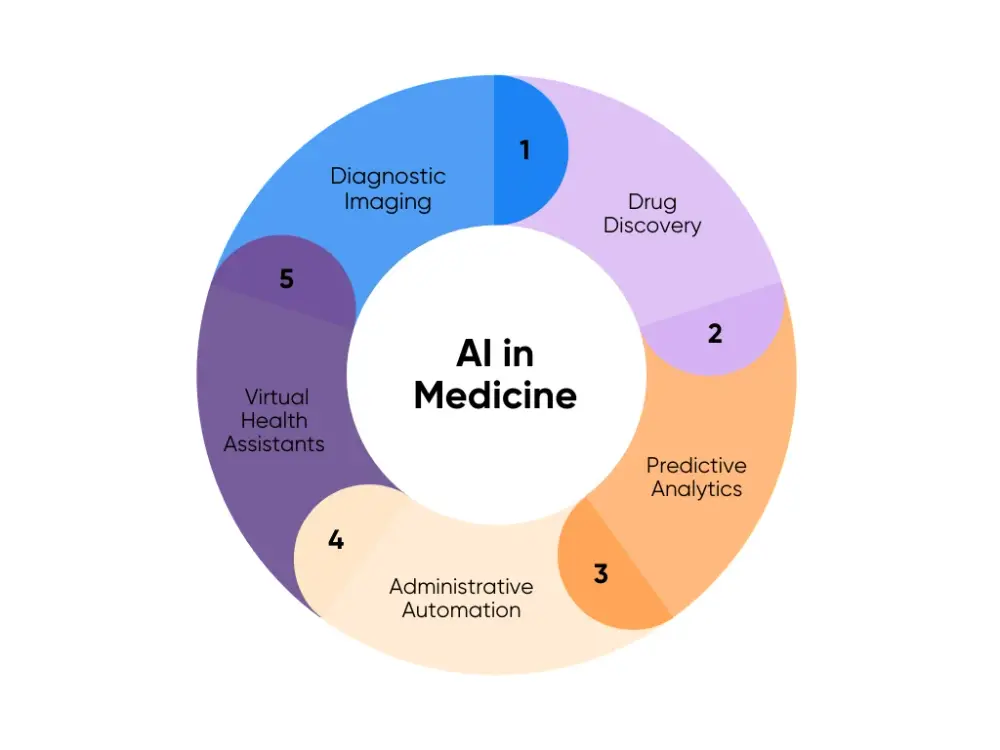

We’re past asking if AI can help. The real question is: where is it actually pulling its weight? Six use cases stand out and are working in daily care.

Current Applications

Here’s how AI is being used in hospitals and clinics right now:

1. Diagnostic Imaging: AI can read X-rays, MRIs, and CT scans using deep learning to flag tumors, fractures, or neuro issues. It acts as a second set of eyes, leading to a quicker disease diagnosis.

One study showed radiologists finished reports 15% faster with AI. Another review found AI reconstructions made medical images sharper while lowering radiation.

2. Drug Discovery: Machine learning models can predict how a new compound behaves before it ever hits a test tube. Toxicity, drug-target fit, and side effects are all mapped out virtually.

Biotechs now run thousands of molecules through these models to shorten medical research and drug development cycles. That levels the playing field a bit and sends only the best candidates into clinical trials.

3. Predictive Analytics: AI algorithms trained on electronic health records and claims data can forecast who’s likely to bounce back into the hospital, how a disease might progress, or when a patient is about to crash. That way, your team can adjust treatment plans sooner.

Take Johns Hopkins’ TREWS system: it flagged 82% of sepsis cases early. When providers acted within three hours, mortality dropped. That’s huge. Hours matter. These tools flip the script to prevention and support public health programs.

4. Administrative Automation: If you’ve ever seen a doctor stuck at a laptop at midnight, you know the problem. Natural language processing (NLP) tools now take their words (spoken or typed) and turn them into structured notes, codes, or even scheduling inputs.

Abridge is already live in over 100 health systems in the United States. It captures natural language from the visit and spits out chart-ready notes. That’s cleaner data, fewer errors, and yes, doctors actually getting home on time.

5. Virtual Health Assistants: Think of these as the AI front desk. Chatbots and apps handle intake, symptom checkers, med reminders, and primary care follow-ups. They don’t replace healthcare professionals, but they take the edge off the volume.

Cedars-Sinai rolled out a digital health platform that’s already supported 42,000 patients. Routine questions get answered instantly. Staff stick to the high-stakes calls. Patients get faster responses and a better patient experience without adding headcount.

6. Ambient AI Scribes: Doctors lose hours every week to paperwork, and it wears them down. Ambient AI scribes are easing that load by listening in on visits and turning the conversation into ready-to-use notes.

Mass General Brigham researchers surveyed more than 1,400 physicians and advanced practice providers across their hospitals and Emory Healthcare who were using these tools. Burnout dropped by 21.2% in under three months, and documentation-related wellbeing jumped by 30.7% in just two. Every note was still reviewed, but the time saved gave care teams more room for patients.

Implementation Challenges

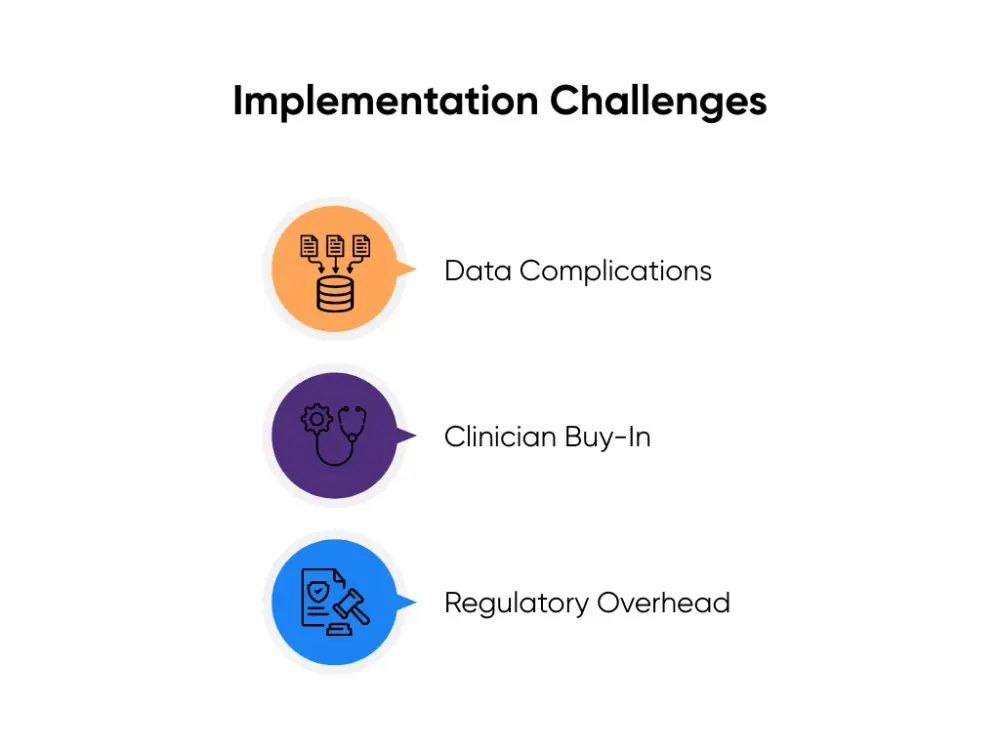

Of course, none of this runs smoothly out of the box. Here’s where I see things break, and how smart teams work around it:

- Data Complications: AI only performs as well as the data it’s fed, and most EHRs are messy. Fields are inconsistent, formats don’t line up, and pulling clean data feels like digging through a junk drawer. Start with a narrow use case and clean that slice of data in a sandbox. Prove the model works there before expanding.

- Clinician Buy-In: The first features you build should hit real pain points, like cutting down documentation time or reducing repetitive admin work. That’s how you win user trust. Keep the training light, let clinicians give feedback right in the workflow, and show the time savings quickly. Nothing builds adoption faster than a doctor getting an hour of their day back.

- Regulatory Overhead: HIPAA reviews, vendor checks, and legal sign-offs can take months. And even once you’re live, someone has to own it: tracking accuracy, retraining models, handling false alarms. It’s not a one-and-done project. The teams that succeed budget time and money for that ongoing ownership.

At Aloa, we’ve shipped tools where the scope was deliberately contained: one workflow, one metric, one integration. You might know it as the “Minimum Viable Product” in software development. Always prove value in 6–8 weeks, then expand. That’s how you build momentum without wasting time and money.

So once you’ve cleared the first hurdle, how do you go from there? That’s when you need a framework, or a map that takes you from pilot to production. We’ll discuss that next.

Strategic Framework for AI Adoption

AI projects rarely fail because the algorithms aren’t good enough. They fail because nobody mapped the path. That’s when you get stuck in pilots that never scale, integrations that collapse under pressure, or staff who quietly stop using the tool.

A framework stops that slide. It gives you checkpoints so you know when to move forward, when to fix plumbing, and when to stop before wasting another quarter. This is the same playbook I’ve used at Aloa to take AI from pitch deck to production inside real healthcare systems.

I break it into three phases: assessment, roadmap, and change management.

Assessment Phase

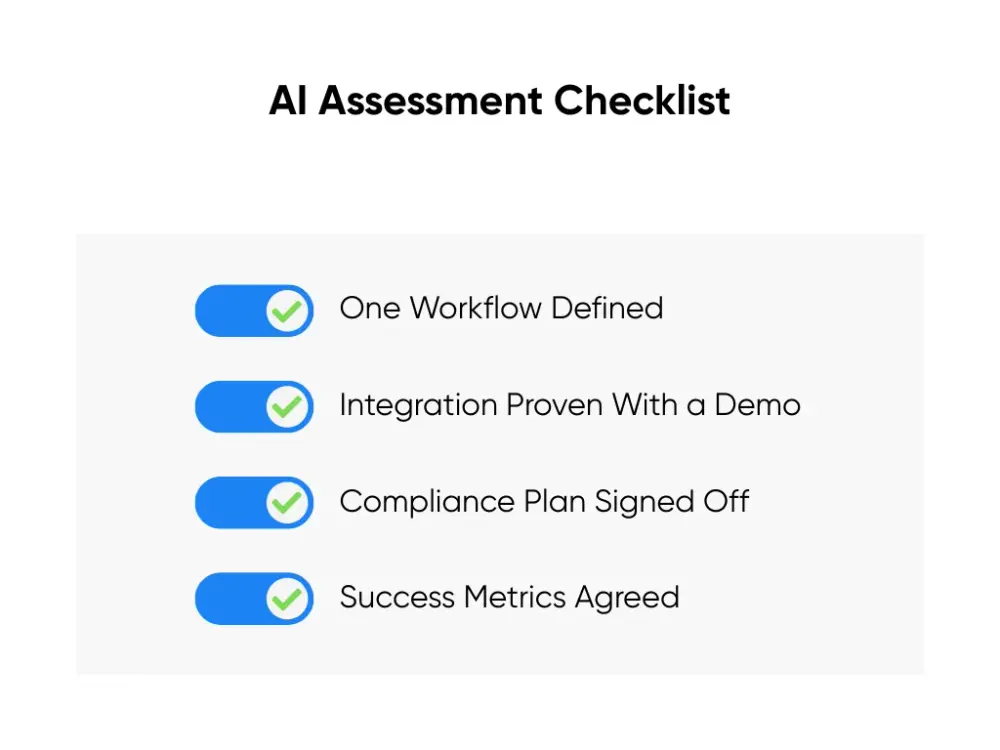

Before you even think about spinning up code, you’ve got to know what “good” looks like. Otherwise, you’ll build a shiny tool nobody wants to use.

Let’s start with the basics:

- Integration: Don’t believe the “we integrate with Epic” line on a sales deck. Ask the vendor to show you (live). If you don’t see data flowing in and results flowing back, assume you’ll end up paying your engineers to duct-tape it later.

- Workflow Fit: If you make people open another tab, forget it. That readmission risk score might be brilliant, but if it’s buried in a dashboard nobody checks, it’s dead on arrival. Put it right in the discharge screen the nurses already live in, and now it’s useful.

- Compliance: This is the boring part everyone wants to skip. Don’t. BAAs, PHI scoping, audit logs. If you wait until go-live to ask legal for a blessing, you’re toast.

- Business Case: Accuracy stats look great in a pitch, but accuracy alone doesn’t pay salaries. What pays is hours saved, denials avoided, beds freed up. An imaging AI that cuts turnaround by 40%? That’s real money and better patient flow.

Here’s the gut-check list I keep taped on the wall: integration, workflow fit, compliance, and business case.

If you can’t tick all four, you’re not ready. And if you’re not ready, don’t start. You’ll just burn time and goodwill.

Implementation Roadmap

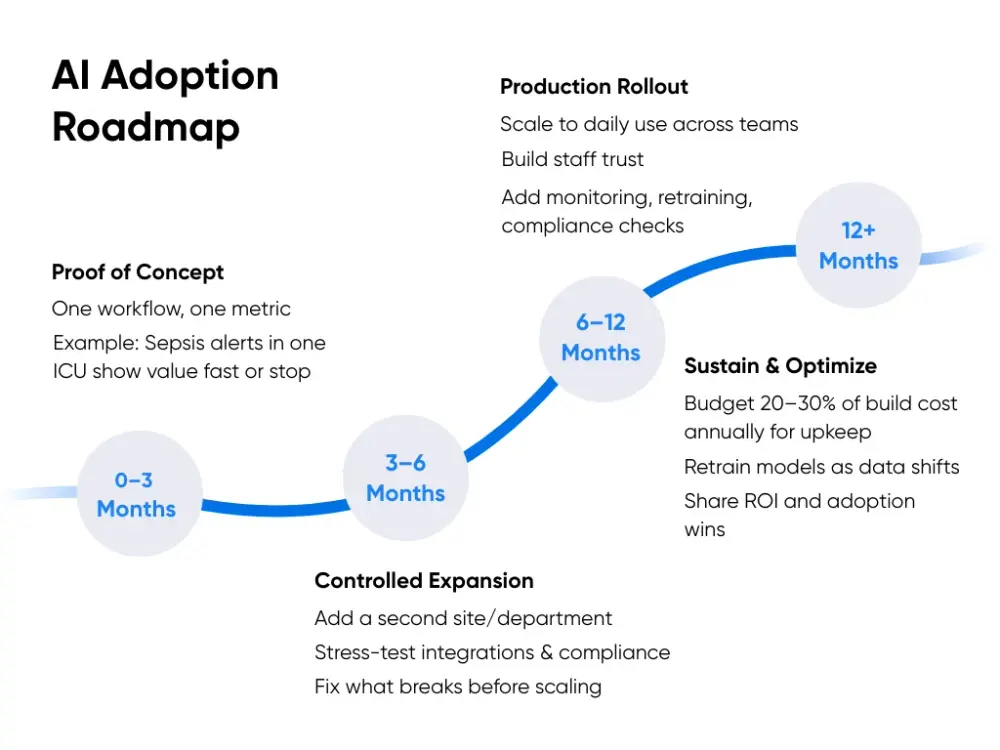

Pilots are fine for testing applications of AI. Living in pilots is not. The roadmap is what moves you from “let’s test it” to “this runs every day.” Without it, projects stall. With it, you know what to expect at each stage and when to call it done.

Here’s how I’d structure it:

0–3 Months

The first phase is proof of concept. You start with one workflow and one metric. Keep it narrow so you can measure it cleanly. For example:

- A sepsis alert in one ICU

- A scheduling tool in one clinic

- An NLP note-taker in a single department

The goal isn’t perfection, but proof. Does the tool connect to your system? Does it show hours saved, denials avoided, or turnaround cut?

By month three, you should have:

- A defined workflow with a clear metric

- A live demo showing real integration

- Early numbers you can take to leadership

If the tool can’t clear that bar, stop. It’s cheaper to pull the plug than drag a weak pilot through another nine months.

3–6 Months

If it passes, you move into controlled expansion. Roll it into a second site or a larger department. Test it with a different patient group. Expect friction here:

- APIs stall

- Data feeds choke

- Compliance flags issues

But that’s the point. Better now than later. Don’t take “we integrate with Epic” as proof. Demand a live FHIR demo with data flowing both ways. Scope PHI so the tool only sees what it needs.
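To make “scope PHI so the tool only sees what it needs” concrete, here’s a minimal Python sketch. It assumes a FHIR-style Patient resource that has already been parsed into a dict. The `ALLOWED_FIELDS` allow-list and the `scope_patient` helper are hypothetical names I made up for illustration; they are not part of any FHIR library, and the right field list depends on your use case.

```python
# Hypothetical allow-list: the only Patient fields this example tool needs.
ALLOWED_FIELDS = frozenset({"resourceType", "id", "gender", "birthDate"})


def scope_patient(resource: dict, allowed: frozenset = ALLOWED_FIELDS) -> dict:
    """Return a copy of the resource with only allow-listed top-level fields."""
    return {key: value for key, value in resource.items() if key in allowed}


# Made-up FHIR-style Patient payload for illustration only.
patient = {
    "resourceType": "Patient",
    "id": "example-123",
    "name": [{"family": "Doe", "given": ["Jane"]}],
    "telecom": [{"system": "phone", "value": "555-0100"}],
    "gender": "female",
    "birthDate": "1980-04-02",
}

# Keeps resourceType, id, gender, and birthDate; name and telecom are dropped.
scoped = scope_patient(patient)
```

An allow-list tends to be safer than a block-list here: if the upstream system starts sending a new field, it stays out of the tool until someone deliberately adds it.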

By the six-month mark, you should have:

- Logs that prove integration is real

- A compliance review signed off

- Early ROI numbers that justify going further

6–12 Months

This is the production rollout. By now, staff trust the system because they’ve seen it work. You scale across more workflows and sites. You also add safeguards. Monitoring dashboards track performance. Audit logs let compliance trace every action. Retraining plans keep models from drifting.
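One simple way to picture drift monitoring: compare accuracy on recently reviewed predictions against the accuracy you measured at launch, and flag the model when the gap gets too wide. The sketch below is only that idea in Python. The function name and the 5-point tolerance are assumptions for illustration, and a real monitoring setup would usually track more than a single metric.

```python
def needs_retraining(baseline_accuracy: float,
                     recent_outcomes: list[bool],
                     tolerance: float = 0.05) -> bool:
    """Flag drift when recent accuracy falls more than `tolerance` below baseline.

    `recent_outcomes` holds True for each reviewed prediction that was correct.
    """
    if not recent_outcomes:
        return False  # Nothing reviewed yet, so no evidence of drift.
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    return (baseline_accuracy - recent_accuracy) > tolerance


# Baseline was 90%; only 8 of the last 10 reviewed predictions were correct.
drifting = needs_retraining(0.90, [True] * 8 + [False] * 2)
```

With those made-up numbers, recent accuracy is 80%, the drop is about 10 points, and `drifting` comes back `True`.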

Generative AI can help here as decision support, but keep it safe. Use it for discharge notes drafted for nurse review. Use it to pull internal protocols with retrieval-augmented search. Don’t let it diagnose.

By the one-year mark, you should have:

- Daily use across multiple sites

- Monitoring and audit logs live

- Retraining scheduled and documented

- Adoption above 70%

- Compliance fully signed off

Beyond 12 Months

This is sustainment. AI is not set-and-forget. If you don’t plan for upkeep, you slide backwards. Budget 20–30% of build cost each year for retraining, monitoring, and compliance.
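The arithmetic is simple, but it helps to put a number in front of finance. Here’s a small Python sketch; the $150,000 build cost is a hypothetical figure for illustration, and the function name is my own.

```python
def annual_upkeep_range(build_cost: float,
                        low_pct: int = 20,
                        high_pct: int = 30) -> tuple[float, float]:
    """Return the (low, high) yearly sustainment budget for a given build cost."""
    return build_cost * low_pct / 100, build_cost * high_pct / 100


# Hypothetical $150,000 build.
low, high = annual_upkeep_range(150_000)
# low == 30000.0, high == 45000.0
```

So a $150K build implies roughly $30K–$45K a year for retraining, monitoring, and compliance.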

Keep staff bought in by showing results. Publish the wins:

- Hours saved

- Denial rates down

- Throughput up

By this stage, you should also expect:

- Quarterly ROI and adoption reviews

- Annual retraining and compliance checks

- Documented improvements in efficiency and patient flow

This roadmap keeps momentum when the project is working and allows you to stop fast when it’s not. If you want to see what that looks like in real hospital ops, from imaging workflows to scheduling backlogs, I covered it in AI in Healthcare: 3 Ways AI is Transforming Operations in 2025.

Change Management

AI often fails because people don’t use it. A tool that adds clicks, creates doubt, or feels forced will get ignored no matter how good the model is. Change management is what turns “a pilot that works on paper” into “a system staff rely on every day.”

Here’s what works:

- Training: Keep it short, practical, and tied to real tasks. A nurse doesn’t need to know the math behind an algorithm, but she needs to see how a sepsis alert shows up in her workflow. A scheduler needs to see how the AI rebooks a no-show. Training should look like their workday, not a lecture hall. Aim for quick sessions that can be repeated as new staff come on board.

- Communication: AI loses trust when it looks like a black box. Show staff why the tool flagged something and how it fits into the decision process. And don’t wait months to share impact. Celebrate the small wins early and loudly, like “Charting time down 25% this week.” Those numbers travel fast in a hospital and turn skeptics into adopters.

- Resistance: Pushback is normal. Treat it as data, not as defiance. Side-by-side pilots let staff compare their judgment with the AI’s. When they see the tool catch what they missed, or speed up a task without cutting corners, trust grows. Forcing adoption from the top down rarely sticks. Proving usefulness does.

- SMB vs. Enterprise: Large health systems have whole departments for change management. They also move at the speed of committees. SMBs in the healthcare industry don’t have that luxury, which is actually an advantage. A 200-person hospital can test, decide, and adapt in weeks instead of months. Use that speed. Keep communication direct, feedback loops short, and rollouts incremental.

- Sustainability: Change sticks only if staff feel the tool saves them time. If adoption feels like overhead, people find ways around it. Bake in regular check-ins, share visible impact, and adjust workflows quickly when staff point out friction.

And remember: a 200-person hospital doesn’t need the same machinery as a 20,000-person system. Lean into the speed that gives you.


The point of the framework is to give you guardrails so you don’t stall in pilots, lose trust, or burn cash. Assessment keeps you honest, the roadmap keeps you moving, and change management keeps your team with you. Get those three right, and you’ve got a real shot at turning AI from idea into impact.

Regulatory Compliance and Ethics

AI in healthcare is also about trust. A model that reads scans faster or drafts notes in seconds won’t matter without data privacy. Weak compliance erodes that trust fast. Patients pull back, regulators step in, and projects stall. That’s why compliance and ethics are the foundation that keeps everything else standing.

HIPAA and Legal Requirements

HIPAA sets the baseline for protecting patient information in the U.S. When AI enters the picture, the same rules apply. But the risks multiply if the basics aren’t handled correctly. In practice, that comes down to four things:

- PHI Handling: Any data that can identify a patient has to be protected. Training an AI model on clinical notes or lab results is only allowed if the data is properly de-identified or covered under formal agreements.

- Business Associate Agreements (BAAs): If a vendor touches PHI at any point, a BAA must be signed. It’s the legal contract that ties them to HIPAA standards and makes them accountable for how data is managed.

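- Breach Notifications: If data is exposed (whether through human error, a system flaw, or misuse), organizations are required to notify affected patients and regulators without unreasonable delay. Under HIPAA’s Breach Notification Rule, notices to affected individuals are due no later than 60 days after the breach is discovered. Using AI doesn’t reduce that responsibility.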

- Audit Obligations: Regulators expect traceability for health information. That means keeping detailed logs of who accessed what, when, and how PHI was used. Without that documentation, proving compliance is almost impossible.
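Here’s a rough sketch of what “who accessed what, when, and how” can look like as a log record, using only Python’s standard library. The field names, the `audit_entry` and `append_audit` helpers, and the JSON-lines file format are all assumptions for illustration. A production system would also need tamper-evident storage and a retention policy, which this sketch does not cover.

```python
import json
from datetime import datetime, timezone


def audit_entry(user_id: str, patient_id: str, action: str, purpose: str) -> str:
    """Build one JSON line recording who accessed what, when, and why."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "patient_id": patient_id,
        "action": action,
        "purpose": purpose,
    }
    return json.dumps(record, sort_keys=True)


def append_audit(path: str, entry: str) -> None:
    """Append the entry to a log file; append mode never rewrites earlier lines."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(entry + "\n")


# Hypothetical IDs for illustration.
entry = audit_entry("nurse-17", "patient-42", "read", "sepsis-alert-review")
```

One JSON object per line keeps the log easy to search later, which is most of what you need when compliance asks to trace a specific access.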

At Aloa, we design around these requirements from day one. Every healthcare AI model we ship is built to operate within HIPAA standards, with BAAs signed, audit trails in place, and PHI safeguards applied. That way, clients aren’t left trying to bolt compliance on after the system is live.

Beyond Compliance: Protecting Patient Data

Meeting HIPAA is table stakes. To really build trust, healthcare leaders have to go further. Patients don’t care whether you’ve checked the legal boxes; they care about data protection that holds up.

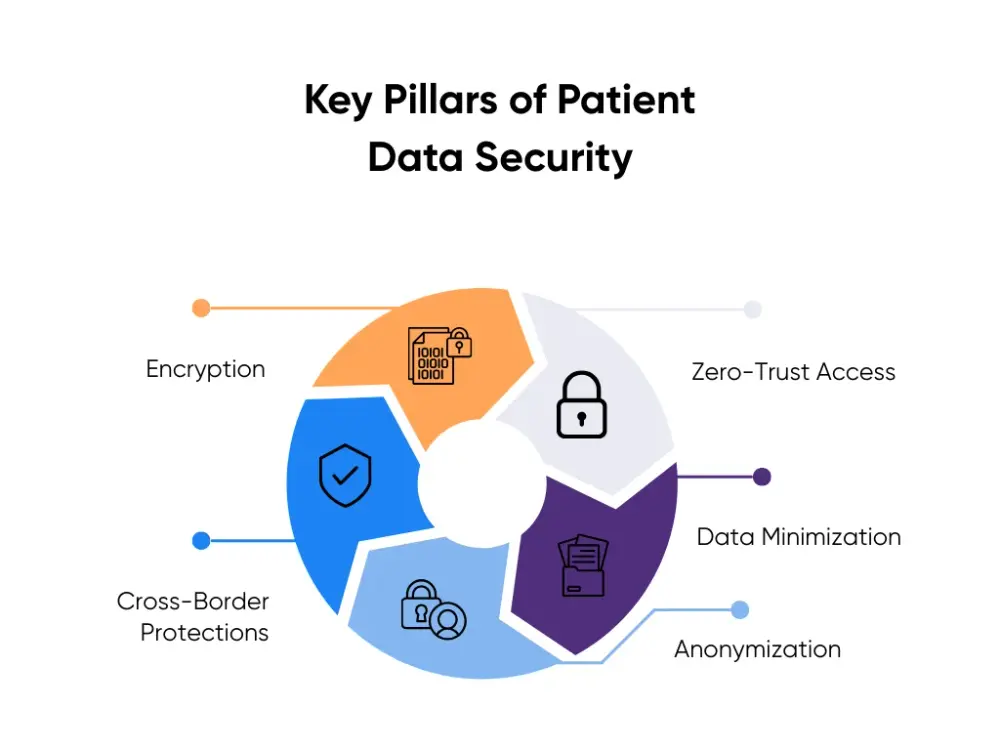

You should adopt best practices that apply globally, not just locally:

- Encryption: Protect data both in transit and at rest. Even if someone intercepts it, they shouldn’t be able to read it. Modern encryption standards (AES-256, TLS 1.3) are what regulators and security teams expect.

- Zero-Trust Access: Nobody should have access by default. Not staff, not vendors, not even system admins. Every request to view or use data must be verified and logged.

- Data Minimization: Don’t collect what you don’t need. Limiting the scope of PHI reduces exposure risk and makes compliance easier to maintain.

- Anonymization: Before training or testing models, strip out identifiers. This protects privacy while still giving AI systems the data they need to learn.

- Cross-Border Protections: If you handle data on patients outside the U.S. or move data across borders, other regimes apply on top of HIPAA, such as the EU’s GDPR. Many jurisdictions set stricter requirements, and ignoring them can block projects entirely.
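The “nobody has access by default” idea from the list above can be sketched in a few lines of Python. This shows only the deny-by-default and log-every-request pieces; a full zero-trust setup also verifies identity, device, and context on each request. The role names, permission names, and `is_allowed` helper are hypothetical.

```python
import logging

logger = logging.getLogger("phi_access")

# Hypothetical role-to-permission map; anything not listed is denied.
ROLE_PERMISSIONS = {
    "nurse": {"read_vitals", "read_notes"},
    "scheduler": {"read_appointments"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: grant only if the role explicitly holds the permission."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    # Log every decision, granted or denied, so the request trail is complete.
    logger.info("role=%s permission=%s allowed=%s", role, permission, allowed)
    return allowed
```

Note that a role missing from the map, including an admin role, gets nothing until someone grants it explicitly.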

These practices go beyond avoiding penalties. They reduce risk, reassure clinicians, and give patients confidence that their information is being treated with care. More importantly, they make adoption smoother because people are quicker to embrace tools they trust.

Safe Experimentation and Responsible Innovation

Compliance doesn’t pause just because a project is “in testing.” If anything, pilots are where mistakes are most likely (and where a single misstep can shut everything down). Running AI experiments safely means building with compliance in mind from the start:

- De-Identified Datasets: Pilots should never use raw PHI. Strip identifiers before training or testing models so the system can still learn patterns without risking patient privacy. If you can’t de-identify the data effectively, the pilot isn’t ready.

- Sandbox Environments: Keep test systems isolated from production networks. A sandbox setup means bugs, bad outputs, or early misconfigurations don’t touch live medical records or clinical workflows.

- Documentation: Track what data you used, how it was handled, and who accessed it. Regulators don’t just want to know that you stayed compliant; they want proof. Good documentation also makes it easier to fix problems when they show up.

- Scalable Safeguards: Don’t build compliance measures you’ll have to throw out later. Controls like access logs, encryption, and role-based permissions should be designed so they move with you from pilot to production to protect patient safety.
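As a starting point for the de-identified datasets item above, here’s a minimal Python sketch that drops a few direct identifiers from a flat record and swaps the patient ID for a keyed hash. Treat it as an illustration, not a compliance tool: HIPAA’s Safe Harbor method lists 18 categories of identifiers, and dates and free-text notes need their own handling. The field names and helper functions are hypothetical.

```python
import hashlib
import hmac

# Hypothetical direct-identifier fields in a flat record (not the full HIPAA list).
DIRECT_IDENTIFIERS = {"name", "phone", "email", "address", "ssn"}


def pseudonymize_id(patient_id: str, secret_key: bytes) -> str:
    """Replace a patient ID with a keyed hash so records still link to each other."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and swap the patient ID for a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymize_id(str(record["patient_id"]), secret_key)
    return cleaned
```

The same key always produces the same pseudonym, so a patient’s rows stay linked across tables. That also means whoever holds the key can re-link the data, so the key itself has to be protected like PHI.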

Pilots done this way don’t slow innovation. They protect it. Guardrails let you experiment confidently, knowing you can prove safety and compliance when it’s time to scale.

Cost-Benefit Analysis and ROI

AI in the medical field sounds exciting. But leaders care about one thing: is it worth it?

There isn't a neat price tag. Every system, vendor, and use case is different. What you can do is break costs and benefits into buckets. That’s how you see if the math holds before you burn months in pilots.

Direct Costs

Start with what hits the budget on day one. These are the checks you cut before the AI even sees a patient chart:

- Licenses and Subscriptions: Vendors love the “$50 per user” pitch. Sounds fine until you multiply it by every clinician, nurse, and admin who touches the system. And that’s just base access. Want the features you actually need, like EHR integration or analytics? That’s usually in the “premium” tier. Suddenly the cheap tool isn’t cheap.

- Hardware and Infrastructure: Training your own models means GPUs. Lots of them. Even if you’re smart and start with prebuilt models, you still pay for compute and storage. Cloud services like AWS or Azure feel convenient until the monthly bill shows up because your data pipelines weren’t tuned. On-prem gear avoids the variable bills, but then you’re the one paying for racks, cooling, and maintenance. Pick your poison.

- Implementation Services: Here’s the part everyone underestimates. No AI system drops neatly into Epic or Cerner. Someone has to map the FHIR APIs, clean the data, and adjust workflows. That means engineers, compliance folks, and usually external partners. Skip this, and you’ll have a shiny demo that crashes the second it touches production.
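To see how fast the “$50 per user” pitch adds up, here’s the multiplication as a tiny Python sketch. I’m assuming the price is billed per user per month, which the pitch doesn’t always spell out, and the 400-user headcount is a made-up figure.

```python
def annual_license_cost(users: int, price_per_user_month: float) -> float:
    """Yearly cost of a per-user, per-month subscription."""
    return users * price_per_user_month * 12


# Hypothetical: 400 clinicians, nurses, and admins at $50 per user per month.
yearly = annual_license_cost(400, 50)
# yearly == 240000
```

That’s $240,000 a year before any premium tier, integration work, or infrastructure.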

Direct costs are easy to count. The hard part, and where teams stall, is proving those checks will come back as real value instead of sunk cost.

Indirect Benefits

This is where AI actually pays off. You won’t find these on an invoice, but they’re the levers that matter long-term:

- Improved Clinical Outcomes: Catch sepsis hours earlier. Flag cancers before they spread. Predict readmissions before they happen and lift patient outcomes. That’s better quality of care, sure. But it’s also fewer penalties, shorter stays, and lower costs. I’ve seen a 10% drop in readmissions swing millions back into a hospital’s budget. Health outcomes and margins go hand in hand.

- Operational Efficiency: An AI scribe that chops 25% off charting time isn’t just saving clicks. It’s giving healthcare workers back their evenings. Less burnout means fewer resignations. Every doc who leaves costs six figures to replace, and that’s before you count the disruption. Same goes for predictive scheduling: fewer no-shows, smarter staffing, smoother healthcare delivery. Quiet wins, big savings.

- Competitive Advantage: Healthcare may be mission-driven, but it’s still a market. If your imaging turnaround is same-day and your virtual intake doesn’t make patients want to scream, you stand out. For SMBs, that differentiation matters more than squeezing out another 2% efficiency. It’s how you stay on the map against systems ten times your size.

These indirect gains are what keep projects alive after the first budget shock. Clinicians see the value. Boards see the returns. That’s when AI shifts from “another experiment” to “part of how we work.”

ROI Timeline

Even the best projects don’t pay off overnight. Here’s how the math usually plays out:

- Short-Term (3–6 Months): Admin automation is the quick win. AI scribes, claim coding helpers, and scheduling bots start showing ROI within a quarter. I’ve seen teams cut charting time by 25% almost immediately. Hours saved per shift turn into budget protection fast.

- Mid-Term (6–12 Months): Predictive analytics and scheduling tools need a longer runway. You can’t claim success on week two. Give it months of data. At Cleveland Clinic, a readmission model dropped readmissions by about 10% in under a year. That kind of number makes leadership stop asking “why AI?” and start asking “where else?”

- Long-Term (12–24 Months): Clinical AI is the slow burn. AI models in healthcare can push diagnostic accuracy up 15–25% for specific conditions (cancer, diabetic eye disease, fractures). But proving that value means tracking patient cohorts, not just clean demo slides. The impact is real, but only if you measure and stick with it.

Here’s the pattern I’ve seen over and over: projects that pay back inside a year survive. Projects that stretch to two years without obvious wins get cut. That’s why you start small, prove it, and scale only when the math backs you up.
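A quick way to sanity-check the “pays back inside a year” rule is a simple payback-period calculation. This Python sketch ignores discounting and ramp-up time, and the dollar figures are hypothetical.

```python
from typing import Optional


def payback_months(upfront_cost: float, monthly_net_benefit: float) -> Optional[float]:
    """Months until cumulative net benefit covers the upfront cost.

    Returns None when the monthly net benefit is zero or negative,
    meaning the project never pays back.
    """
    if monthly_net_benefit <= 0:
        return None
    return upfront_cost / monthly_net_benefit


# Hypothetical: $120,000 upfront, $15,000 net benefit per month.
months = payback_months(120_000, 15_000)
# months == 8.0
```

Eight months clears the one-year bar. Run the same numbers with a $5,000 monthly benefit and you get 24 months, which is the zone where projects tend to get cut.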

Once you know the numbers add up, the next step is keeping them that way. That means future-proofing, as in building AI that can flex with new rules, new tools, and the next compliance curveball.

Future-Proofing Your AI Strategy

An AI project that looks sharp today can age out before it ever scales. Future-proofing isn’t about chasing every shiny AI technology or swapping tools every quarter. It’s building flexibility into your stack, making sure your people are ready to use the tools, and keeping compliance as a living part of the plan. That’s how you keep momentum going when the tech (and the rules) inevitably shift.

Build on Flexible Infrastructure

Lock yourself into a rigid vendor or closed system, and you’ll regret it. Models change, APIs evolve, and standards shift. What works today won’t look the same in three years. If your stack can’t bend, it breaks.

Here’s what a flexible setup looks like:

- Modular Design: Don’t weld yourself to one vendor. Architect the system so you can swap models or add new services without ripping everything out. If a better imaging model comes out next year, you want to plug it in, not start from zero.

- Interoperability First: Your health records live in messy systems. The least you can do is speak their language. Standards like FHIR and HL7 aren’t perfect, but they’re the closest thing to a common tongue. Pick tools that can talk across systems, or you’ll spend more time building workarounds than improving care.

- Hybrid Hosting: Sensitive PHI? Keep it on-prem. Heavy compute? Let the cloud handle it. The future won’t be all-cloud or all-local. It’ll be a mix. Avoid vendors that force one path; you need the freedom to shift as costs, compliance, and workloads change.

Think of it like building a house with removable walls. The foundation stays solid, but the rooms can be reconfigured as your needs change. That’s how you avoid a setup that’s obsolete before it’s fully deployed.
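In code, the removable-walls idea usually means the pipeline depends on an interface rather than on a specific vendor. Here’s a minimal Python sketch using `typing.Protocol`. The class names, the `predict` method, and the returned fields are all hypothetical stand-ins, not any real vendor’s API.

```python
from typing import Protocol


class ImagingModel(Protocol):
    """Anything with this method can be plugged into the pipeline."""

    def predict(self, image_bytes: bytes) -> dict: ...


class VendorAModel:
    def predict(self, image_bytes: bytes) -> dict:
        # Placeholder logic standing in for a real vendor call.
        return {"vendor": "A", "finding": "none"}


class VendorBModel:
    def predict(self, image_bytes: bytes) -> dict:
        # A second placeholder with the same method signature.
        return {"vendor": "B", "finding": "none"}


def run_read(model: ImagingModel, image_bytes: bytes) -> dict:
    """The pipeline depends only on the interface, not on a specific vendor."""
    return model.predict(image_bytes)
```

Swapping vendors then means passing `VendorBModel()` instead of `VendorAModel()` to `run_read`; the rest of the pipeline doesn’t change.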

Invest in People, Not Just Tools

The best AI project dies if staff won’t use it. Future-proofing is also about culture. You need clinicians, ops teams, and IT to see AI as part of the job, not another system bolted on top. If it feels like extra clicks, adoption collapses.

Here's how you keep it alive:

- Short, Role-Specific Training: Nurses don’t need a deep dive on data pipelines. Schedulers don’t care about clinical models. Keep training tight and tailored. Ten minutes that shows their workflow gets easier will land harder than a three-hour lecture no one remembers.

- Clear Communication: Don’t roll out with vague promises about “innovation.” Say what it does: less paperwork, safer care, or fewer errors. Translate the tech into outcomes staff actually feel.

- Visible Wins Shared Early: Charting time cut by 25% in the first week? Push that stat out to every inbox and huddle board. Numbers change minds faster than strategy decks.

This is where smaller systems have the edge. You don’t need six committees to greenlight a pilot. You can move faster, test quicker, and pivot based on feedback in weeks instead of quarters.

At Aloa, we’ve seen healthcare AI tools stick when they’re built around people. They fail when they’re forced on them. That’s why we pull clinicians into design from day one. It’s the difference between adoption and abandonment.

Stay Ahead of Compliance

HIPAA is just the starting line. State privacy laws add their own twists. The FDA keeps issuing new guidance for clinical AI and connected medical devices. If you’re working across borders, GDPR and other global rules pile on. A reactive strategy means you’re always scrambling.

Future-proofing here means:

- HIPAA From Day One: Don’t “retrofit” compliance once the model is live. Every data flow, every integration should assume PHI is in play. Build as if an auditor is already watching.

- Privacy-First Practices: Encryption at rest and in transit, strict data minimization, and zero-trust access aren’t “extras.” They’re table stakes. If a breach happens, you want to prove you had the strongest safeguards in place.

- Rolling Compliance Reviews: Don’t wait for the annual audit. Set up quarterly or semi-annual checks to catch drift early. Regulations shift. Models evolve. Continuous reviews keep you ahead instead of patching holes after the fact.

Think of compliance as a moving target. Treat it like a one-time checkbox, and you’ll miss. Build it into your roadmap, and you’ll adapt as the rules shift, without derailing projects midstream.

In our medical transcription tool, compliance shaped the build. We combined speech recognition, large language models (LLMs), and Rx databases to turn hours of transcription into formatted clinical notes in seconds. A workflow that could’ve been a liability became a safe, scalable tool clinicians actually trust.

If you future-proof right, you won’t stall after the pilot. You scale from one ward to the whole hospital. Staff keep using the tool instead of clicking past it. Regulators nod instead of knocking. And the ROI shows up on the balance sheet, not just in a slide deck.

Key Takeaways

Some days in healthcare tech feel less like “innovation” and more like juggling chainsaws. Budgets that squeak, staff who are exhausted, and compliance reviews that crawl. Add AI to that pile and, yeah, it can feel like one more thing. But here’s the flip side: AI in medicine and healthcare can actually take weight off if done correctly.

We’ve seen both sides at Aloa. The late nights trying to nudge a model into behaving. The HIPAA reviews that seemed to last longer than my patience. But we’ve also watched small wins stack up, like doctors finishing notes before dinner or pilots that actually stuck past version one. Those are the stories that keep me curious about what’s next.

So let’s keep this conversation going. Share what you’re wrestling with, drop by the AI Builder Community on Discord, or skim the Byte-Sized AI newsletter. Whatever works for you. The point is, you don’t have to figure this stuff out solo. Together, let’s see how far medical artificial intelligence can go when it’s built to last.

FAQs

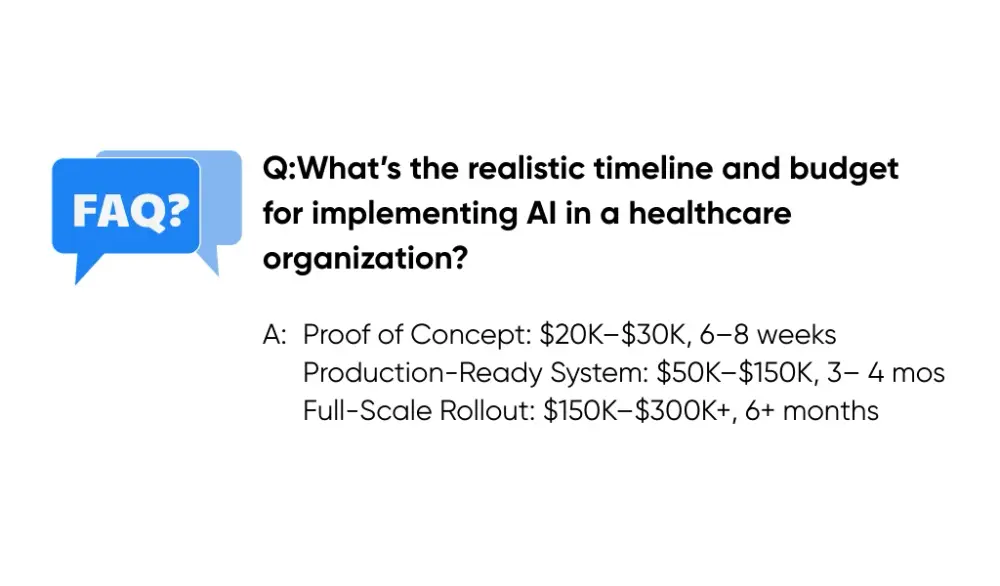

What’s the realistic timeline and budget for implementing AI in a healthcare organization?

It depends on scope, but here’s the ballpark:

- Proof of Concept: $20K–$30K, 6–8 weeks

- Production-Ready System: $50K–$150K, 3–4 months

- Full-Scale Rollout: $150K–$300K+, 6+ months

That’s why smart teams don’t start big. They run a $20K pilot, see if the math holds, then scale. Cheaper to learn early than bleed cash later.

What’s the best way to start an AI project in healthcare?

Don’t start with the tech. Start with the problem. I use a “three-pillar assessment”:

- Readiness: Leadership aligned, sponsor in place.

- Data: EHR clean enough to support AI.

- Workflow: Clinicians can use it without extra clicks.

I’ve seen too many teams chase shiny tools and then scramble for a use case. Flip it. Find the pain point first, then ask if AI is the right fix. And start small: one or two pilots with clear metrics and clinical champions.

How do you navigate HIPAA compliance when implementing AI in medicine?

HIPAA touches everything. So you:

- Strip identifiers or cover them with a rock-solid BAA.

- Lock PHI behind role-based access.

- Log every AI interaction with patient data.

At Aloa, we don’t bolt compliance on later. We build it in from day one. That keeps regulators off your back and projects alive. Want the playbook? Here’s the step-by-step: How to Make Any AI Model Safe Through HIPAA Compliance.