Your hospital can’t exactly hit pause. The ER’s overflowing, clinicians are buried in notes, and the budget… well, it’s not doing you any favors. AI in hospitals sounds like the fix, but you’re caught between moving fast and not crashing the system. You need proof it’ll plug in cleanly, keep data safe, and actually make life easier.

At Aloa, we don’t show up with a generic app and call it a day. We roll up our sleeves and build AI that plays nice with your EHR, stays HIPAA-compliant, and frees up hours your staff would rather spend on patients than paperwork. And when it works (and it does), we help you grow it without blowing the budget or burning out your team.

That’s what this guide is about:

- Where AI is already working in hospitals

- Proof it’s improving care and easing workloads

- How to start small and scale smart

- Trends worth preparing for today

Forget the hype. This is about cleaner hand-offs, lighter shifts, and AI that finally pays its way.

The Current State of AI in Hospital Settings

Hospitals use artificial intelligence to spot patient risks early, flag high-risk outpatients for follow-up, monitor vital signs, suggest treatments, speed up billing, and streamline scheduling. In short, AI in hospitals is helping doctors focus on care delivery while keeping operations moving smoothly.

If you want the long version, we’ve covered it in AI in Healthcare: 3 Ways AI is Transforming Operations in 2025. For now, let’s just hit the highlights:

Clinical Applications

Diagnosis drags, care plans stall under data overload, and notes swallow hours that should go to patients. AI is starting to cut into that grind in three big ways:

- Diagnostic Support: Reviews medical images and labs quickly and flags urgent cases, like a possible stroke or tumor, so they jump to the top of the queue instead of getting lost in routine studies.

- Treatment Planning: Connects labs, imaging, clinical notes, and history to surface likely treatment plans. In oncology, it can flag therapies tied to a tumor’s genetics, which once took weeks for a team to piece together.

- Remote Patient Monitoring: Tracks vitals in real time and flags risks such as sepsis before they spike. Wearables stream updates so nurses don’t get blindsided by sudden drops.

The payoff: faster radiology reads, better diagnostic accuracy, fewer ICU transfers, and clinicians who win back time. At Aloa, we’ve helped build tools that deliver these kinds of wins. You’ll find some of the most eye-opening healthcare applications in AI Applications in Healthcare: 3 AI Benefits in 2025.
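To make the monitoring idea concrete, here’s a rough sketch of how streamed vitals can turn into an alert. Real sepsis models are trained on far richer data; this one swaps in the published qSOFA screening criteria (respiratory rate of 22 or more, systolic blood pressure of 100 mmHg or less, altered mentation) as a transparent stand-in. The `VitalsReading` structure and function names are hypothetical, and treating any Glasgow Coma Scale score below 15 as altered mentation is a simplifying assumption.

```python
from dataclasses import dataclass

@dataclass
class VitalsReading:
    # Hypothetical shape of one streamed reading from a bedside monitor or wearable.
    patient_id: str
    respiratory_rate: int  # breaths per minute
    systolic_bp: int       # mmHg
    gcs: int               # Glasgow Coma Scale (3-15); below 15 treated as altered mentation

def qsofa_score(r: VitalsReading) -> int:
    """Count how many of the three qSOFA criteria a reading meets (0-3)."""
    return sum([
        r.respiratory_rate >= 22,
        r.systolic_bp <= 100,
        r.gcs < 15,
    ])

def qsofa_alert(r: VitalsReading, threshold: int = 2) -> bool:
    """Flag the reading for nurse review when the score reaches the threshold."""
    return qsofa_score(r) >= threshold

stream = [
    VitalsReading("pt-001", respiratory_rate=16, systolic_bp=122, gcs=15),
    VitalsReading("pt-002", respiratory_rate=24, systolic_bp=96, gcs=15),
]
for reading in stream:
    if qsofa_alert(reading):
        print(f"Review {reading.patient_id}: qSOFA {qsofa_score(reading)}")  # only pt-002 prints
```

A transparent rule like this also makes the “why did it flag this patient?” question easy to answer, which matters once clinicians start relying on alerts.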

Administrative Functions

When administrative operations lag behind, AI can help healthcare providers ease that pressure:

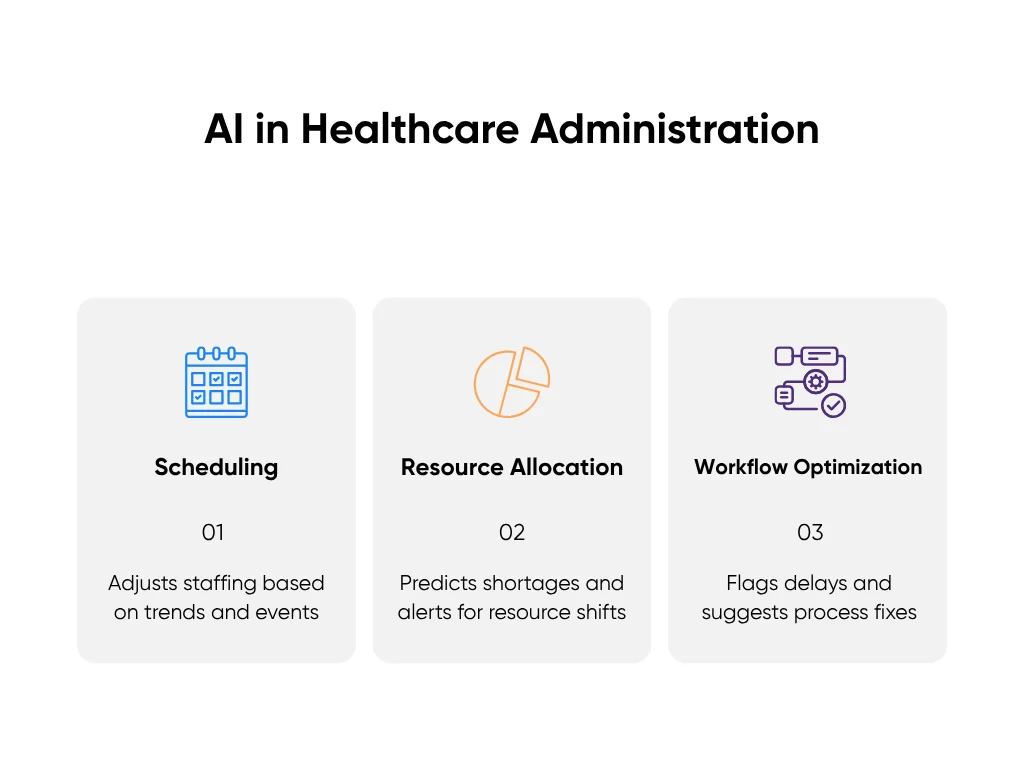

- Scheduling: Looks at admissions history, seasonal spikes, even local events, then adjusts staff rosters before the rush hits so staffing keeps pace with demand.

- Resource Allocation: Tracks real-time occupancy and forecasts shortages of beds, imaging units, or medical devices like ventilators. Managers get alerts to reassign resources before a crisis hits.

- Workflow Optimization: Uses data analysis to time each step (admissions, labs, transfers) and flag bottlenecks. Suggests fixes like staggering discharges to keep patients moving. Intelligent solutions like AI VoiceBots can further reduce administrative load by automating appointment scheduling, patient reminders, and routine inbound calls, freeing staff to focus on high-value care.

Hospitals using these tools are already seeing results: One operating room scheduling system targeted $500,000 in annual savings per operating room, and mid-sized hospitals have cut 5–10% off costs through smarter staffing and less waste.
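Here’s what “time each step and flag bottlenecks” can look like in practice: a minimal sketch, assuming each patient’s journey is logged as step-level start and end timestamps. The event format, step names, and minute targets are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median

# Hypothetical event log: (patient_id, step, start, end) as ISO 8601 timestamps.
EVENTS = [
    ("p1", "admission", "2025-03-01T08:00", "2025-03-01T08:40"),
    ("p1", "labs",      "2025-03-01T08:40", "2025-03-01T10:10"),
    ("p1", "discharge", "2025-03-01T14:00", "2025-03-01T17:30"),
    ("p2", "admission", "2025-03-01T09:00", "2025-03-01T09:25"),
    ("p2", "labs",      "2025-03-01T09:25", "2025-03-01T10:25"),
    ("p2", "discharge", "2025-03-01T15:00", "2025-03-01T19:00"),
]

# Hypothetical per-step targets in minutes, set by the operations team.
TARGETS = {"admission": 45, "labs": 90, "discharge": 120}

def median_minutes_by_step(events):
    """Group events by step and return each step's median duration in minutes."""
    durations = defaultdict(list)
    for _patient, step, start, end in events:
        delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
        durations[step].append(delta.total_seconds() / 60)
    return {step: median(values) for step, values in durations.items()}

def find_bottlenecks(events, targets):
    """Return steps whose median exceeds the target, largest overrun first."""
    medians = median_minutes_by_step(events)
    over = {s: m for s, m in medians.items() if m > targets.get(s, float("inf"))}
    return sorted(over.items(), key=lambda item: item[1] - targets[item[0]], reverse=True)

print(find_bottlenecks(EVENTS, TARGETS))  # [('discharge', 225.0)]
```

It uses the median rather than the mean so one unusually long discharge doesn’t drown out the pattern.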

Implementation Challenges

If AI were truly plug-and-play, we wouldn't be discussing implementation as much. The truth is that adopting new technology is messy. Here are three main headaches that tend to show up:

- Technical Integration: AI must integrate with the systems you already use (EHRs, scheduling, and lab systems). That only works with clean data, and getting clean data is often the very first challenge in hospital systems.

- Staff Training: New AI tools don’t magically slot into a 12-hour hospital shift. Clinicians already juggle patients, charts, and nonstop alerts, so the training required to integrate AI tools into your current workflow needs to be hyper-efficient.

- Regulatory Compliance: Any AI system that touches patient data falls under strict HIPAA rules, with zero room for error.

These aren’t dealbreakers. But they do separate pilots that deliver from projects that flame out. The hospitals that win build integration, training, and compliance into their plan from the start.

Impact on Patient Care Quality and Outcomes

Discuss AI long enough, and the question inevitably arises: Is this actually helping patients, or just giving us prettier dashboards? Good news: the evidence of AI’s impact is getting hard to ignore.

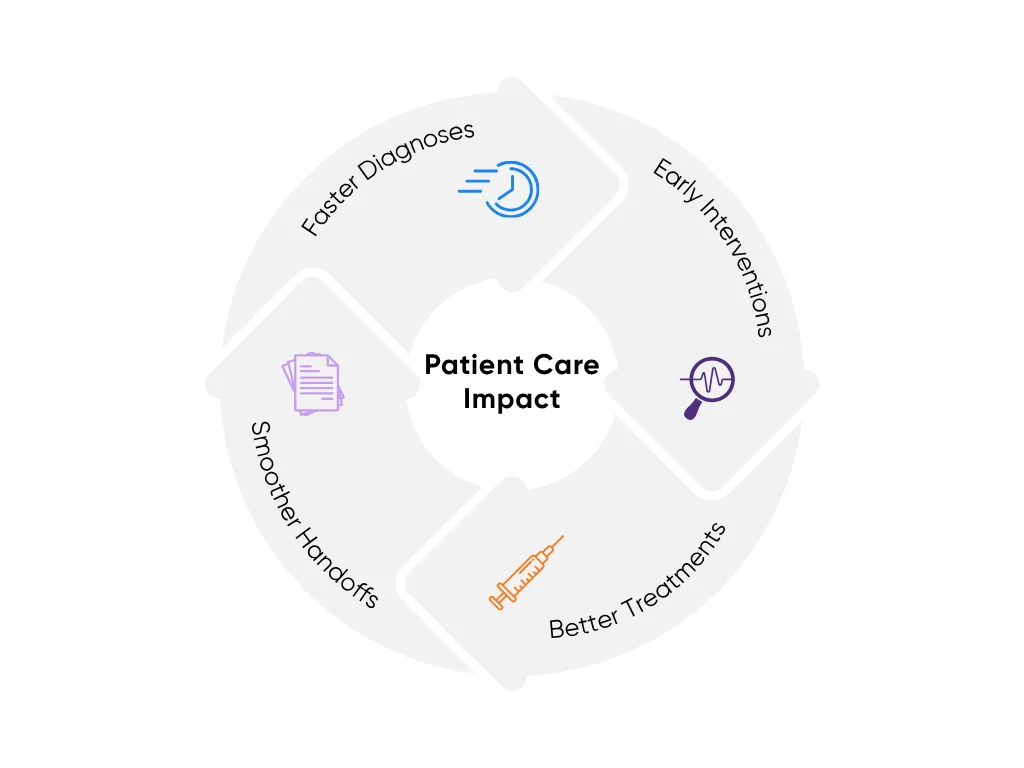

Take UC Davis Health. They used AI to coordinate stroke transfers and cut the time in half.

Or look at P&C Global’s work with a U.S. health system. They built predictive analytics into discharges and saw a 15% drop in 30-day readmissions.

And these aren’t unicorn cases. Hospitals everywhere are starting to see measurable gains, including:

- Faster Diagnoses: AI triage tools speed early detection by pushing critical scans, like pneumonia or intracranial bleeds, to the top of the queue so specialists act sooner.

- Early Interventions: Predictive models aid disease detection, flagging sepsis risk or likely readmissions before they blow up. That means fewer ICU transfers and shorter stays.

- Smarter Treatment Decisions: In oncology, AI pairs therapies to tumor genetics, which cuts down on trial-and-error cycles.

- Smoother Handoffs: Natural language processing (NLP) tools shave hours off documentation, which means fewer errors during transitions. And yes, clinicians actually get home earlier, without shortchanging medical care.

None of this makes AI a silver bullet. It only works when it’s monitored, stress-tested, and built to fit real workflows. The hospitals that implement it correctly are the ones that turn pilots into long-term gains.

Implementation Strategies for Hospital Systems

It takes a plan to turn a pilot into real results. And getting AI to work with your EHR, meet HIPAA requirements, and win over clinicians is where most projects stumble. Here are a few hands-on strategies to help you make it work at your hospital:

Planning and Assessment

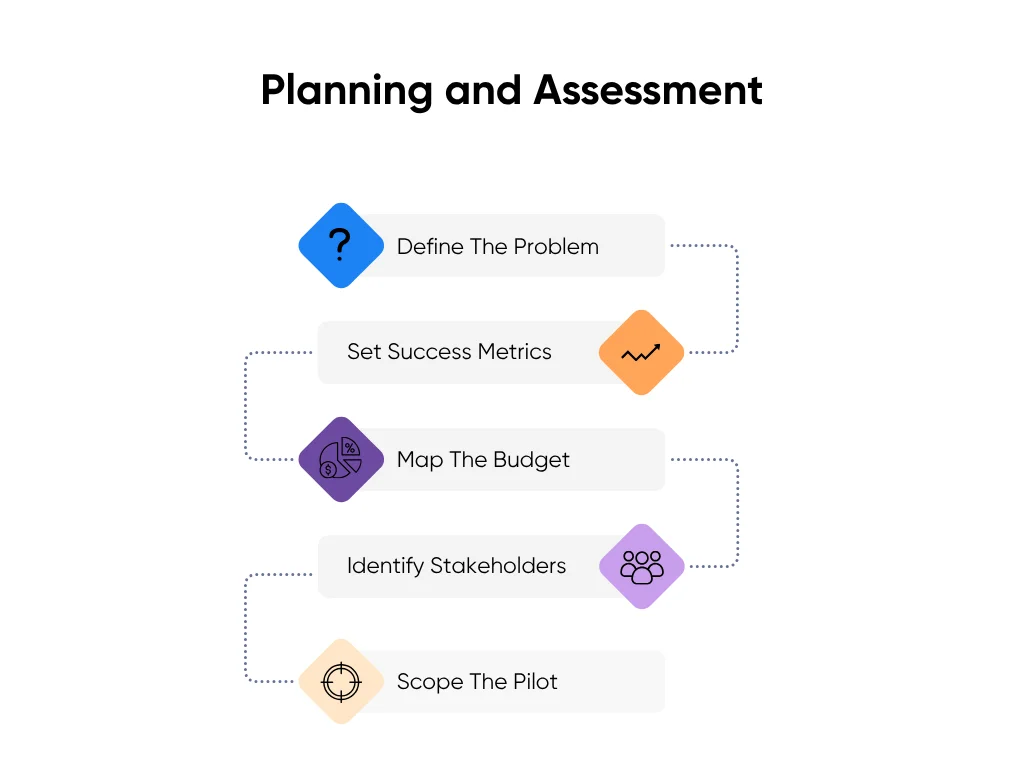

AI doesn’t crash on the tech; it crashes when nobody stopped to ask, “What problem are we actually solving?” The opening moves:

- Define the problem: Start with the pain, not the pitch. Zero in on 2–3 headaches draining time or money, like discharge delays, documentation, or OR scheduling.

- Set success metrics: Hours saved, wait times cut, readmission rates down. Call the shot early so pilots don’t drift.

- Map the budget: Licenses are the cheap part. Integration, training, and support are where the hidden costs live.

- Identify stakeholders: Every project needs a clinical champion, IT backup, and leadership air cover. Without them, even good tools stall.

- Scope the pilot: Keep it small and time-boxed. 90 days is plenty. Quick wins build trust and momentum.
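Calling the shot early means measuring the same way before and after the pilot. As one example, here’s a sketch of a 30-day readmission rate, assuming a simple list of (patient, admit date, discharge date) stays. The data is made up, and official measures (such as CMS’s) add exclusions and risk adjustment this sketch skips.

```python
from datetime import date

Stay = tuple[str, date, date]  # (patient_id, admit_date, discharge_date)

def readmission_rate_30d(stays: list[Stay]) -> float:
    """Share of discharges followed by another admission for the same patient within 30 days."""
    by_patient: dict[str, list[Stay]] = {}
    for stay in stays:
        by_patient.setdefault(stay[0], []).append(stay)

    discharges = 0
    readmissions = 0
    for patient_stays in by_patient.values():
        patient_stays.sort(key=lambda s: s[1])  # chronological by admit date
        for current, following in zip(patient_stays, patient_stays[1:] + [None]):
            discharges += 1
            if following is not None and (following[1] - current[2]).days <= 30:
                readmissions += 1
    return readmissions / discharges if discharges else 0.0

def relative_change(before: float, after: float) -> float:
    """Relative change from baseline, e.g. -0.2 means a 20% drop."""
    return (after - before) / before

# Made-up baseline and pilot periods.
baseline = [
    ("a", date(2025, 1, 1), date(2025, 1, 5)),
    ("a", date(2025, 1, 20), date(2025, 1, 22)),  # back 15 days after discharge
    ("b", date(2025, 1, 3), date(2025, 1, 6)),
    ("c", date(2025, 1, 10), date(2025, 1, 12)),
]
pilot = [
    ("a", date(2025, 2, 1), date(2025, 2, 4)),
    ("a", date(2025, 2, 20), date(2025, 2, 22)),  # back 16 days after discharge
    ("b", date(2025, 2, 2), date(2025, 2, 5)),
    ("c", date(2025, 2, 8), date(2025, 2, 10)),
    ("d", date(2025, 2, 12), date(2025, 2, 14)),
]

before, after = readmission_rate_30d(baseline), readmission_rate_30d(pilot)
print(f"Readmissions: {before:.0%} -> {after:.0%} ({relative_change(before, after):+.0%})")
```

Locking the definition into code before the pilot starts keeps anyone from quietly moving the goalposts at day 90.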

If you want a hand setting up that kind of pilot, Aloa builds healthcare AI projects that start small, prove value fast, and scale only when the results are clear.

Technical Integration

Your AI system has to work with your existing EHR, lab systems, and scheduling platforms. If that connection fails, the pilot may stall and never scale. Focus on three things:

- EHR Compatibility: Don’t buy “we integrate with Epic” on a slide. Make vendors prove it with live data moving both ways. If results don’t show up where staff already work, the tool won’t stick.

- Data Quality: Hospital data is messy (duplicates, gaps, bad formats). Clean one slice, test it, then scale. Feed garbage in, and you’ll get garbage out.

- Security From Day One: HIPAA isn’t a box to check later. Build in role-based access, audit logs, and encryption before anyone logs in.

Get integration right, and AI shows up inside the tools staff already trust. Skip it, and your shiny pilot never makes it past the demo.
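“Clean one slice, test it, then scale” can start with a short script that normalizes formats, drops duplicates, and sets aside rows with gaps before any model sees them. A minimal sketch, assuming a slice of patient rows with hypothetical fields (`mrn`, `dob`, `sex`); the list of date formats is also an assumption about what your source systems emit.

```python
from datetime import datetime

# Date formats this sketch assumes show up across source systems.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d-%b-%Y")

def normalize_date(raw):
    """Return an ISO date string, or None if the value is missing or unparseable."""
    if not raw:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def clean_slice(rows):
    """Normalize fields, drop duplicates, and collect rows with gaps for human review."""
    seen = set()
    cleaned, needs_review = [], []
    for row in rows:
        record = {
            "mrn": (row.get("mrn") or "").strip().upper(),
            "dob": normalize_date(row.get("dob")),
            "sex": (row.get("sex") or "").strip().upper()[:1] or None,
        }
        key = (record["mrn"], record["dob"], record["sex"])
        if key in seen:
            continue  # duplicate once formats are normalized
        seen.add(key)
        if not record["mrn"] or record["dob"] is None:
            needs_review.append(record)
        else:
            cleaned.append(record)
    return cleaned, needs_review

rows = [
    {"mrn": "a100 ", "dob": "1980-03-14", "sex": "female"},
    {"mrn": "A100", "dob": "03/14/1980", "sex": "F"},  # same patient, different formats
    {"mrn": "B200", "dob": "", "sex": "M"},            # gap: missing date of birth
]
cleaned, needs_review = clean_slice(rows)
print(len(cleaned), len(needs_review))  # 1 1
```

Rows with gaps go to a review pile instead of being silently dropped, so someone can fix the source rather than the symptom.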

Staff Training and Adoption

Getting staff to use AI is the hardest part. Make it fast, practical, and part of daily routines. Here’s how:

- Keep it short and specific: Ten minutes on how a sepsis alert shows up in a chart beats three hours on machine learning theory.

- Show wins early: “Charting time down 25% this week” spreads faster in hallways than in memos. Visible gains flip skeptics.

- Make the AI transparent: If no one knows why it flagged a patient, trust evaporates. Context builds confidence.

- Treat resistance as feedback: Pushback usually means the workflow doesn’t fit. Side-by-side pilots let staff see the value without being forced.

- Play to your size: Regional and mid-sized hospitals can out-iterate the big guys. Shorter loops, faster fixes, smoother adoption.

At Aloa, we build training the same way we build pilots: lightweight, practical, and focused on quick wins. That’s how staff move past “checking the box” to actually using AI as part of their day. And if you want the extended cut on rolling out AI without the buzzwords, it’s in AI in Medicine, Without the Fluff: A Guide for Decision-Makers.

Regulatory Compliance and Ethical Considerations

An AI that reads scans faster or drafts notes in seconds still fails if healthcare professionals and patients don’t trust it with their clinical data and health information. Weak compliance drains that trust fast, which is why guardrails and ethics are what keep projects alive.

Meeting HIPAA Requirements

HIPAA fine print isn’t anyone’s idea of fun. That’s why we broke it down in plain English in How to Make Any AI Model Safe through HIPAA Compliance. And yes, there’s a video if you’d rather watch than scroll.

Four things matter most here:

- PHI Handling: De-identify patient data, or make sure any identifiable PHI is covered by the right agreements. Training on raw charts without this step is a compliance time bomb.

- Business Associate Agreements (BAAs): If a vendor touches PHI at any point, a BAA has to be signed (no exceptions). That’s what makes them legally accountable.

- Breach Notifications: HIPAA requires notifying affected patients without unreasonable delay, and no later than 60 days after a breach is discovered. AI doesn’t change that obligation; it just makes the blast radius bigger.

- Audit Trails: Logs of who accessed medical records, when, and how are non-negotiable. Without them, you can’t prove compliance even if you did everything right.
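Role-based access and audit trails fit together naturally: every access attempt, allowed or denied, gets a log entry. Here’s a minimal in-memory sketch. The roles, permissions, and `AuditLog` class are hypothetical, and a real deployment would write to append-only, tamper-evident storage rather than a Python list.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission map; a real system would load this from policy config.
ROLE_PERMISSIONS = {
    "nurse": {"read_vitals", "read_notes"},
    "physician": {"read_vitals", "read_notes", "write_orders"},
    "billing": {"read_claims"},
}

@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user, role, action, patient_id, allowed):
        # Who, when, what, on which record, and whether it was allowed.
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "patient_id": patient_id,
            "allowed": allowed,
        })

def access(log, user, role, action, patient_id):
    """Default deny: unknown roles or actions are refused, and every attempt is logged."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, action, patient_id, allowed)
    return allowed

log = AuditLog()
access(log, "jdoe", "nurse", "read_vitals", "pt-001")   # allowed
access(log, "jdoe", "nurse", "write_orders", "pt-001")  # denied, but still logged
print(json.dumps(log.entries, indent=2))
```

Logging denials matters as much as logging successes: failed attempts are often exactly what an auditor or investigator asks for first.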

Protecting Patient Privacy

HIPAA compliance is the floor, not the ceiling. These best practices keep patient data protected in any hospital AI implementation:

- Encryption Everywhere: Use AES-256 for data at rest and TLS 1.3 for data in transit, so intercepted data is unreadable.

- Zero-Trust Access: Nobody (staff, vendors, even admins) gets in by default. Every attempt is verified and logged.

- Data Minimization: Collect only what’s essential. Less data means less exposure when something goes wrong.

- Anonymization in Model Training: Remove identifiers from health records before using them to train or tune models. The AI learns patterns without ever seeing names or other identifying details.
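Here’s a rough sketch of the anonymization and data-minimization steps above, assuming records arrive as simple dictionaries with hypothetical field names. It keeps only allow-listed fields, swaps the MRN for a random surrogate key (so one patient’s records still link up), keeps just the year of the admission date, and groups ages over 89. That covers only a few of the 18 identifier types in HIPAA’s Safe Harbor method, and free-text notes need their own scrubbing, so treat it as an illustration, not a compliance tool.

```python
import secrets

# Data minimization: the only fields the model is allowed to see (hypothetical names).
ALLOWED_FIELDS = {"age", "diagnosis_codes", "lab_results"}

class Pseudonymizer:
    """Maps each MRN to a random surrogate key.

    The lookup table stays with the hospital and never travels with the training data;
    because the keys are random, they can't be reversed from the data itself.
    """

    def __init__(self) -> None:
        self._keys: dict[str, str] = {}

    def key_for(self, mrn: str) -> str:
        if mrn not in self._keys:
            self._keys[mrn] = secrets.token_hex(8)  # 16 hex characters
        return self._keys[mrn]

def deidentify(record: dict, pseudonymizer: Pseudonymizer) -> dict:
    # Keep only allow-listed fields; names, addresses, and the MRN are dropped here.
    out = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    out["patient_key"] = pseudonymizer.key_for(record["mrn"])
    # Keep only the year of the admission date (ISO format assumed).
    out["admit_year"] = record["admit_date"][:4]
    # Safe Harbor groups ages over 89 into a single "90 or older" category.
    if isinstance(out.get("age"), int) and out["age"] > 89:
        out["age"] = "90+"
    return out

pseudo = Pseudonymizer()
raw = {
    "mrn": "A100",
    "name": "Jane Doe",
    "address": "12 Elm St",
    "age": 93,
    "admit_date": "2025-03-01",
    "diagnosis_codes": ["A41.9"],
    "lab_results": {"lactate": 3.1},
}
print(sorted(deidentify(raw, pseudo)))
# ['admit_year', 'age', 'diagnosis_codes', 'lab_results', 'patient_key']
```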

Running Safe AI Pilots

Apply these practices to your pilots to keep data protected:

- Synthetic or Test-Ready Data: Instead of dipping into live charts, pilots should run on de-identified or synthetic datasets. Safer for patients, cleaner for testing.

- Sandboxes: Keep pilots off production systems. If something breaks, it stays contained, reducing the risk of adverse events.

- Documentation: Track what data was used, who accessed it, and how. Good records fix problems fast and keep auditors calm.

- Scalable Safeguards: Encryption, access logs, and role-based permissions baked in from day one, not bolted on later.
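For sandbox testing, synthetic records can mimic the shape of real data without containing a single patient. Here’s a minimal sketch using Python’s `random` module with a fixed seed so test runs are reproducible. The value ranges are illustrative assumptions, not clinical reference ranges, and dedicated synthetic-data tools also model correlations between fields, which this ignores.

```python
import random
from dataclasses import dataclass

@dataclass
class SyntheticVitals:
    patient_id: str
    heart_rate: int
    respiratory_rate: int
    systolic_bp: int
    temp_c: float

def generate_vitals(n: int, seed: int = 42) -> list[SyntheticVitals]:
    """Generate n fake readings; the SYN- prefix keeps them from being mistaken for real MRNs."""
    rng = random.Random(seed)  # seeded, so every test run sees the same data
    rows = []
    for i in range(n):
        rows.append(SyntheticVitals(
            patient_id=f"SYN-{i:05d}",
            heart_rate=rng.randint(55, 130),
            respiratory_rate=rng.randint(10, 30),
            systolic_bp=rng.randint(85, 170),
            temp_c=round(rng.uniform(35.5, 39.5), 1),
        ))
    return rows

for row in generate_vitals(3):
    print(row)
```

Clearly labeled synthetic IDs also make it easy to prove, during an audit, that no real chart ever entered the sandbox.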

Future Trends and Emerging Applications

The future of AI in healthcare and digital health is happening before our eyes. Here are a few specific ways in which AI is expected to make a massive difference in the next two to three years:

Generative & Agentic AI in Workflows

Generative AI isn’t just cranking out patient letters anymore. The next wave is agentic AI: systems that don’t just suggest, they act. Intake forms that pre-fill. Charts that land pre-summarized before rounds. Chat agents that check in with patients and ping a nurse when something looks off.

By late 2025, most hospitals will have at least one of these running in the background. By 2026, expect them to start coordinating across departments like they’ve always been part of the team.

The trick: start small. Keep it in “suggestion mode,” lock down guardrails, and watch it closely. Skip that, and you’ll be explaining why the AI just ordered three sets of labs no one needed.
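“Suggestion mode” can be enforced in code, not just in policy: the agent proposes, and nothing executes until a named clinician signs off. Here’s a minimal sketch. The `ProposedAction` type, the action names, and the allow-list are hypothetical; the duplicate check is one simple guard against the three-sets-of-labs problem.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allow-list: the only action types the agent may even propose.
PROPOSABLE_ACTIONS = {"prefill_intake_form", "summarize_chart", "notify_nurse", "order_labs"}

@dataclass
class ProposedAction:
    action: str
    patient_id: str
    rationale: str
    approved_by: Optional[str] = None

class SuggestionQueue:
    def __init__(self) -> None:
        self.pending: list[ProposedAction] = []
        self.executed: list[ProposedAction] = []

    def propose(self, proposal: ProposedAction) -> bool:
        """Queue a proposal for review; reject unknown actions and duplicates."""
        if proposal.action not in PROPOSABLE_ACTIONS:
            return False
        if any(p.action == proposal.action and p.patient_id == proposal.patient_id
               for p in self.pending):
            return False  # same action for the same patient is already waiting
        self.pending.append(proposal)
        return True

    def approve(self, index: int, clinician: str) -> ProposedAction:
        """A clinician signs off; only then does the action move to executed."""
        proposal = self.pending.pop(index)
        proposal.approved_by = clinician
        self.executed.append(proposal)  # placeholder for the real downstream call
        return proposal

queue = SuggestionQueue()
queue.propose(ProposedAction("order_labs", "pt-001", "Lactate trending up"))
queue.propose(ProposedAction("order_labs", "pt-001", "Lactate trending up"))      # duplicate
queue.propose(ProposedAction("discharge_patient", "pt-002", "Vitals stable"))     # not allowed
print(len(queue.pending), len(queue.executed))  # 1 0
```

Moving from suggestion mode to limited autonomy later becomes a deliberate change to the allow-list, not a silent drift.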

Embedded AI in EHRs & Conversational Interfaces

Clicking through ten screens to find test results in your electronic health systems? That’s on the way out. Oracle already launched an AI-driven EHR where you can ask in natural language, “Show me the last three MRIs and kidney function trend.” By 2026, conversational EHRs that surface relevant medical information will be standard across the healthcare industry.

Prep is less glamorous: clean up your data pipelines and be ready for when the voice assistant mishears you. (It will. Probably during rounds.)

Reinforcement Learning & Decision Intelligence

Prediction models are yesterday’s news. The next step is decision support that learns from clinician feedback. Think ICU models suggesting ventilator settings, then improving as staff tweak them. Or chronic-care tools adjusting meds based on live vitals. Pilots and clinical trials will pop up by 2026, with wider use by 2027–28.

The risk: if you train it on sloppy habits, it’ll copy them. Keep humans in the loop and install clear off-switches.

Operational Agents & Robotics

Not everything is charts and scans. Robots are already hauling meds and samples. Next up: AI agents managing lab schedules, predicting supply shortages, even wheeling patients around. By 2026, bots and scheduling agents will be a normal sight in bigger hospitals.

Start with low-risk tasks: linens, meds, supplies. And yes, give them collision detection and human overrides, unless you’re fine explaining a hallway pileup to the local paper.

Key Takeaways

The best way to implement AI in a hospital setting is to start small, prove that it works, and then scale:

- Pick one headache: Documentation, discharges, or OR scheduling.

- Set the win: Hours saved, wait times cut, readmissions down.

- Pilot tight: 90 days, narrow scope, clear owners.

- Build guardrails: HIPAA, clean data, staff training.

Do that, and AI pays its way and helps deliver more effective care.

If you’re experimenting with AI in hospitals or just figuring out where to start, give us a call. For quick updates, sign up for our Byte-Sized AI Newsletter.

FAQs About AI Use in Hospitals

What’s the typical ROI timeline for AI in hospitals?

For healthcare organizations, quick wins come fast if you pick the right spots. Automating notes or unclogging patient flow can show returns in under a year. Clinical tools like sepsis alerts or radiology triage usually prove themselves in 12–24 months. The big, system-wide rollouts? Expect 2–3 years. It’s less “instant jackpot,” more “steady compound interest.”

What’s the best way for hospitals to start with AI?

Don’t chase shiny tech; start with your headaches. Pick 2–3 high-impact problems, line up a clinical champion, and define what “good” looks like (hours saved, wait times cut, readmissions down). Run a 90-day pilot, show it works, then scale. Try to transform everything at once and you’ll just burn out your team.

How do hospitals keep AI compliant and protect patient privacy?

Same rules, higher stakes. Encrypt everything (in transit and at rest), lock down access by role, and keep airtight audit trails. Vendors need BAAs, training data needs de-identification, and sensitive workloads often live in private cloud or on-prem. Add regular security drills and staff refreshers so privacy isn’t just a policy; it’s muscle memory.