From personal assistant AIs that can automate your routine to knowledgeable chatbots, there are AIs for just about anything nowadays. You might be thinking: “That's pretty cool. I want to build my own”. The good news is you can, even without a huge budget. The catch is that the resources meant to get you started often give conflicting advice, read like overly technical manuals, and involve complex tools.

At Aloa, we've helped hundreds of clients navigate the AI development process, from initial concept to production deployment. Our experience working with both technical teams and complete beginners has taught us that the key to successful AI development isn't just technical knowledge—it's having the right roadmap, understanding common pitfalls, and knowing when to seek expert guidance.

This guide cuts through the confusion by providing a clear, step-by-step roadmap specifically designed to teach beginners how to make an AI. We'll cover:

- Choosing the right tools

- Gathering data

- Training your first model

- Deploying it in the real world

Let's take the next step together and turn your AI vision into reality.

Defining the Problem and Setting AI Project Goals

Before we address how to make an AI, what does it actually mean to make an AI? It's not about building robots or complex algorithms from scratch. Making an AI means creating a system that can learn patterns from your data and make decisions that solve real business problems.

Why bother? Because manual processes are expensive. There are hidden costs associated with the errors, delays, and opportunity costs of having skilled workers perform repetitive tasks instead of strategic work.

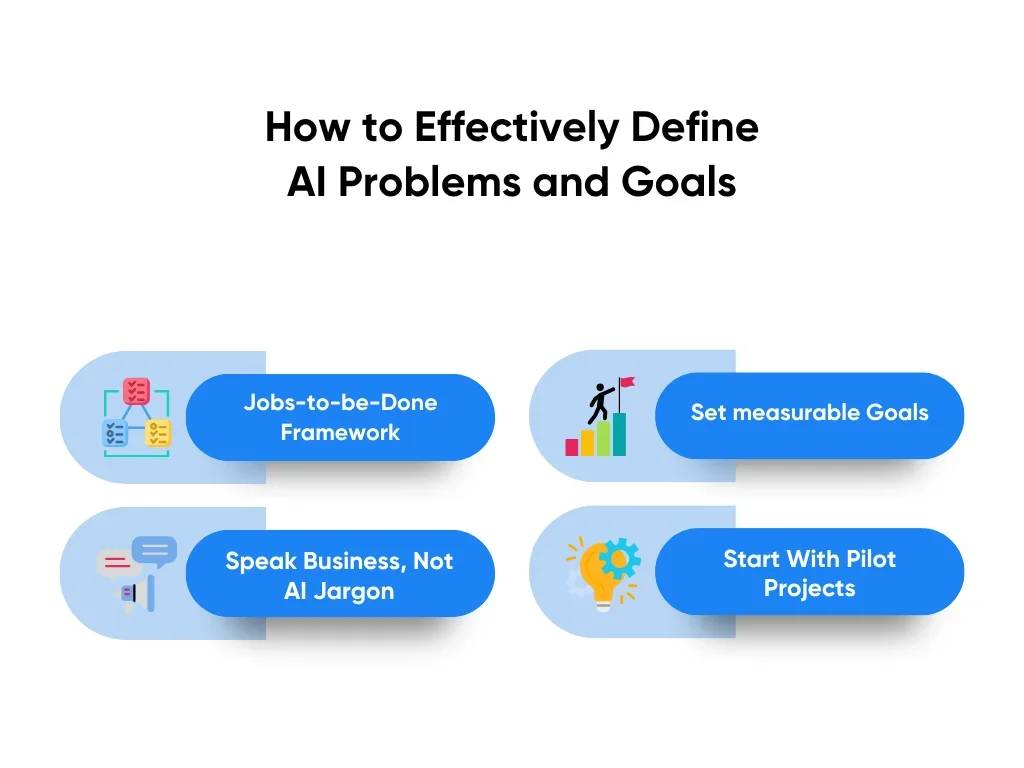

Learning how to make an AI starts with knowing exactly what problem you're solving (bear with me, this isn’t fun, but necessary). Start by defining the problem and setting your goals accordingly. You can:

- Set measurable goals: Start with business output and work backward. If you can't define how success looks in revenue, cost savings, or customer metrics, you're not ready to build. Establish 3-5 core KPIs tied directly to business objectives, with phased milestones allowing for course correction.

- Get specific about your pain point: Don't say "we need to automate our process." Say "our sales team spends 4 hours weekly answering the same 15 questions from marketing about lead quality, pipeline status, and conversion rates. Our marketing spends 2 hours weekly chasing down those answers. That's nearly $4,000 monthly in lost productivity."

- Check if AI is even the right tool: Sometimes the answer is better human training, not artificial intelligence. If your problem stems from unclear policies or inconsistent leadership decisions, trying to implement AI solutions would likely exacerbate those underlying issues.

- Start with pilot projects: Start small. Pick one specific process that's currently manual, repetitive, and costs you time or money. Choose pilots that can demonstrate value within 90 days, have clear success metrics, and solve a painful business problem that affects multiple stakeholders.
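A pain-point estimate like the one in the list above is just arithmetic, and writing it down makes the assumptions visible. The sketch below is illustrative only: the function name, headcounts, and hourly rates are hypothetical inputs, not figures from a real team.

```python
WEEKS_PER_MONTH = 52 / 12  # average number of weeks in a month


def monthly_cost_of_manual_work(hours_per_week: float, people: int, hourly_rate: float) -> float:
    """Estimate what a recurring manual task costs per month."""
    return hours_per_week * people * hourly_rate * WEEKS_PER_MONTH


# Hypothetical inputs: swap in your own team sizes and loaded hourly rates.
sales_cost = monthly_cost_of_manual_work(hours_per_week=4, people=3, hourly_rate=60)
marketing_cost = monthly_cost_of_manual_work(hours_per_week=2, people=2, hourly_rate=50)
total_cost = sales_cost + marketing_cost

print(f"Estimated monthly cost of lost productivity: ${total_cost:,.0f}")
```

If the number that comes out is small, that is a useful signal too: the process may not be worth automating yet.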

Building the Right AI Team & Required Roles

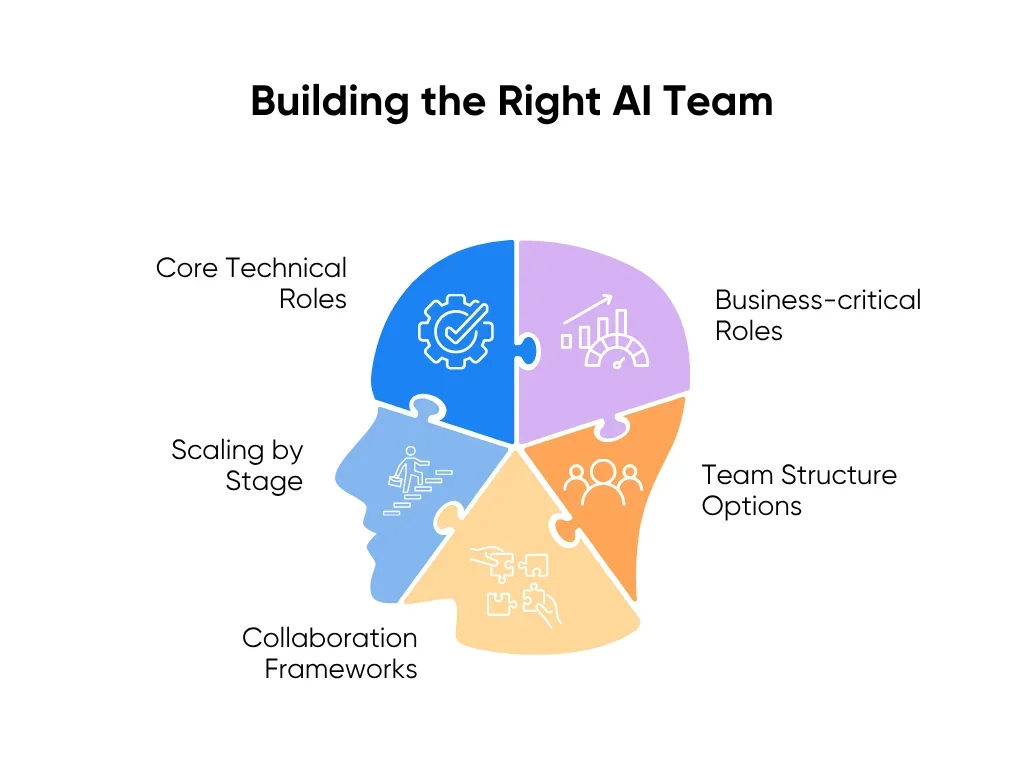

The composition of your AI team determines project success more than any technology choice. Here's how to build a team that delivers results, not just models:

Core technical roles

These positions form the foundation of any AI team:

- Data Scientists ($140K-$200K): Develop models and derive insights, requiring deep statistics knowledge and business acumen.

- Machine Learning Engineers ($160K-$250K): Deploy models to production, demanding software engineering excellence and cloud platform expertise.

- Data Engineers ($130K-$180K): Build the pipelines feeding everything, often the most overlooked but critical role.

- MLOps Engineers ($180K-$220K): Automate workflows and monitor performance, bridging development and operations.

- Product Owner ($115K-$150K): Bridges business requirements and technical implementation, defining project scope, prioritizing features, and ensuring AI solutions deliver measurable business value.

Business-critical roles

Often overlooked but equally vital positions include:

- AI Product Manager: Translates business needs to technical requirements and maintains stakeholder alignment.

- Domain Experts: Validate that solutions actually work in practice and catch industry-specific edge cases.

- Change Management Lead: Ensures user adoption and cultural transformation.

- Ethics Officer: Monitors for bias and ensures responsible AI deployment.

Team structure options

Choose the model that fits your organization:

- In-house teams: Maximum control and IP protection but requires $500K+ annual investment for minimal viable team.

- Outsourced teams: 30-50% cost reduction with access to specialized expertise but less direct control.

- Hybrid approach: Maintain 2-3 core in-house roles (Product Manager, Lead Engineer) while outsourcing specialized skills.

- Embedded model: AI experts work within business units rather than centralized teams.

The hybrid approach essentially delivers the best of both worlds, giving you strategic control without the 6-12 month hiring process and $500K+ annual costs. Aloa's AI development services provide exactly that, with pre-vetted ML engineers, data scientists, and domain experts who scale with your project needs.

Choosing the Right Platform, Tools, and Infrastructure

There are a lot of AI platforms, frameworks, and services to choose from. The good news is that you don't have to be a tech expert to make educated choices, especially with AI’s help. The bad news is that there are legitimately so many AI tools out there that choice paralysis is real. Exploring AI Certification Courses can provide a clearer understanding of these tools and how to navigate them effectively.

Here's where to start:

When to Build vs. Buy Your AI Solution

This is the biggest decision you'll make, and most companies get it wrong by defaulting to "let's build something custom."

Custom development makes sense when your competitive advantage depends on doing something genuinely different from what’s currently on the market, and existing solutions can't handle your specific data or industry requirements.

Custom AI projects typically cost $150,000-$500,000 right out of the gate, and you’ll need to budget a 20-30% annual buffer for ongoing maintenance. By comparison, a $200/month tool that solves 80% of your problem today is far better than a $200,000 custom solution that might work in 18 months.

For the vast majority of us: buy first, build later.
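To see how lopsided the comparison can be, here is a minimal cost sketch. It assumes a $200,000 build with maintenance at 20% of the build cost per year (the low end of the buffer mentioned above) and a $200/month off-the-shelf tool. The function names and the 36-month horizon are illustrative assumptions.

```python
def off_the_shelf_cost(monthly_fee: float, months: int) -> float:
    """Cumulative subscription cost of a bought tool."""
    return monthly_fee * months


def custom_build_cost(upfront: float, annual_maintenance_rate: float, months: int) -> float:
    """Upfront build cost plus maintenance accrued pro rata over the period."""
    return upfront + upfront * annual_maintenance_rate * (months / 12)


months = 36  # assumed planning horizon
buy = off_the_shelf_cost(monthly_fee=200, months=months)
build = custom_build_cost(upfront=200_000, annual_maintenance_rate=0.20, months=months)

print(f"Buy:   ${buy:,.0f} over {months} months")
print(f"Build: ${build:,.0f} over {months} months")
```

The sketch ignores the value of the extra 20% of the problem a custom build might solve, which is exactly the number you need to estimate before choosing to build.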

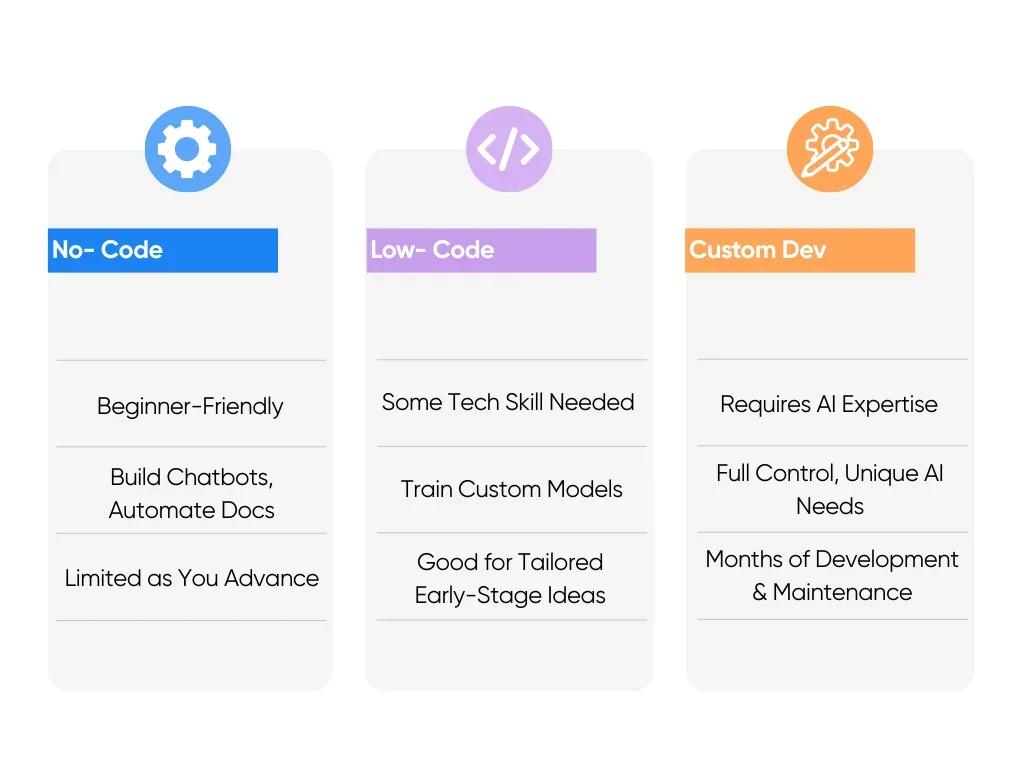

No-Code vs. Low-Code vs. Custom Development

Say you’re ready to move forward with your first AI solution. Where do you start? There are three paths forward.

No-code AI platforms are like buying your car off the lot. ChatGPT's custom GPTs, Microsoft Copilot Studio, or Microsoft's AI Builder let you build chatbots, automate document processing, or create predictive models using simple interfaces. This is a great entry point for beginners. The only downside is that once you’ve become a pro, you’ll discover limits imposed by the platform.

Low-code platforms are like further modifying a car that you bought off the lot. You’ll get more control, but it does require some technical knowledge. Tools like Microsoft's AI Builder, Google's AutoML, or AWS SageMaker Canvas let you train custom models on your data without deep technical expertise. Good for companies that need AI tailored to their specific industry and want to test out the early stages of a more complex idea.

Custom development is almost like building the car from scratch. You get exactly the AI behavior you want, but it requires AI expertise, months of development, and significant ongoing maintenance. Only choose this path if your AI needs are truly unique or provide major competitive advantage.

Here's the surprising part: even custom AI development is dramatically faster than traditional software development. What used to take 1-2 years to build from scratch can now be done in 3-6 months using modern AI frameworks and pre-trained models.

Custom development is still relatively more complex and expensive, but we're talking months, not years.

Technical Infrastructure and Security Considerations

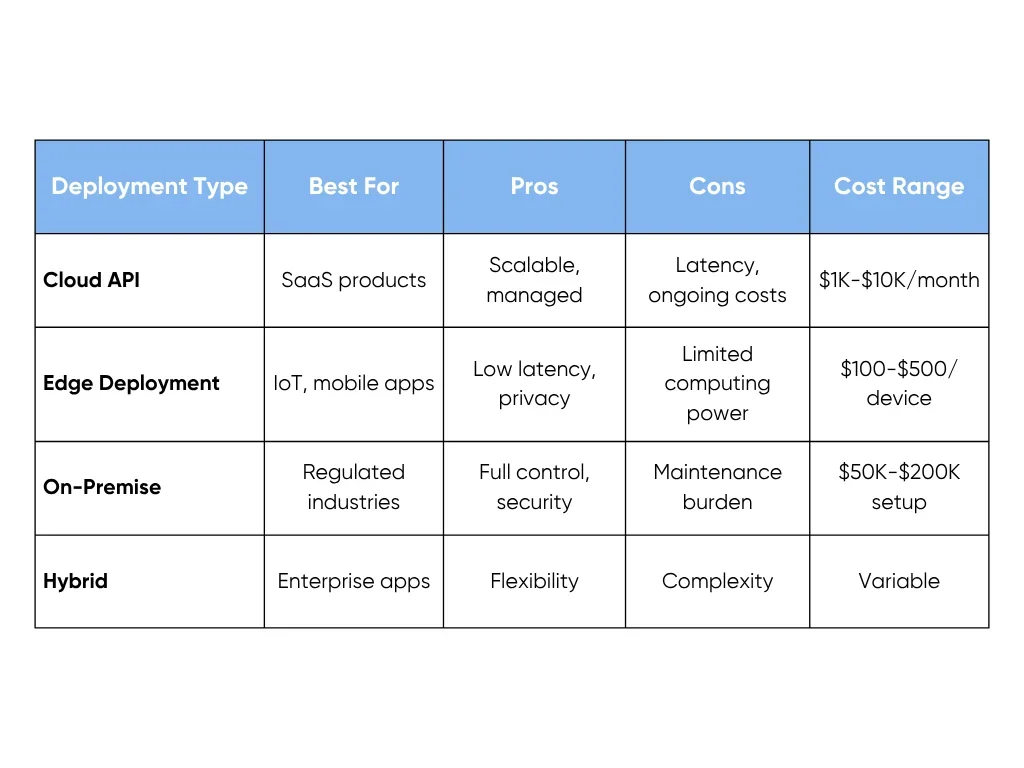

If you're going the custom development route or have technical team members handling implementation, here are the key infrastructure decisions you'll face:

Choose frameworks that align with your team's expertise and project needs. For specific use cases, consider specialized frameworks like Hugging Face for natural language processing or scikit-learn for traditional machine learning algorithms:

- TensorFlow: Production deployment champion with extensive ecosystem support but significant learning curve

- PyTorch: Dominates research and prototyping with intuitive debugging but fewer deployment options

- Scikit-learn: Unbeatable for traditional ML on smaller datasets with excellent documentation

Security requirements vary significantly depending on your industry and data sensitivity. Healthcare, finance, and government applications require strict data protection and regulatory compliance. And as agentic analytics (where AI tools independently explore, interpret, and act on data) becomes more widespread, ensuring secure, governed access to data is more critical than ever. Regardless of domain, make sure to implement comprehensive protection in these areas from day one:

- Data protection: Encryption at rest (AES-256) and in transit (TLS 1.3)

- Access control: Role-based permissions with principle of least privilege

- Compliance framework: GDPR, HIPAA, SOC 2 certifications as needed

- Audit systems: Comprehensive logging of all model queries and data access
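The access-control and audit items above can be sketched in a few lines. This is a minimal illustration, not a production authorization system: the role names, the `Permission` values, and the `ai_audit` logger name are all hypothetical.

```python
import logging
from enum import Enum


class Permission(Enum):
    QUERY_MODEL = "query_model"
    READ_TRAINING_DATA = "read_training_data"
    UPDATE_MODEL = "update_model"


# Least privilege: each (hypothetical) role gets only what it needs.
ROLE_PERMISSIONS = {
    "analyst": {Permission.QUERY_MODEL},
    "data_engineer": {Permission.QUERY_MODEL, Permission.READ_TRAINING_DATA},
    "ml_engineer": {
        Permission.QUERY_MODEL,
        Permission.READ_TRAINING_DATA,
        Permission.UPDATE_MODEL,
    },
}

audit_log = logging.getLogger("ai_audit")


def is_allowed(role: str, permission: Permission) -> bool:
    """Check a role against the permission table and log the decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    # Every decision is recorded, including denials and unknown roles.
    audit_log.info("role=%s permission=%s allowed=%s", role, permission.value, allowed)
    return allowed
```

Unknown roles fall through to an empty permission set, so the default is to deny.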

AI Project Cost Optimization Strategies

Cost management in AI projects requires careful planning and monitoring. Cloud AI services often charge based on usage, which can lead to unexpected costs if not properly managed. Consider these strategies to keep AI affordable at scale:

- Infrastructure tactics: Use spot instances for training (up to 90% savings), implement auto-scaling

- Data management: Archive old training data to cheaper storage tiers, implement data lifecycle policies

- Model efficiency: Compress models for deployment, use quantization for edge devices

- Vendor negotiations: Commit to annual contracts for 20-40% discounts, negotiate custom pricing at scale
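To give a feel for what "quantization" in the list above means, the sketch below stores weights as 8-bit integers plus one scale factor instead of full floating-point values. It is a simplified, symmetric per-tensor scheme written in plain Python for illustration. Real frameworks ship their own, more sophisticated quantization tools, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus a shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]  # toy example values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Worst-case rounding error is about half of one quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, f"max error = {max_error:.5f}")
```

The trade is a small, bounded loss of precision in exchange for a model that is roughly a quarter of the size of a 32-bit version.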

Step-by-Step Process: How to Make an AI

Building AI involves systematically transforming business problems into intelligent solutions. Here's how you can do that, from initial concept to deployment:

Step 1: Understand the Basics of AI and Its Branches

Understanding AI fundamentals helps you communicate effectively with stakeholders, set realistic expectations, and choose the right approach for your specific use case. This knowledge prevents costly pivots mid-project and ensures alignment between technical capabilities and business objectives. Before setting out to build your AI, make sure to master these core concepts:

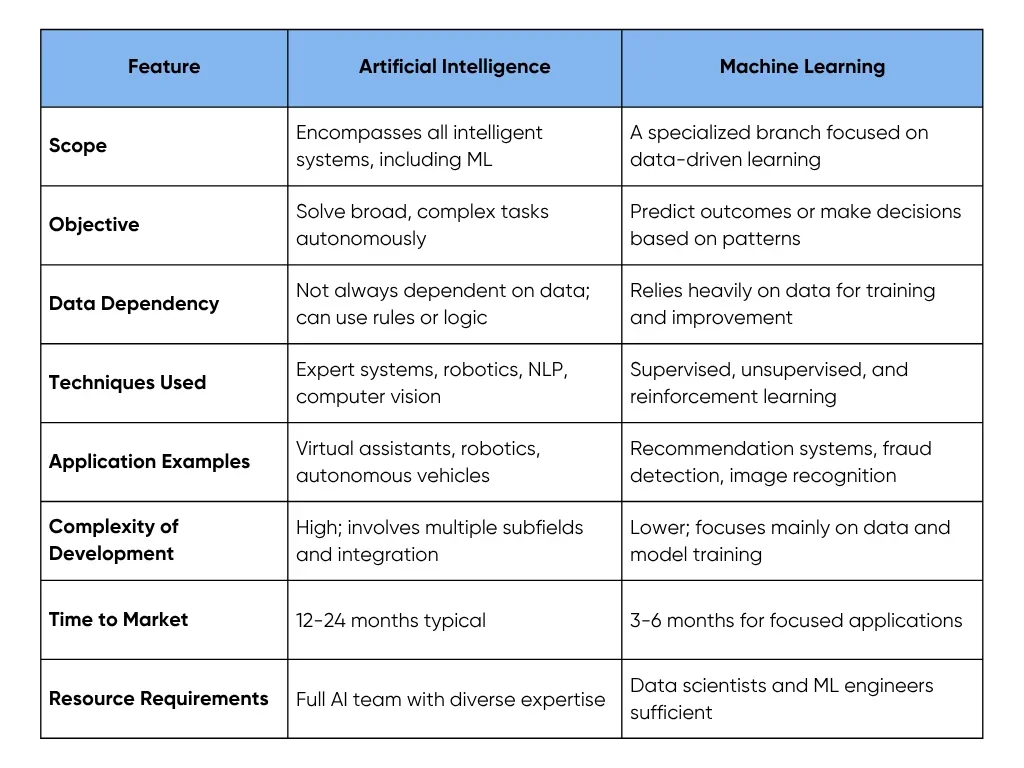

Artificial Intelligence vs. Machine Learning

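While they sound very similar, artificial intelligence and machine learning are two distinct concepts. AI is the broad field of building systems that perform tasks normally requiring human intelligence, while machine learning is the subset of AI in which systems learn patterns from data instead of following hand-written rules. As a rule of thumb: all ML is AI, but not all AI is ML.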

Types of AI

- Artificial Narrow Intelligence (ANI): 95% of current business applications. Focused on specific tasks like customer support chatbots or fraud detection. Lower risk, faster ROI.

- Artificial General Intelligence (AGI): Still theoretical. Not viable for business applications yet.

- Artificial Superintelligence (ASI): Purely speculative. Focus on ANI for practical business value.

Pre-Development Checklist

- Define the specific business problem AI will solve

- Identify measurable success metrics (accuracy, time saved, cost reduced)

- Assess data availability and quality

- Determine if simpler automation tools could suffice

- Set realistic timeline expectations with stakeholders

- Establish budget including 20% buffer for unforeseen challenges

Common Risks

- Choosing AI when rule-based systems would suffice

- Underestimating data requirements (minimum 10 times as many data points as the number of features in your dataset)

- Expecting AGI capabilities from ANI systems
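The "10 times as many data points as features" rule of thumb above is easy to check before any modeling starts. A minimal sketch, with hypothetical function names and example numbers:

```python
def minimum_rows_needed(n_features: int, multiplier: int = 10) -> int:
    """Rule-of-thumb minimum dataset size for a given feature count."""
    return n_features * multiplier


def data_shortfall(n_rows: int, n_features: int, multiplier: int = 10) -> int:
    """How many more rows are needed to satisfy the rule (0 if enough)."""
    return max(0, minimum_rows_needed(n_features, multiplier) - n_rows)


# Hypothetical dataset: 300 rows, 45 features.
print(data_shortfall(n_rows=300, n_features=45))  # 150
```

Treat this as a floor, not a target: meeting it only says the dataset is not obviously too small.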

During Aloa's Discovery Phase, our AI consultants conduct a comprehensive assessment to determine the optimal AI approach for your specific use case, saving weeks of research and preventing costly false starts.

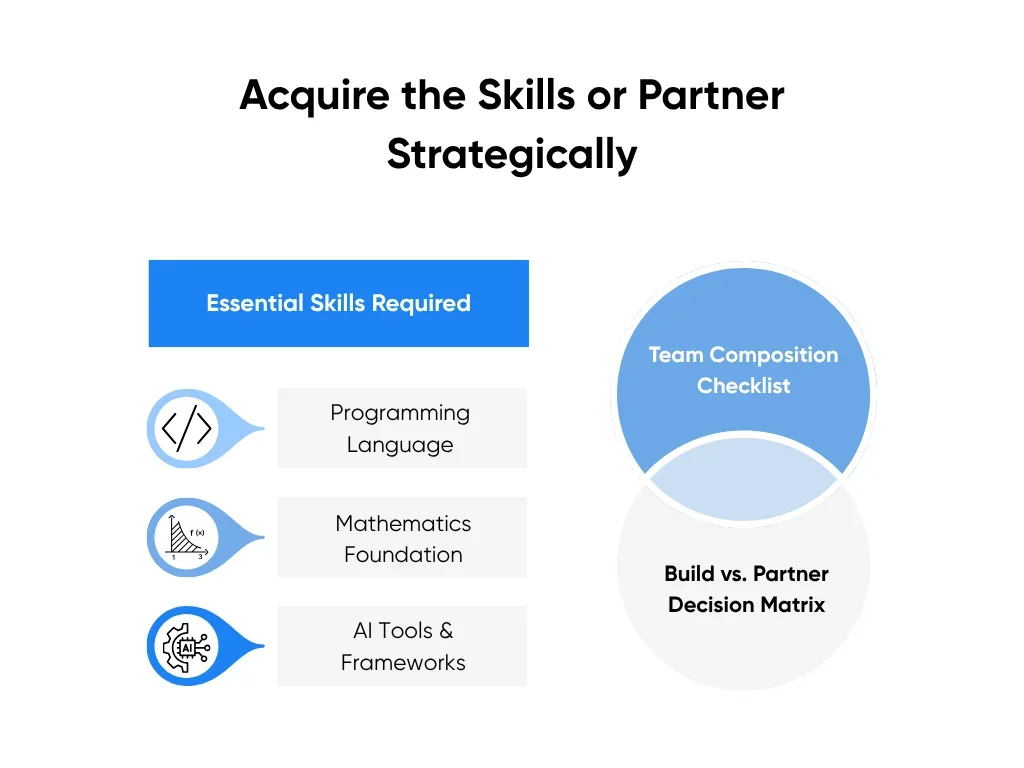

Step 2: Acquire the Necessary Skills (or Partner Strategically)

Building AI requires a unique blend of technical expertise that's rare and expensive to acquire internally. The global shortage of AI talent means hiring a full team can cost $500K-$1M annually. Strategic partnerships or hybrid models often deliver better ROI, especially for initial projects.

Essential Skills Required:

- Python programming: 80% of AI projects use Python with extensive libraries (TensorFlow, PyTorch, scikit-learn)

- Linear algebra and statistics: Neural network operations, model evaluation, and uncertainty quantification

- Machine learning frameworks: TensorFlow/Keras for production, PyTorch for research, scikit-learn for prototyping

- Data engineering: Pipeline development, data preprocessing, and database management

- Cloud platforms: AWS, Azure, or Google Cloud for scalable AI deployment

- Mathematics fundamentals: Calculus for optimization algorithms and gradient descent

- MLOps tools: Model versioning, monitoring, and automated deployment workflows

Aloa's pre-vetted team includes all necessary roles, allowing you to start immediately without the 3-6 month hiring process. Our flexible engagement model lets you scale expertise based on project phases.

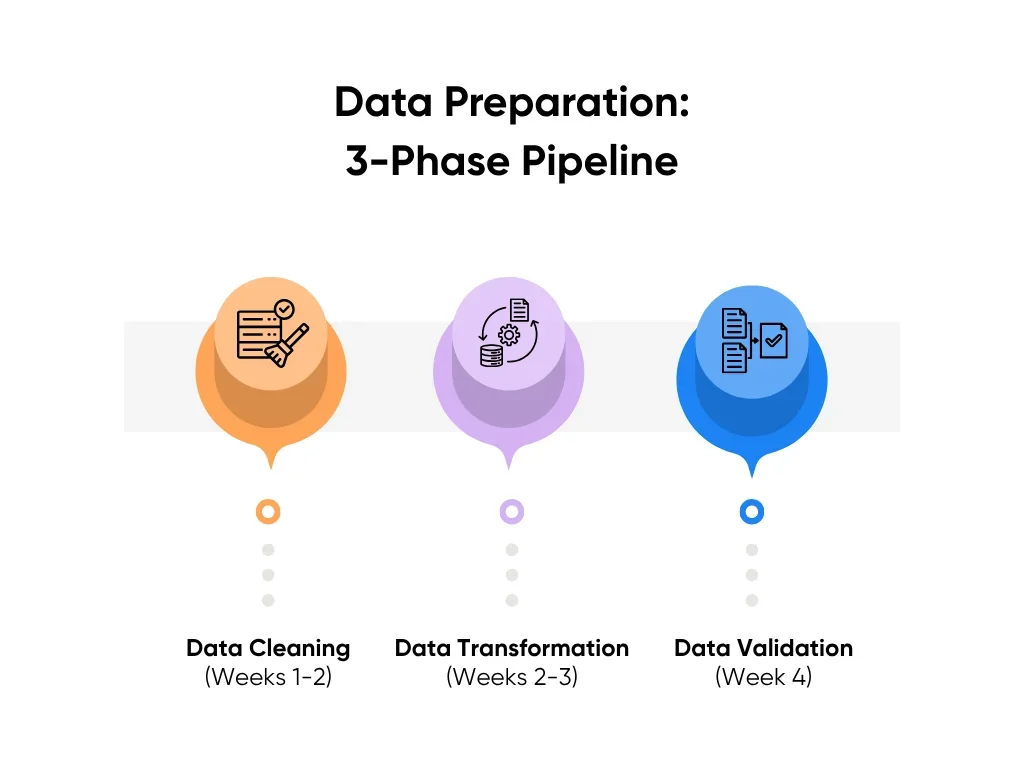

Step 3: Data Collection and Preparation

Data quality determines AI success more than any other factor—garbage in, garbage out applies exponentially to AI systems.

Poor data quality causes roughly a third of AI project failures. Organizations can spend up to 80% of project time on data preparation, yet most underbudget this critical phase.

Data Requirements Assessment

Minimum Viable Data Thresholds:

- Classification tasks: 1,000 examples per class

- Object detection: 10,000+ annotated images

- NLP tasks: 50,000+ text samples

- Time series prediction: 2-3 years of historical data
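For classification, the per-class threshold above can be checked directly against your labels. The sketch below assumes labels are available as a simple list. The function name and the example labels are hypothetical.

```python
from collections import Counter

MIN_EXAMPLES_PER_CLASS = 1_000  # classification threshold quoted above


def underrepresented_classes(labels, minimum=MIN_EXAMPLES_PER_CLASS):
    """Return each class that has fewer examples than the minimum."""
    counts = Counter(labels)
    return {label: n for label, n in counts.items() if n < minimum}


# Hypothetical label column: plenty of "spam", not enough "ham".
labels = ["spam"] * 1200 + ["ham"] * 400
print(underrepresented_classes(labels))  # {'ham': 400}
```

Classes that show up in the result are candidates for more collection, labeling, or synthetic data.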

Data Collection Strategy:

- Internal Sources: CRM systems, transaction logs, sensor data

- External Sources: APIs, purchased datasets, web scraping (with legal clearance)

- Synthetic Data: For privacy-sensitive applications or rare events

Aloa's data engineering team has processed 100M+ data points across industries. Our automated data quality assessment tools identify issues in hours, not weeks, while our partnerships with labeling services reduce costs by 40%.

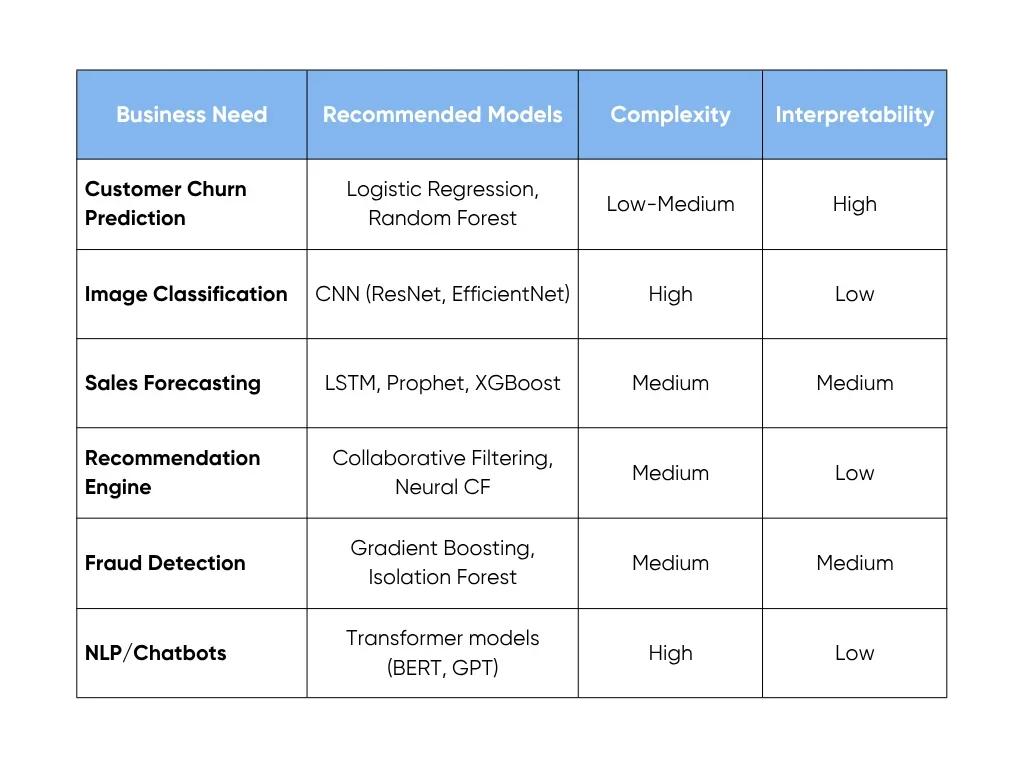

Step 4: Model Selection and Proof of Concept

Choosing the right model architecture balances performance requirements with practical constraints like interpretability and deployment costs.

The "best" model academically often isn't the best for business. A slightly less accurate but explainable model may be preferable for regulated industries or customer-facing applications. Follow this framework to find the right model for your business use case:

- Weeks 1-2 - Baseline Model: Implement the simplest viable approach to establish performance benchmarks and identify data gaps.

- Weeks 3-4 - Advanced Models: Test 2-3 sophisticated approaches, comparing accuracy versus complexity tradeoffs and computational requirements.

- Weeks 5-6 - Business Validation: Demo to stakeholders with real examples and gather feedback on accuracy thresholds while estimating production costs.
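One common choice for the "simplest viable approach" in the baseline step is a model that always predicts the most frequent class. The sketch below is an assumption about what a baseline could look like, not a prescribed method, and the churn labels are made up.

```python
from collections import Counter


def fit_majority_baseline(train_labels):
    """'Train' by remembering the most common label."""
    return Counter(train_labels).most_common(1)[0][0]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical churn labels.
train_labels = ["no_churn"] * 8 + ["churn"] * 2
test_labels = ["no_churn", "no_churn", "churn", "no_churn"]

majority = fit_majority_baseline(train_labels)
baseline_accuracy = accuracy(test_labels, [majority] * len(test_labels))
print(majority, baseline_accuracy)  # no_churn 0.75
```

Any advanced model tested in weeks 3-4 has to beat this number by enough to justify its extra complexity.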

Aloa's model selection process includes business constraints from day one. Our POC phase includes production-ready code and infrastructure estimates, preventing the common "works in lab, fails in production" scenario.

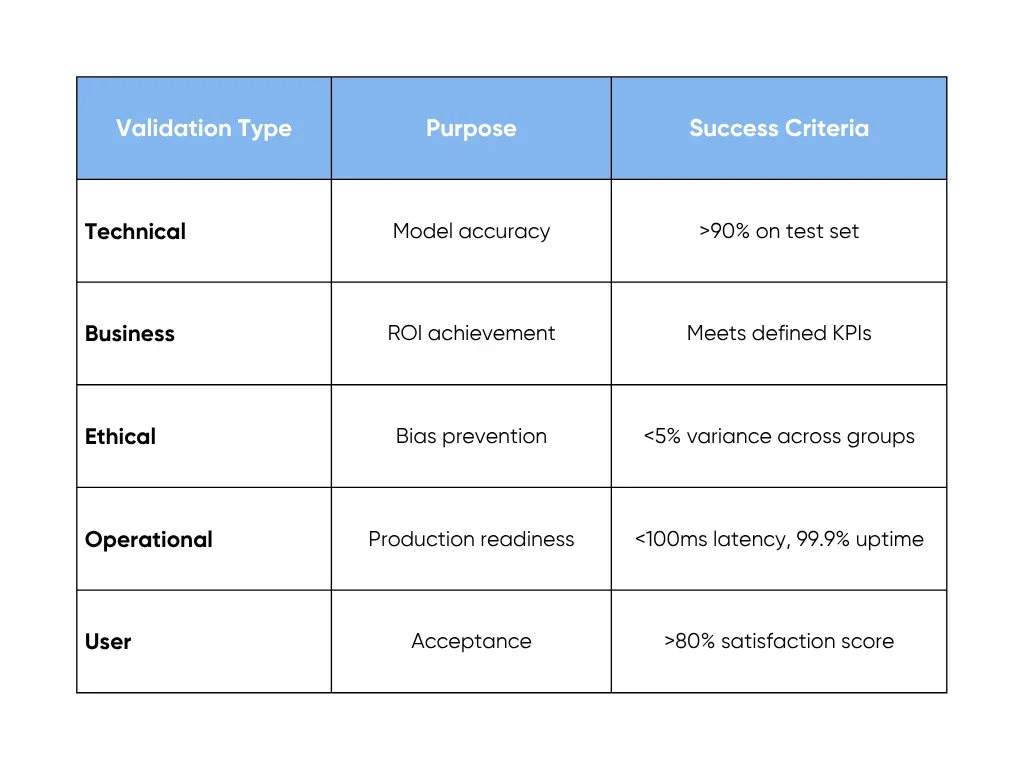

Step 5: Training, Testing, and Validation

Model training transforms your proof of concept into a production-ready system through rigorous iteration and validation. This phase determines whether your AI delivers consistent business value or becomes an expensive experiment.

Training Process (6-8 weeks):

- Weeks 1-2 - Initial Training: Hyperparameter tuning and cross-validation to prevent overfitting while tracking costs for budget management.

- Weeks 3-4 - Advanced Optimization: Implement ensemble methods and model compression while setting up A/B testing frameworks for deployment.

- Weeks 5-6 - Robustness Testing: Conduct adversarial testing across demographic groups and stress test with 10x expected load to identify failure modes.

- Weeks 7-8 - Final Validation: Complete hold-out test evaluation, business metric validation, and user acceptance testing with comprehensive documentation.
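Cross-validation, mentioned in the initial training step, means rotating which slice of the data is held back for validation. Libraries such as scikit-learn provide this out of the box. The plain-Python sketch below only shows the idea, using a hypothetical helper and contiguous folds (in practice you would usually shuffle first).

```python
def k_fold_indices(n_samples: int, k: int):
    """Yield (train_indices, validation_indices) for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread any remainder across the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


for train_idx, val_idx in k_fold_indices(n_samples=10, k=3):
    print(f"train on {len(train_idx)} rows, validate on rows {val_idx}")
```

Every row is used for validation exactly once, so the averaged score is less dependent on one lucky or unlucky split.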

Aloa's testing protocols, refined across 200+ deployments, catch issues before they impact your business. Our bias testing framework exceeds industry standards, protecting your reputation and ensuring ethical AI deployment.

Step 6: Deployment and Integration

Deployment transforms your trained model into a business asset, requiring careful orchestration of technical and organizational elements. 87% of data science projects never make it to production due to underestimating the 10x effort gap between working models and deployed systems.

Deployment Process (5-6 weeks):

- Week 1 - Infrastructure Setup: Implement containerization (Docker), CI/CD pipelines, and security hardening with encryption and authentication.

- Weeks 2-3 - System Integration: Develop APIs, establish database connections, and integrate with existing business systems while building error handling mechanisms.

- Week 4 - Performance Optimization: Implement caching strategies, model quantization for faster inference, and horizontal scaling configuration.

- Weeks 5-6 - Go-Live Preparation: Set up blue-green deployment with rollback procedures, train teams on incident response, and plan customer communication.
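As one small example of the caching strategies mentioned in the performance optimization step, repeated identical requests can be answered from memory instead of re-running the model. The sketch uses Python's standard `functools.lru_cache`. The `expensive_model_predict` function is a hypothetical stand-in for a real inference call.

```python
from functools import lru_cache


def expensive_model_predict(features: tuple) -> float:
    """Hypothetical stand-in for a slow model inference call."""
    return sum(features) / len(features)


@lru_cache(maxsize=1024)
def cached_predict(features: tuple) -> float:
    """Serve repeated requests from an in-memory cache."""
    # Inputs must be hashable, hence a tuple rather than a list.
    return expensive_model_predict(features)


first = cached_predict((1.0, 2.0, 3.0))   # computed by the model
second = cached_predict((1.0, 2.0, 3.0))  # served from the cache
print(first, second, cached_predict.cache_info())
```

Caching only helps when identical inputs recur, and the cache must be cleared whenever a new model version is deployed.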

Aloa's deployment methodology includes pre-built integrations for common platforms, reducing deployment time by 50%. Our DevOps team ensures zero-downtime deployments and provides 24/7 monitoring during the critical first month.

Step 7: Monitoring, Feedback, and Continuous Improvement

AI systems require active management to maintain performance and adapt to changing conditions. A deploy-and-forget approach leads to degraded accuracy and lost value. Model performance typically degrades 10-20% within 6 months due to data drift. Follow this framework to proactively maintain ROI and prevent costly failures:

- Real-time monitoring: Track model accuracy (hourly), inference latency (<100ms target), error rates, and resource utilization through automated dashboards.

- Monthly reviews: Analyze performance against KPIs, synthesize user feedback, detect data drift, and prioritize improvements.

- Quarterly updates: Retrain models with new data (5-15% accuracy improvement), optimize architecture, and conduct A/B testing with gradual rollout.

- Annual strategic review: Integrate technology advancements, make major architecture decisions, and refine scaling strategies.

- Feedback collection: Use in-app buttons, quarterly surveys, support ticket analysis, and usage analytics to capture user insights.

- Model governance: Implement version control, audit trails, quarterly bias assessments, role-based access controls, and comprehensive documentation.
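Data drift, referenced in the monitoring items above, can be quantified by comparing the distribution of a feature at training time with what the model sees in production. One widely used measure is the Population Stability Index (PSI). The sketch below is a simplified plain-Python version with equal-width bins. The function name, bin count, and sample data are assumptions.

```python
import math


def population_stability_index(expected, actual, n_bins=10):
    """PSI between a baseline sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant data

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(n_bins - 1, idx))] += 1  # clamp out-of-range values
        # A small floor avoids taking log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_fractions(expected), bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]      # hypothetical training data
shifted = [x + 0.5 for x in baseline]         # hypothetical drifted data
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be tuned to your own data before wiring them to a retraining trigger.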

Aloa's managed AI service includes proactive monitoring, automatic retraining triggers, and monthly optimization reports. Our ML engineers handle updates and improvements, ensuring your AI evolves with your business needs while you focus on leveraging its insights.

Business Integration: Deploying AI into Real Workflows

Effective AI deployment relies as much on careful integration into business processes and the human side of tech as it does on building a working model. Keep these best practices in mind so your AI upgrades your business rather than sitting on a shelf.

Integrating AI into existing business processes

- Start integration planning before development begins.

- Map current workflows in detail, identifying where AI fits and what processes need to change.

- Plan gradual rollouts with pilot groups who provide feedback and refine the integration.

- Build fallback mechanisms into your design. AI systems can fail, so workflows need backup processes.
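A fallback mechanism can be as simple as a wrapper that routes a request to the existing manual or rule-based process whenever the model errors out or is not confident enough. In this sketch the function names, the 0.7 confidence threshold, and the `(label, confidence)` return shape are all assumptions.

```python
def predict_with_fallback(model_predict, fallback, request, min_confidence=0.7):
    """Use the model when it is healthy and confident, else the backup process."""
    try:
        label, confidence = model_predict(request)
    except Exception:
        # Model is down or crashed: keep the workflow moving.
        return fallback(request), "fallback:error"
    if confidence < min_confidence:
        return fallback(request), "fallback:low_confidence"
    return label, "model"


# Hypothetical model and backup process for a ticket-routing workflow.
def demo_model(request):
    return ("billing", 0.55)


def manual_queue(request):
    return "route_to_human"


print(predict_with_fallback(demo_model, manual_queue, {"text": "refund?"}))
```

Returning the source alongside the answer makes it easy to track how often the fallback fires, which is itself a useful health metric.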

Managing the Human Side of AI Adoption

- Change management: Address technical concerns and emotional resistance about job displacement through transparent communication about AI's role and limitations.

- User training: Develop role-specific programs that focus on how AI changes individual responsibilities rather than generic education.

- Stakeholder communication: Set clear expectations about AI capabilities and limitations. Be honest about accuracy levels and ongoing human roles. Celebrate early wins and share success stories.

Common Challenges and Pitfalls in AI Projects

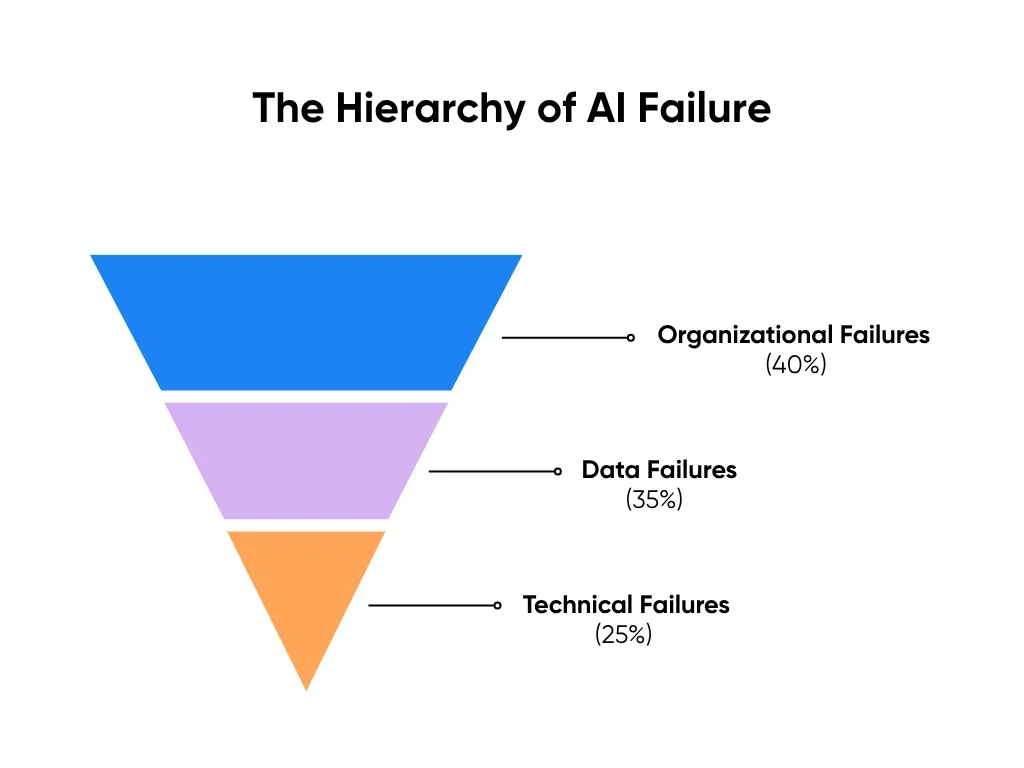

Understanding why 80-85% of AI projects fail provides a roadmap for success. By anticipating and preparing for these challenges, you can navigate around the pitfalls that trap most initiatives.

Data Quality and Bias

Poor data quality causes 35% of AI project failures, while undetected bias can lead to legal liability and reputational damage. This turns "garbage in, garbage out" into "garbage in, discrimination out."

Common Manifestations:

- Historical data encoding past discrimination (hiring, lending patterns)

- Customer data skewed toward certain demographics

- Sensor data with systematic measurement errors

- Labels reflecting subjective human biases

Mitigation Strategies:

- Conduct demographic analysis and statistical tests for representativeness before training

- Implement regular bias testing across protected categories during development

- Set up continuous monitoring for discriminatory outcomes post-deployment

- Establish feedback mechanisms for bias reporting and quick response protocols
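One simple bias test from the strategies above compares how often each group receives a positive outcome. The sketch below computes per-group selection rates and the ratio between the lowest and highest rate. The group labels and outcomes are fabricated toy data for illustration only.

```python
from collections import defaultdict


def selection_rates(groups, outcomes):
    """Share of positive outcomes (1) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += int(outcome)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Toy data: group "a" is approved 60% of the time, group "b" 30%.
groups = ["a"] * 10 + ["b"] * 10
outcomes = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

rates = selection_rates(groups, outcomes)
print(rates, disparate_impact_ratio(rates))
```

A ratio below 0.8 is often used as a warning sign (the "four-fifths" rule of thumb from US employment guidance), though whether it applies to your use case is a legal question, not a coding one.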

Overfitting and the Lab-to-Production Gap

Models achieving 95% accuracy in testing often drop to 70% in production due to overfitting, which is like a student memorizing practice answers instead of understanding the concepts.

Why Overfitting Happens:

- Training on overly clean data that doesn't match real-world conditions

- Optimizing for wrong metrics or insufficient real-world variation

- Time-based data leakage in training sets

- Using extremely complex models for simple problems

Prevention Strategies:

- Use robust cross-validation (K-fold, time-based splits, stratified sampling)

- Add noise to match real-world data quality during testing

- Test with edge cases, adversarial examples, and production load simulation

- Watch for red flags like >5% gap between training and validation accuracy
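Two of the prevention strategies above are easy to sketch: a time-based split, which keeps future records out of the training set, and the >5% train/validation gap check. The function names, the `"t"` timestamp key, and the sample records are hypothetical.

```python
def time_based_split(records, timestamp_key, train_fraction=0.8):
    """Train on the oldest records, validate on the newest."""
    ordered = sorted(records, key=lambda r: r[timestamp_key])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


def overfitting_red_flag(train_accuracy, validation_accuracy, max_gap=0.05):
    """True when training accuracy beats validation by more than max_gap."""
    return (train_accuracy - validation_accuracy) > max_gap


# Hypothetical records with an integer timestamp "t", deliberately unordered.
records = [{"t": t} for t in [5, 1, 9, 3, 7, 2, 10, 4, 8, 6]]
train, validation = time_based_split(records, timestamp_key="t")

print(len(train), len(validation))
print(overfitting_red_flag(train_accuracy=0.95, validation_accuracy=0.70))
```

A random split on time-ordered data lets the model peek at the future, which is one of the most common sources of the leakage described above.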

Integration and Technical Debt

AI systems accumulate technical debt faster than traditional software. Google found only 5% of AI code is actual ML, with the rest being increasingly fragile glue code.

How Debt Accumulates:

- Pipeline complexity growing exponentially over time

- Dependency management becoming unmanageable

- Model versioning conflicts with data versioning

- Performance optimization conflicting with maintainability

Debt Prevention:

- Use modular design with clear interfaces and containerization

- Implement code reviews, automated testing, and documentation as code

- Standardize on single ML frameworks and consistent processing libraries

- Schedule regular refactoring sprints and maintain unified monitoring

Unrealistic Expectations and Scope Creep

Stakeholders often expect AGI capabilities from narrow AI, leading to disappointment and project abandonment when reality doesn't match inflated expectations.

Scope Creep Patterns:

- Feature creep: "Can it also do X?"

- Data creep: "Let's add this other dataset"

- Metric creep: "We also need to optimize for Y"

- Timeline creep: "Let's make it perfect before launch"

Expectation Management:

- Conduct AI capability workshops with realistic demo scenarios

- Provide weekly progress updates and early prototype demonstrations

- Define clear success criteria and written scope agreements

- Plan phased capability expansion with regular performance reviews

Resource and Skill Gaps

The AI talent shortage means even funded projects struggle to find qualified team members, and with AI skills having a 2-3 year half-life, the people you do hire need continuous learning.

Resource Challenges:

- Very low availability of ML engineers and AI product managers

- High costs ($150K-$220K+ for specialized roles)

- Rapid skill obsolescence requiring constant retraining

- Competition for limited talent pool

Mitigation Approaches:

- Follow build-buy-partner strategy: build core advantages, buy commodities, partner for expertise

- Use pre-trained models and automated ML platforms when possible

- Implement internal AI literacy training and mentorship programs

- Share resources across projects and create reusable components

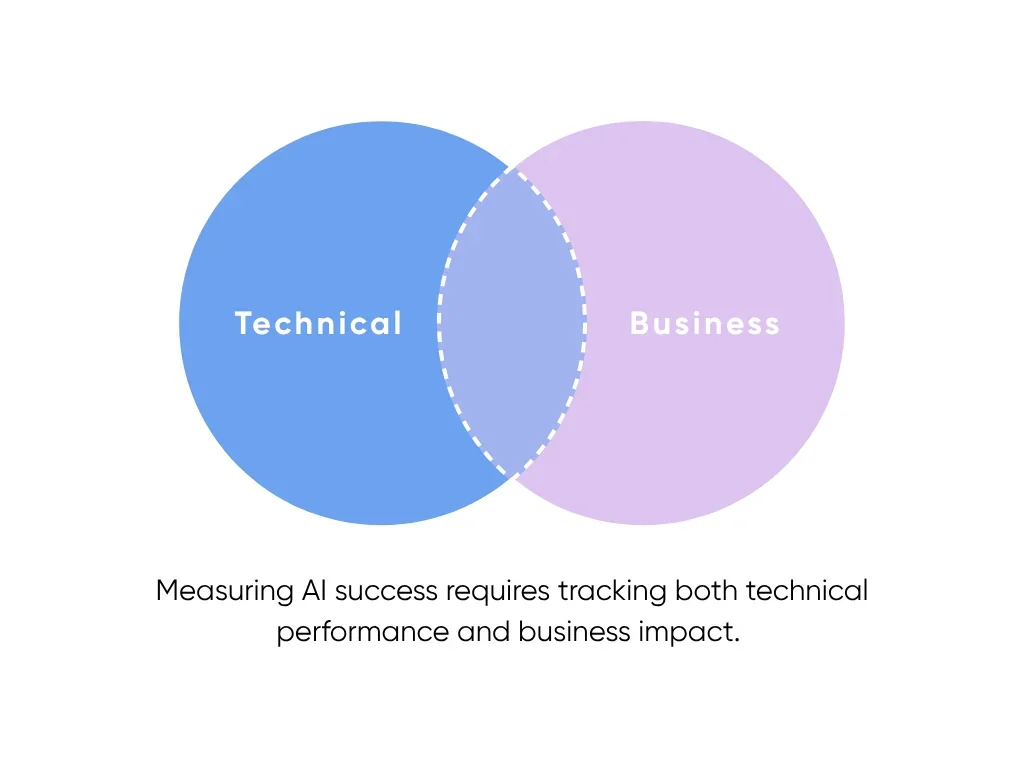

Measuring AI Success: KPIs and ROI

Measuring AI success requires a balanced approach that tracks both technical performance and business impact. Unlike traditional software projects, AI initiatives involve uncertainty, iteration, and evolving requirements that make simple success metrics insufficient for comprehensive evaluation.

KPIs and Other Success Metrics

Establish a multi-layered measurement framework that includes:

- Technical metrics: Measure accuracy, precision, recall, and response time to determine whether your AI system is working correctly. Make sure to align these with your specific use case requirements.

- User adoption metrics: Track active users, feature utilization, and user satisfaction to determine whether people find your AI valuable.

- Business outcome measures: Cost savings, revenue impact, and efficiency gains demonstrate ROI. Calculate direct savings from automation, efficiency gains from faster decision-making, and revenue increases from improved customer experiences. Don't forget to measure indirect benefits like employee satisfaction, customer retention, and competitive advantage.
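The technical metrics named above have precise definitions that are worth seeing once. The sketch below computes accuracy, precision, and recall for a binary classifier from scratch. The labels are made-up example data, and in practice a library such as scikit-learn would do this for you.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Of everything flagged positive, how much really was positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything truly positive, how much did we catch?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up labels: 1 = fraud, 0 = legitimate.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Which metric matters most depends on the use case: missing fraud (low recall) and blocking good customers (low precision) carry very different business costs.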

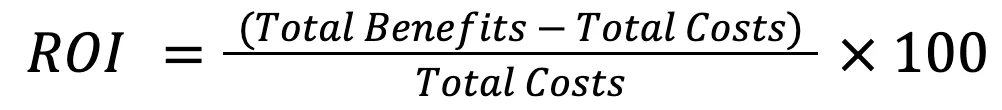

Calculating ROI

ROI calculation for AI projects requires careful attention to both costs and benefits over time. Total costs include:

- Initial development ($50,000-$500,000 for most business applications)

- Annual maintenance (15-20% of original project cost)

- Infrastructure expenses and personnel time

Benefits span direct savings, revenue increases, and strategic value creation.

Use this formula to calculate ROI:

ROI (%) = (Total Benefits - Total Costs) / Total Costs × 100
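As a rough worked example, the sketch below totals the cost categories listed above and computes ROI as benefits minus costs, divided by costs, times 100. All dollar amounts, the 15% maintenance rate, and the two-year horizon are hypothetical inputs chosen for illustration.

```python
def total_cost(development, annual_maintenance_rate, monthly_infrastructure, years):
    """Development plus recurring maintenance and infrastructure."""
    maintenance = development * annual_maintenance_rate * years
    infrastructure = monthly_infrastructure * 12 * years
    return development + maintenance + infrastructure


def roi_percent(total_benefits, total_costs):
    """(Total Benefits - Total Costs) / Total Costs x 100."""
    return (total_benefits - total_costs) / total_costs * 100


# Hypothetical two-year project.
costs = total_cost(
    development=200_000,
    annual_maintenance_rate=0.15,
    monthly_infrastructure=1_000,
    years=2,
)
roi = roi_percent(total_benefits=500_000, total_costs=costs)
print(f"Total cost: ${costs:,.0f}, ROI: {roi:.1f}%")
```

Running the same calculation with pessimistic and optimistic benefit estimates gives a range, which is usually more honest than a single number.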

Tracking adoption and business impact

Monitor user adoption through engagement metrics that indicate actual usage rather than just initial trial. Track adoption using these methods:

- Surveys, usage analytics, and support data: These will tell you how AI is performing and where to improve.

- Performance baselines: Setting these before deployment helps track cost, time, and quality to measure real ROI.

- Monitor early adoption, satisfaction, and performance: Note how these metrics fare during the first few months to predict success.

- Hold regular reviews: Keep stakeholders aligned and operations efficient with performance reviews at least once every few months.

- Benchmark results: See how you match up against industry standards. Most see 3.5X ROI in 14 months, while top performers hit 8X in 2 years.

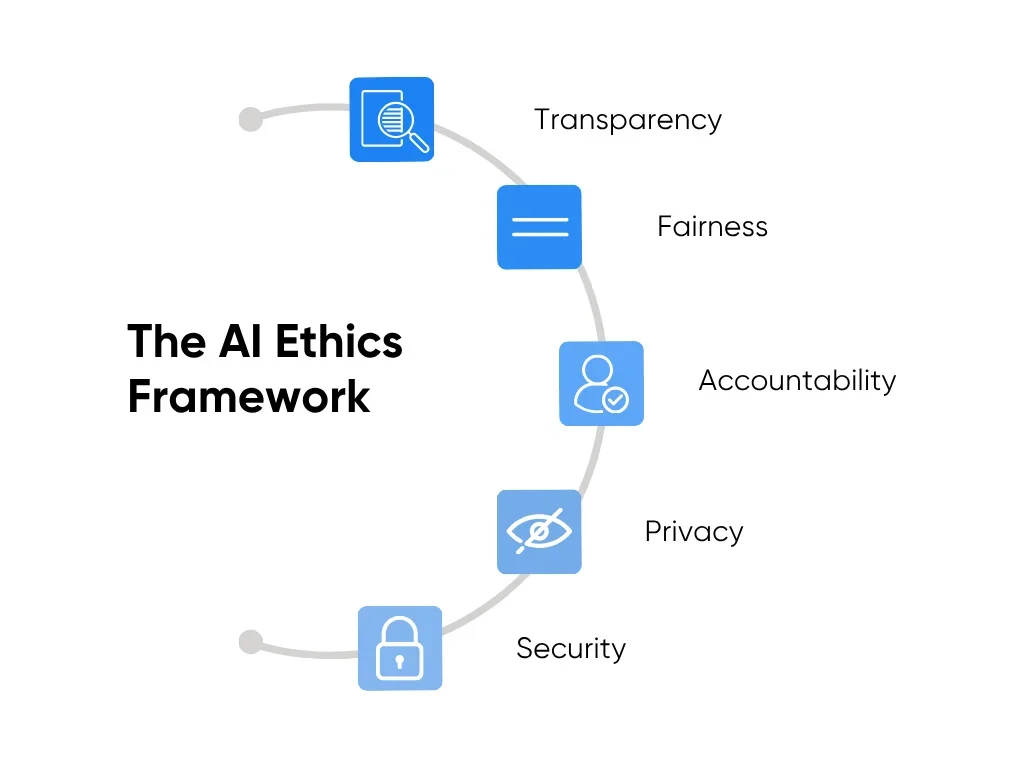

Ethical, Security, and Governance Considerations

Your AI project's success depends as much on responsible deployment as technical performance. Prioritizing AI governance from the start avoids costly legal battles, regulatory fines, and damage to your reputation while building sustainable competitive advantages.

Data Privacy, Compliance, and Responsible AI

Privacy regulations are evolving rapidly, and AI systems create new compliance challenges. Balancing business agility with legal requirements begins with knowing the requirements that most privacy and AI regulations share.

The most critical requirement across all privacy laws is obtaining proper consent and providing transparency about AI decision-making processes. Whether dealing with EU residents under GDPR or California consumers under CCPA, you must clearly explain how AI systems use personal data and give users meaningful control over their information. This includes informing individuals when their data trains AI models, ensuring automated decisions can be explained in understandable terms, and allowing users full control over their data.

Security

AI systems face unique security challenges that traditional cybersecurity doesn't fully address. Protect your data through encryption and access controls, while safeguarding your models against theft or tampering. Support this with continuous system monitoring to catch threats and unusual activity early, as well as role-based permissions to ensure that sensitive components are accessed by the right people.

Governance Frameworks for Enterprise AI

Create an AI oversight team with technical leaders, legal advisors, and business stakeholders to guide your AI projects. This team should classify projects by risk level, set approval processes, monitor for bias and performance issues, and handle problems when they arise.

Good governance actually speeds up innovation by giving teams clear rules to follow. When people know what's expected and feel confident their work won't cause legal problems, they move faster and take smarter risks. Start by forming your oversight committee, writing simple ethics guidelines, setting up bias monitoring tools, and creating response plans for when things go wrong.

Frequently Asked Questions

How do I know if my business problem is suitable for AI?

If your problem involves pattern recognition in large datasets, predictions from historical data, or automation of repetitive cognitive tasks, then it’s likely something AI will be able to help handle in some capacity.

Be cautious about applying AI where you require 100% accuracy or deep contextual understanding, as those cases are likely to be much more complex to build and integrate.

What are the most common reasons AI projects fail?

80-85% of AI projects fail due to misaligned expectations, poor data quality, and other issues that cannot be solved with technology. We recommend starting with pilots, allocating 40% of the budget to data preparation, and including business stakeholders at critical checkpoints.

How do I calculate the ROI of an AI project?

Use this simple formula: (Total Benefits - Total Costs) / Total Costs × 100.

Your costs typically include development cost, maintenance (15-20% of the development cost, annually recurrent), and infrastructure (monthly recurrent).

What ethical and legal issues should I consider?

Using AI can lead to unique liabilities that require GDPR/CCPA compliance, bias auditing, and adherence to industry-specific regulations. Regulations are changing as AI continues to evolve rapidly.

You should consider implementing basic frameworks that demand transparency, accountability, fairness, and privacy. Consider purchasing liability insurance if you’re just starting out as a company.

How can I ensure the security and privacy of my data?

Use encryption to protect the training data and model weights that power your AI system. Set up access controls so only authorized team members can access sensitive datasets or modify the AI model.

You should carefully vet any third-party AI services or cloud providers you integrate with. You can also use techniques like federated learning to train models without centralizing sensitive data, or implement on-device inference so user data never leaves their device.

What are the ongoing costs and support needs after deployment?

Costs vary depending on the project's scope. Typically, post-deployment costs account for 15-20% of the initial development costs annually.

Standard costs include: infrastructure, monitoring, and any support staff or system admins. Additional features based on new user feedback will incur additional costs. Contact Aloa and get your quote today!

How long does it typically take to build and launch an AI solution?

AI implementation can vary greatly depending on the problem you’re trying to solve. While some projects can be completed in weeks, complex systems can take upwards of 12 months. This is why we offer tailored AI consultation to identify the best solution for your business.

Key Takeaways

If you learn how to make an AI systematically and methodically, you can potentially achieve 8X ROI. Start with clear business problems, invest heavily in data quality, choose practical over perfect solutions, and plan for continuous improvement from day one.

Ready to accelerate your AI journey? Schedule a free AI readiness assessment with Aloa to discover how AI can solve your specific business challenges. In 30 minutes, you'll walk away with a clear understanding of your AI potential, implementation timeline, and investment requirements.

Don't let another quarter pass wondering "what if." The companies deploying AI today are building tomorrow's competitive advantages. Get started now!