9 a.m. on a Monday. You open your calendar. AI vendor demo. Internal sync on “AI strategy.” And another internal email asking, “What’s our AI plan?”

Your job now revolves around implementing artificial intelligence for financial services, and you are pulled in several directions at once. You need lower fraud losses and smarter analytics. But in a world where customer trust is hard-won and easily lost, you also need to make that progress without losing control.

At Aloa, we build custom AI systems for finance teams like yours. We stay close to your data rules, legacy systems, and regulators, then build around those guardrails. This guide comes straight from that work across the finance industry. It focuses on how you move from “should we use AI” to “here is our plan for the next 12-18 months.”

In this guide, you can expect:

- A breakdown of where AI works in financial services today

- Use cases for fraud prevention, credit, customer service, and risk

- A roadmap for data, architecture, and vendor choices

- Examples of AI systems financial services teams have shipped

By the end, you should be able to explain your AI plan in a few minutes and have concrete work your team can start on.

TL;DR

- AI fraud detection models reduce losses by 10–20% and cut false positives by 20–30% when tied into live payments.

- Service bots handle 20–40% of routine contacts, which lowers cost per interaction and wait time.

- Credit models that use behavior data help lenders approve 5–10% more borrowers.

- Document automation cuts review time by about half and frees thousands of staff hours.

Understanding AI ROI Fundamentals in Financial Services

Artificial intelligence for financial services is software that learns from data. It helps your bank or fintech read markets, understand customers, follow their digital journeys, and respond at scale in ways that feel human, across every channel of your platform. In the background, it runs fraud checks, supports credit decisions, and handles routine service tasks.

When most teams look at AI, the first question is, “Will this pay off?” Many treat it like any other software project: add up build and license costs, compare them to saved hours, and call that ROI. In financial services, that is only part of the story. You also live and die by fraud, credit risk, service speed, and rules from regulators.

Because of that, AI ROI has two sides:

- Direct gains inside your business: fewer manual reviews, faster choices, lower call volume, smoother audits.

- Cost of inaction: what you lose by not using AI while your competitors use it to spot fraud faster, answer customers in seconds, and run cheaper, smoother operations.

To see both sides, you need a simple baseline before any model goes live. Capture today’s numbers for:

- Fraud Losses: Money you lose when fraud gets through, like paying back stolen card charges or writing off fake or stolen loans.

- Customer Service Times: How long it takes to fully fix a customer issue.

- Compliance and Audit Costs: Staff hours and outside fees to pass audits and fix gaps.

After you launch AI, measure the same numbers again. The change shows whether AI is helping or just adding noise. For fraud, you can go deeper with our AI fraud detection guide for banking teams.

Every AI project in your plan should clearly support at least one money lever: lower process cost, lower loss from fraud or bad credit, or higher retention from better service.

Direct Cost Savings Measurement

Pick one workflow, like card disputes or loan processing. Count how many hours per month your team spends on it today and what those hours cost.

To find savings, subtract the new monthly hours from the old monthly hours, then multiply by the hourly cost. That gives you the dollars saved each month.
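Here is that formula as a minimal Python sketch. The workflow figures are hypothetical placeholders, not benchmarks; replace them with your own baseline and post-launch numbers.

```python
def monthly_savings(old_hours: float, new_hours: float, hourly_cost: float) -> float:
    """Dollars saved per month on one workflow: (old hours - new hours) x hourly cost."""
    if min(old_hours, new_hours, hourly_cost) < 0:
        raise ValueError("hours and cost must be non-negative")
    return (old_hours - new_hours) * hourly_cost


# Hypothetical card-dispute workflow: 1,200 hours/month today, 700 after AI, $45/hour.
saved = monthly_savings(old_hours=1200, new_hours=700, hourly_cost=45)
print(f"${saved:,.0f} per month, ${saved * 12:,.0f} per year")
```

With these placeholder inputs it prints `$22,500 per month, $270,000 per year`.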

Most banks do not cut people. Instead, they move staff from copy-and-paste work to higher-value tasks like handling tricky cases and talking to customers.

Risk Reduction Value Quantification

For risk, focus on dollars, not just rates:

- For fraud, compare monthly fraud losses for each product before and after AI. The gap, times 12, is your yearly fraud reduction value.

- For credit, track early missed payments and charge-offs before and after new models. Fewer bad accounts mean more money kept.

- For compliance, total the hours, project spend, and any fines from past audits. If AI lowers exceptions and speeds reviews, the hours and penalties you avoid are part of your ROI.

When you add these numbers together, you get a clear view of how AI affects your bottom line. That makes it easier to decide which AI projects to scale, pause, or stop.
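A sketch of how the three risk levers roll up into one yearly figure, assuming you track monthly averages for each. The `RiskBaseline` fields, the hourly cost, and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RiskBaseline:
    """Monthly averages for one product line (field names are illustrative)."""
    fraud_loss: float        # dollars lost to fraud per month
    charge_offs: float       # dollars charged off per month
    compliance_hours: float  # staff hours on audits and exceptions per month
    fines: float             # penalties per month (annual total / 12)


def yearly_risk_value(before: RiskBaseline, after: RiskBaseline,
                      hourly_cost: float) -> dict:
    """Annualize the before/after gap for each risk lever, then sum them."""
    value = {
        "fraud": (before.fraud_loss - after.fraud_loss) * 12,
        "credit": (before.charge_offs - after.charge_offs) * 12,
        "compliance": ((before.compliance_hours - after.compliance_hours) * hourly_cost
                       + (before.fines - after.fines)) * 12,
    }
    value["total"] = sum(value.values())
    return value


# Hypothetical monthly numbers before and after launch.
before = RiskBaseline(fraud_loss=75_000, charge_offs=120_000, compliance_hours=400, fines=2_000)
after = RiskBaseline(fraud_loss=63_750, charge_offs=110_000, compliance_hours=300, fines=0)
print(yearly_risk_value(before, after, hourly_cost=60))
```

Keeping each lever separate, rather than reporting only the total, shows which project is actually moving the number.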

High-ROI AI Applications Across Financial Services

Let’s look at some examples of where AI made a measurable impact:

Fraud Prevention and Detection

AI fraud detection models train on labeled historical data: past card swipes, logins, and account changes, each marked as fraud or legitimate. Once live, the model scores each new event in seconds, and your fraud system triggers extra checks only on high-risk cases.
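The score-then-route step can be sketched in a few lines. This assumes the model returns a fraud probability between 0 and 1; the two thresholds and the three actions are hypothetical and would be tuned against your own loss and false-positive targets.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"         # let the event through with no added friction
    STEP_UP = "step_up"         # extra check, e.g. a one-time passcode
    HOLD_FOR_REVIEW = "review"  # send to the fraud desk


def route_event(fraud_score: float, step_up_at: float = 0.70,
                review_at: float = 0.90) -> Action:
    """Map a model's fraud probability to an action (thresholds are hypothetical)."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError("fraud_score must be a probability in [0, 1]")
    if fraud_score >= review_at:
        return Action.HOLD_FOR_REVIEW
    if fraud_score >= step_up_at:
        return Action.STEP_UP
    return Action.APPROVE


for score in (0.05, 0.75, 0.95):
    print(score, route_event(score).value)
```

Raising `step_up_at` sends fewer good customers to extra checks but lets more risk through; that tradeoff is what you tune.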

IBM describes this as using machine learning to scan large transaction streams and spot suspicious transaction patterns that fixed rules miss. Google Cloud also highlights fraud monitoring as a core finance AI use case.

If your confirmed card fraud losses are $900K a year, a 15% reduction protects $135,000 annually. Better targeting also cuts false positives, so fewer good customers are blocked.

Our guide to AI-based fraud programs in banking shows how teams aim for that level.

Automated Customer Service Solutions

AI customer service tools use natural language processing to read what a person types or says and map it to an intent, such as “card lost” or “update address,” during live customer interactions. The system either answers or hands the chat to a person with context when the issue is complex.
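The intent-then-handoff flow looks roughly like this. The keyword lists are a toy stand-in for a trained NLP intent model (plain substring matching would misfire in production), and the intent names and `Reply` fields are hypothetical. The part worth copying is the fallback: when nothing matches, the person who picks up the chat receives the context with it.

```python
from dataclasses import dataclass, field
from typing import Optional

# Toy keyword lists standing in for a trained NLP intent model (hypothetical).
INTENT_KEYWORDS = {
    "card_lost": ("lost", "stolen", "missing card"),
    "update_address": ("address", "moved", "new home"),
}


@dataclass
class Reply:
    intent: Optional[str]
    handled_by_bot: bool
    context: dict = field(default_factory=dict)  # passed to the human agent on handoff


def handle_message(text: str, customer_id: str) -> Reply:
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return Reply(intent=intent, handled_by_bot=True)
    # No match: hand off to a person with context so the customer
    # does not have to repeat themselves.
    return Reply(intent=None, handled_by_bot=False,
                 context={"customer_id": customer_id, "transcript": text})


print(handle_message("I think my card was stolen", "C-101"))
print(handle_message("Why was I charged a fee twice?", "C-102"))
```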

IBM notes that banks now use AI across chat, apps, and voice to support both self-service and human agents. Many teams aim to move 20 to 40% of routine contacts to AI during the first year.

Take a support team with 150,000 contacts a year at $4 each. If AI handles 50,000 of those at a lower unit cost (around $0.50), you save roughly $175,000 annually.
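The same math as a quick check you can rerun with your own volumes and unit costs (the inputs below are the example figures from the paragraph above):

```python
def deflection_savings(contacts_to_ai: int, human_cost: float, ai_cost: float) -> float:
    """Annual savings when contacts move from human agents to AI."""
    return contacts_to_ai * (human_cost - ai_cost)


total_contacts = 150_000
to_ai = 50_000
print(f"Share handled by AI: {to_ai / total_contacts:.0%}")
print(f"Annual savings: ${deflection_savings(to_ai, 4.00, 0.50):,.0f}")
```

It prints a 33% share and $175,000 in savings; that share sits inside the 20 to 40% first-year range mentioned above.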

Risk Assessment and Credit Scoring

AI credit models learn from historical loan outcomes. They read bureau scores, income, collateral, and behavior data, such as deposits and spending patterns, then output a score that your policy converts into a decision or rate.
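The model and the policy are separate pieces. A minimal sketch of the policy side, assuming a score where higher means lower risk; the bands, cutoffs, and APRs are hypothetical.

```python
from typing import NamedTuple, Optional


class Decision(NamedTuple):
    outcome: str
    apr: Optional[float]  # annual percentage rate; None when no rate is offered


# Hypothetical policy table: (minimum score, outcome, APR), ordered high to low.
POLICY_BANDS = [
    (0.85, "approve", 0.079),
    (0.70, "approve", 0.119),
    (0.55, "manual_review", None),
]


def apply_policy(score: float) -> Decision:
    """Convert a model score (higher = lower risk) into a decision and rate."""
    for min_score, outcome, apr in POLICY_BANDS:
        if score >= min_score:
            return Decision(outcome, apr)
    return Decision("decline", None)


for s in (0.90, 0.72, 0.60, 0.30):
    print(s, apply_policy(s))
```

Keeping cutoffs in a table rather than inside the model lets credit policy change them without retraining, and gives reviewers one place to read the rules.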

Google Cloud calls credit decisions a core use of AI because models can process more signals than classic scorecards. For a lender with 40,000 applications a year, approving an extra 5% of applications within your target risk band means 2,000 more good loans booked. Sharper signals can also lower early delinquencies.

That combination raises portfolio quality and reduces the time your collections team spends on preventable bad debt.

Operational Process Automation

Operational AI targets high-volume work such as onboarding, lending, and compliance checks. Tools read PDFs and forms, pull key fields, compare them to rules, and send clean data into your core systems.
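A sketch of the compare-to-rules step, assuming an upstream extraction tool has already turned the PDF into a dictionary of fields. The field names and rules are hypothetical.

```python
from typing import Any, Callable

# Hypothetical rules for fields pulled from a loan application PDF.
RULES: dict[str, Callable[[Any], bool]] = {
    "applicant_name": lambda v: isinstance(v, str) and v.strip() != "",
    "annual_income": lambda v: isinstance(v, (int, float)) and v > 0,
    "ssn_last4": lambda v: isinstance(v, str) and len(v) == 4 and v.isdigit(),
}


def check_extracted(fields: dict[str, Any]) -> tuple[bool, list[str]]:
    """Return (is_clean, failed_fields).

    Clean records can flow into the core system; anything else goes to a
    human exception queue along with the list of fields that failed.
    """
    failed = [name for name, rule in RULES.items()
              if name not in fields or not rule(fields[name])]
    return (not failed, failed)


print(check_extracted({"applicant_name": "Ana Lopez", "annual_income": 72_000, "ssn_last4": "1234"}))
print(check_extracted({"applicant_name": "", "annual_income": 72_000}))
```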

IBM describes AI in finance as a way to automate processes and improve decision quality across the back office, including document handling and monitoring tasks. For a lending unit that reviews 30,000 files a year, cutting average review time from 40 minutes to 22 minutes saves 18 minutes per file, which frees about 9,000 staff hours a year.

Those hours shift into exception handling, customer outreach, and advisory services that need human judgment. You also see better data quality, cleaner audit trails, and lower cost per loan or account opened. The same tools can also automate loan servicing tasks such as repayment tracking and account management, which improves the borrower experience.

Overcoming Common ROI Challenges and Pitfalls

Here are the main blockers to watch out for:

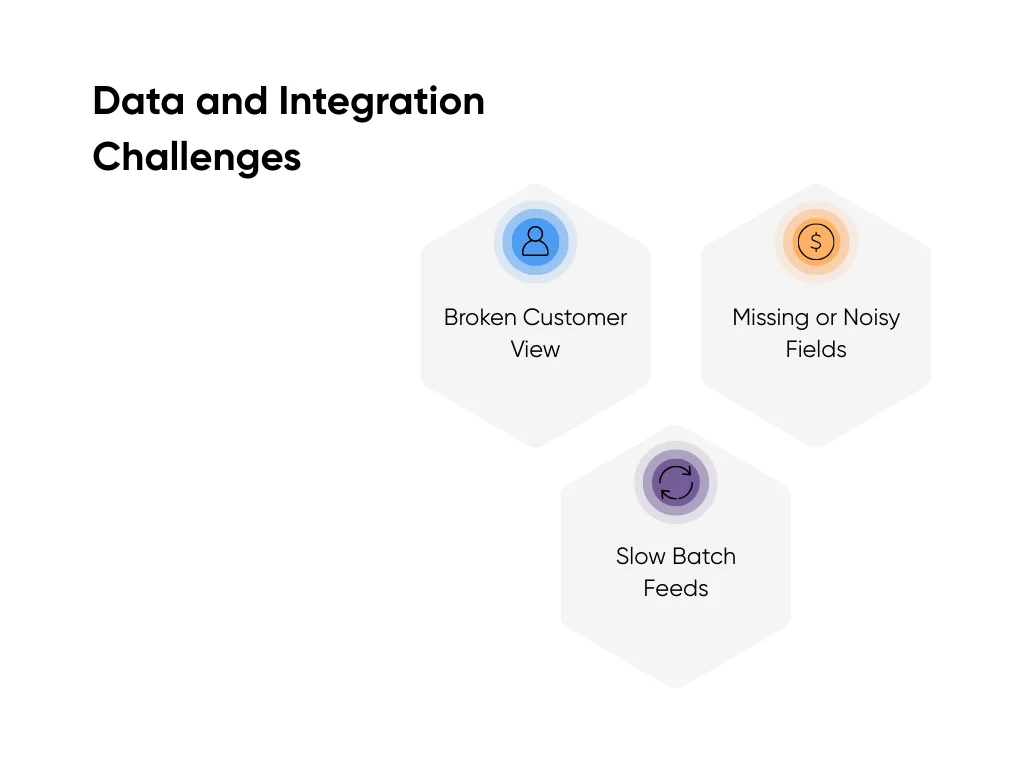

Data and Integration Challenges

Most AI trouble starts with data. Models need a long, clean history and steady live feeds. Yet you often sit on several core banking systems, loan platforms, vendor tools, and a CRM, with unclear ownership across them.

Common issues are:

- Broken customer view with the same person under several IDs and details spread across cards, deposits, and loans.

- Missing or noisy fields where income, merchant codes, or device IDs arrive late, wrong, or not at all.

- Slow batch feeds where nightly files drive models that should be scoring fraud or routing contacts in real time, while the customer waits.

To fix these, start by agreeing on one shared customer and account schema. Then use data profiling to decide which fields you actually trust and which can stay optional. Finally, add a light API or event layer in front of legacy systems so your models see the right signals without needing a full core swap.
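One simple profiling signal is fill rate: how often each field is actually populated. A minimal sketch, assuming records arrive as Python dictionaries; the 95% threshold is an arbitrary starting point, and real profiling would also check formats, ranges, and timeliness.

```python
def profile_fields(records: list[dict], trust_threshold: float = 0.95) -> dict[str, str]:
    """Label each field 'trusted' or 'optional' based on its fill rate."""
    if not records:
        return {}
    names = {key for record in records for key in record}
    labels = {}
    for name in sorted(names):
        # Missing keys, None, and empty strings all count as unfilled.
        filled = sum(1 for r in records if r.get(name) not in (None, ""))
        fill_rate = filled / len(records)
        labels[name] = "trusted" if fill_rate >= trust_threshold else "optional"
    return labels


sample = [
    {"customer_id": "C1", "income": 52_000, "device_id": "d-9"},
    {"customer_id": "C2", "income": None, "device_id": ""},
    {"customer_id": "C3", "income": 61_000},
    {"customer_id": "C4", "income": 48_000, "device_id": "d-2"},
]
print(profile_fields(sample))
```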

To protect your ROI, pick one priority flow, like card fraud scoring or digital onboarding. Wire it cleanly end to end, log everything, then reuse the same pattern for your next AI project.

Organizational and Change Management Issues

Your contact center, fraud desk, and credit team already work in familiar ways. A new AI tool often feels like a silent judge that might change targets or expose mistakes.

Frequent people hurdles:

- Fear of losing control where staff worry that scores will overrule their judgment or hurt their metrics.

- Thin training and communication where teams hear about AI once, then see a new button with no practice time.

- Low priority in the queue where managers treat AI as extra work and push rollout to the side.

Your rollout should feel like you’re teaching your team a brand-new tool. Start by sharing a few short, simple videos that show the new workflow step by step. Ask your staff if the flow makes sense and what feels off to them. Then move to co-pilot mode: the AI suggests, and your team clicks approve or edit. Each week, share a couple of clear wins, like extra fraud cases caught or shorter hold times. Before you go fully live, give everyone a test environment with fake cases so they can try edge scenarios and see how the tool behaves.

Regulatory and Compliance Obstacles

AI doesn’t change the rules. Supervisors still expect fair outcomes, sound model control, and clear records. A recent report from the US Government Accountability Office on artificial intelligence for financial services highlights data quality, bias, and third-party oversight as key concerns.

Typical trouble spots:

- Thin model documentation where risk and compliance see a score but not purpose, inputs, or limits.

- Weak monitoring with no firm plan for backtests, bias checks, or drift alerts.

- Vendor blind spots where contracts skip service levels, transparency, or exit plans.

You protect ROI when governance sits inside the project plan. Use a standard model template in plain language, set a monitoring rhythm with clear triggers, and build due diligence and off-ramps into vendor deals. This keeps AI work moving instead of getting stuck in review.
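For drift alerts, one widely used check in credit and fraud model monitoring is the population stability index (PSI), which compares today's score distribution with the one the model was approved on. It is offered here as one example of a clear trigger, not a regulatory requirement. The 0.10 and 0.25 cutoffs are common rules of thumb; set your own with your model risk team.

```python
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population stability index between two binned score distributions.

    Each list holds the share of scores in each bin (and should sum to 1),
    e.g. at model approval time versus this month.
    """
    if len(expected) != len(actual):
        raise ValueError("bin counts must match")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(value: float) -> str:
    # Rule-of-thumb cutoffs; replace with triggers agreed with model risk.
    if value < 0.10:
        return "stable"
    if value < 0.25:
        return "investigate"
    return "alert"


baseline = [0.25, 0.25, 0.25, 0.25]  # share of scores per bin at approval
this_month = [0.10, 0.20, 0.30, 0.40]
value = psi(baseline, this_month)
print(round(value, 3), drift_status(value))
```

With these sample bins PSI comes out at about 0.23, which lands in the "investigate" band.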

Technology Architecture and Vendor Selection Strategy

You already know where AI should help. Fraud, service, credit. Now you need a setup that fits your stack, risk rules, and budget. Architecture and vendor choices decide if AI feels smooth or turns into another stalled project.

Architecture Decision Framework

Architecture is the shape of your AI stack. It covers where models run, how they connect, and how data moves.

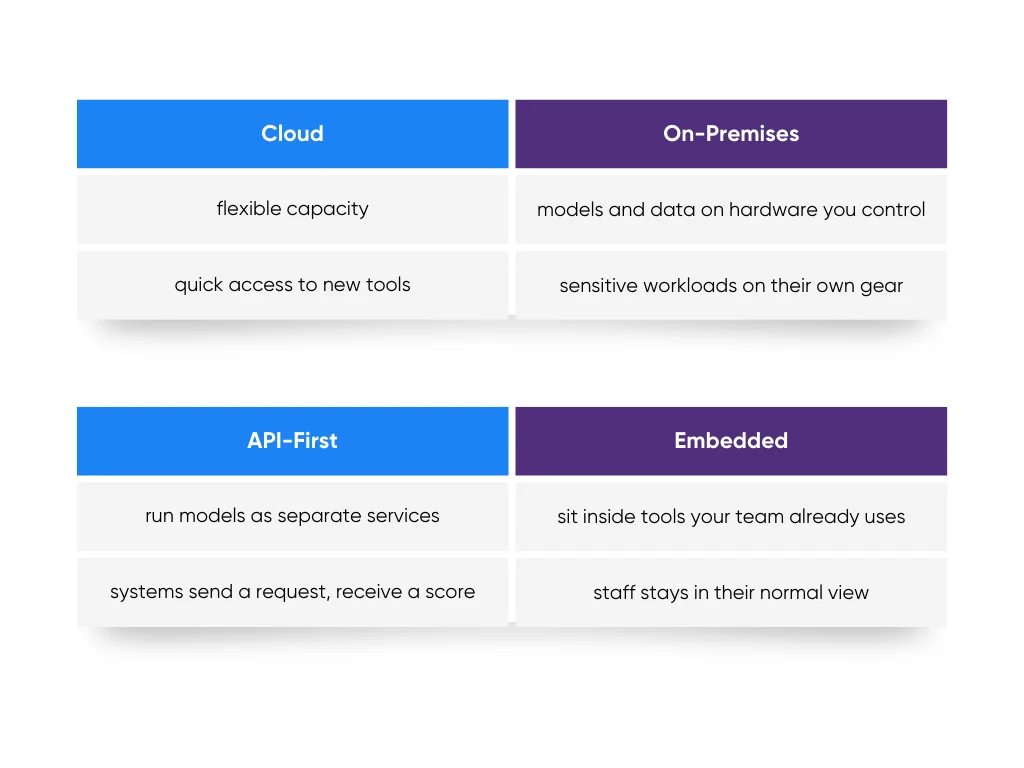

First decision: where models run. Cloud gives flexible capacity and quick access to new tools. On-premises keeps models and data on hardware you control. Many banks land on a mix, with sensitive workloads on their own gear and other work in the cloud.

Next decision: how AI links to your apps. API-first setups run models as separate services. Your systems send a request and receive a score or answer. Embedded setups sit inside tools your team already uses, like spreadsheet add-ins that read documents and match numbers while your staff stays in their normal view.

Data design sits under all of this. You need clear customer and account models, access rules that match your current policies, and logs for every AI call. MIT Sloan research also notes that finance leaders prefer partners with API-driven designs that plug into existing ecosystems instead of replacing them.
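In an API-first setup the model sits behind a small, typed interface, and every call leaves a log record. The sketch below uses plain Python dataclasses to show that shape; the field names, `fake_model`, and the `fraud-v1` version label are hypothetical, and a real service would sit behind HTTP or a message queue.

```python
import json
import logging
import time
import uuid
from dataclasses import asdict, dataclass

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("ai_audit")


@dataclass
class ScoreRequest:
    account_id: str
    amount: float
    channel: str


@dataclass
class ScoreResponse:
    request_id: str
    score: float
    model_version: str


def fake_model(req: ScoreRequest) -> float:
    # Hypothetical placeholder for the real model service behind the API.
    return min(req.amount / 10_000, 1.0)


def score(req: ScoreRequest, model_version: str = "fraud-v1") -> ScoreResponse:
    """Call the model as a separate service and write one audit record per call."""
    started = time.perf_counter()
    resp = ScoreResponse(str(uuid.uuid4()), fake_model(req), model_version)
    audit_log.info(json.dumps({
        "request_id": resp.request_id,
        "model_version": model_version,
        "input": asdict(req),  # mask or tokenize sensitive fields per your data policy
        "score": resp.score,
        "latency_ms": round((time.perf_counter() - started) * 1000, 2),
    }))
    return resp


print(score(ScoreRequest(account_id="acct-1", amount=2_500.0, channel="card")))
```

Logging the model version alongside each score lets risk and audit teams tie any decision back to the exact model that made it.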

Vendor Evaluation Methodology

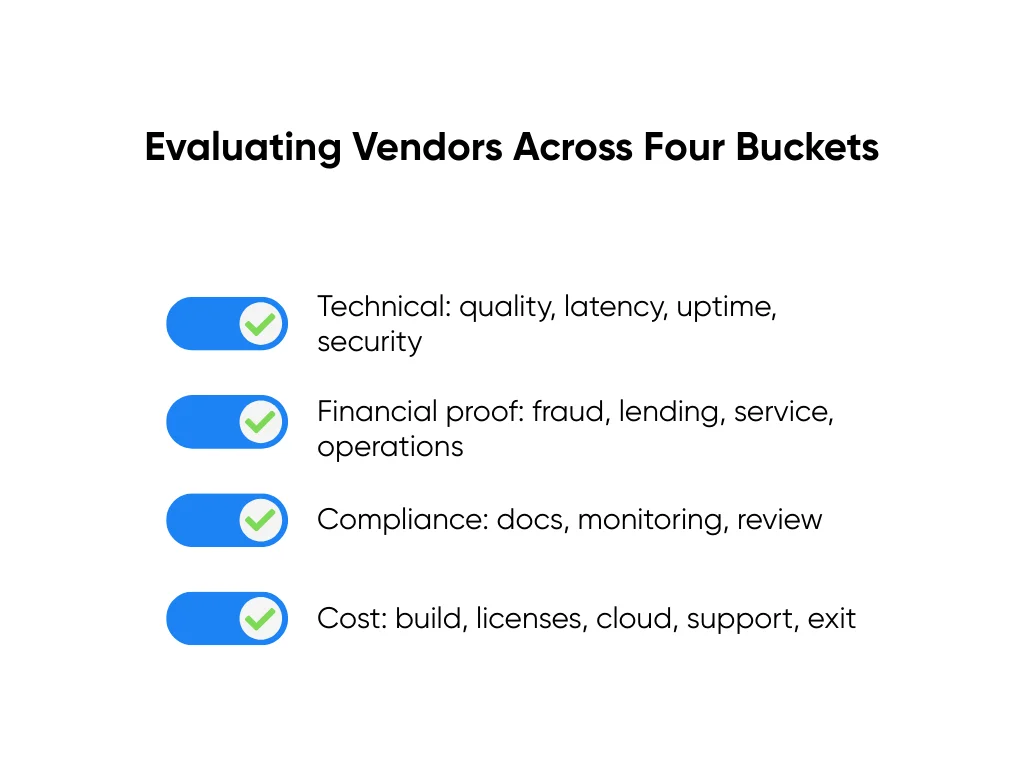

Vendor choice feels easier when you treat it like a scorecard. You set criteria, give each one a weight, and score every vendor the same way.

Useful buckets:

- Technical strength across model quality, latency, uptime, and security practices.

- Financial services proof, with projects in fraud, lending, service, or operations.

- Compliance support, with strong documentation, monitoring hooks, and help during reviews.

- Total cost, across build effort, licenses, cloud usage, support time, and exit costs.
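A minimal sketch of that scorecard, assuming each vendor gets a 1 to 5 rating per bucket (5 is best, so for total cost a 5 means the cheapest over the contract). The weights and ratings are hypothetical.

```python
# Illustrative weights for the four buckets above; they must sum to 1.
WEIGHTS = {"technical": 0.30, "fs_proof": 0.25, "compliance": 0.25, "total_cost": 0.20}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 ratings per bucket into one weighted score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[k] * scores[k] for k in weights)


vendors = {
    "Vendor A": {"technical": 4, "fs_proof": 3, "compliance": 5, "total_cost": 3},
    "Vendor B": {"technical": 5, "fs_proof": 4, "compliance": 3, "total_cost": 4},
}
ranked = sorted(vendors, key=lambda v: weighted_score(vendors[v]), reverse=True)
print(ranked)
```

With these sample numbers Vendor B scores 4.05 and Vendor A 3.80, so B ranks first. Shift enough weight toward compliance and the order can flip, which is why agreeing on weights before the demos matters.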

Our team uses this same structure in our finance AI development services for banks and fintechs. We design systems around your data rules and your 12- to 18-month roadmap, so your team receives tools it trusts and understands, not a black box that feels risky.

Integration Planning Strategy

Now let’s talk about how everything connects. A lean integration plan keeps risk, cost, and surprises under control.

A good plan should cover three tracks:

- Data mapping, where you map source fields to model inputs and define rules for gaps.

- Security setup, where you decide who calls each service, how you encrypt data, and how you log events.

- Testing and rollout, where you replay history in a sandbox, run a small live pilot, then expand volume with guardrails.
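The data-mapping track can start as a plain table: each model input, where it comes from, and what happens when it is missing. A sketch with hypothetical field names and gap rules, where "reject" means the event is not scored and the caller falls back to your existing rules.

```python
from typing import Any

# Hypothetical mapping: model input -> (source field, rule when the value is missing).
FIELD_MAP = {
    "txn_amount": ("core.amount_usd", "reject"),
    "merchant_mcc": ("card.mcc", "default:0000"),
    "device_id": ("digital.device", "default:unknown"),
}


def map_record(source: dict[str, Any]) -> dict[str, Any]:
    """Build model inputs from source fields, applying each field's gap rule."""
    inputs = {}
    for model_field, (source_field, gap_rule) in FIELD_MAP.items():
        value = source.get(source_field)
        if value is None:
            if gap_rule == "reject":
                # Raise so the caller can fall back to existing rules.
                raise ValueError(f"required field missing: {source_field}")
            value = gap_rule.split(":", 1)[1]  # rule format is "default:<value>"
        inputs[model_field] = value
    return inputs


print(map_record({"core.amount_usd": 42.5, "card.mcc": "5411"}))
```

Writing gap rules down per field, rather than burying them in model code, gives security and risk partners one artifact to review.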

At Aloa, we follow this approach when we work with financial clients. It keeps engineers, security, and risk partners aligned.

Key Takeaways

AI is already threaded through everyday finance work. Fraud checks, credit decisions, service queues, and routine back-office tasks all lean on faster, sharper judgment. Moving slowly on AI often leads to higher fraud losses, slower answers, and customers who feel more at home in someone else’s app.

You don't need a huge program on day one. Start with two or three clear use cases, measure where you stand today, and set targets for the next 12 to 18 months. From there, shape a lean architecture, clean data feeds, and a rollout playbook so each AI project follows the same route from idea to production.

This is where our team at Aloa can help. We work with banks, credit unions, and fintech teams to choose strong use cases, build prototypes, tune models on your data, and connect new services into current systems.

Take advantage of this wave of artificial intelligence for financial services and start a conversation with Aloa. We'll listen to your goals, review your stack, and design a roadmap for your unique circumstances.

FAQs

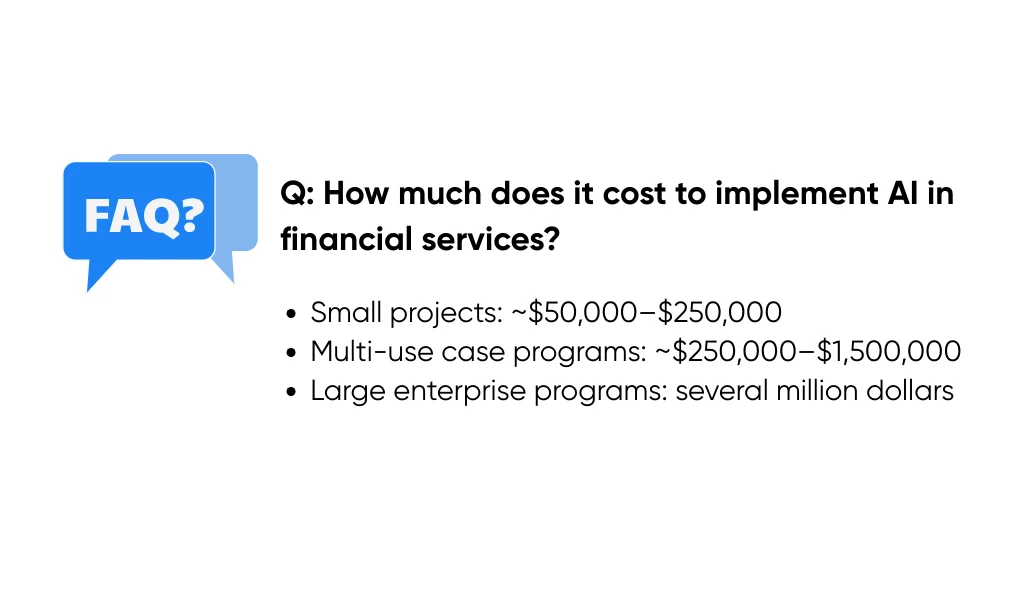

How much does it cost to implement AI in financial services?

It depends on what you’re building and how deep you go into your systems and risk rules. Smaller projects, like a service chatbot or basic fraud model, usually fall in the $50K–$150K range to design, build, and launch. Bigger, multi-use-case programs for regional or national banks can run $150K–$300K+, and full enterprise rollouts can reach into the millions.

If you’re aiming for a production AI system under $150K, Aloa’s hybrid model can be a good fit. It pairs US-based product and strategy with a vetted global dev team, which delivers enterprise-quality work without Big Four consulting rates.

How do financial services regulations impact AI implementation and ROI?

Rules shape design from day one and guide your risk management approach to AI. They drive which data you use, how you explain decisions, and how long you keep logs.

Good model docs, testing plans, and live monitoring lower review friction and reduce the risk of pausing a project late in the process. That extra planning effort protects ROI over time.

How do I measure intangible benefits of AI in financial services?

Tie “soft” gains to numbers you already track. Helpful signals include first response time, average handle time, transfer rate, customer satisfaction scores, and staff turnover in key teams.

Capture a baseline, then watch those numbers for a few months after launch. Trends tell you whether AI improves service and work life, even before revenue lines move.

How do I select the right AI vendor or platform for financial services?

Start with your top use cases and target metrics. Score vendors on technical fit, proof in finance, security and compliance support, and cost over several years. Ask for a small pilot with your data, not only a demo.

Aloa offers clear tiers, from flexible AI consulting to proof of concept, production builds, and larger multi-system programs. For details on ranges and what each tier includes, check Aloa’s AI pricing page.

How can smaller financial institutions compete with large banks in AI implementation?

Smaller teams win with focus. Pick one or two high-impact areas, like a support chatbot, card fraud checks, or automation for a specific compliance review. Many community banks and credit unions find strong ROI on investments in the $100,000 to $500,000 range when they keep scope sharp and run short pilots first.

Cloud tools, prebuilt models, and managed services reduce heavy lifting. AI handles routine checks and questions so your staff spends more time on complex needs and local relationships. For help shaping a right-sized plan, schedule a call with Aloa.