Fraud cost U.S. banks over $10 billion in 2023. In 2025, the attacks aren’t slowing down. AI-generated voices, synthetic identities, scripts that shift faster than your rules can keep up. And you need to stop it before it affects your customers.

That’s why we build AI-based fraud detection at Aloa. We work with financial institutions and fintechs that need AI to learn from their data, flag suspicious behavior as it happens, and adjust as tactics evolve.

In this guide, we'll walk through:

- What AI fraud detection really does

- The tools that catch fraud as it happens

- A rollout plan your team can actually follow

- Metrics that prove it’s working

Let’s get into it.

AI-Based Fraud Detection Fundamentals

AI-based fraud detection uses machine learning to scan transactions as they happen. It compares behavior against fraud patterns with anomaly detection in real time, flags anything unusual, and triggers a response in milliseconds. This helps banks and fintech teams stop identity theft, credit card fraud, transaction fraud, and other financial crime.

Legacy fraud tools rely on fixed rules: “If this, flag that.” Unfortunately, fraud behaviors are constantly changing. This is where AI can be helpful. It can learn from your data, whether it’s a synthetic ID, a login from a new device at 3 a.m., or a pattern your rules didn’t see coming. Let’s explore how exactly AI-based fraud detection systems work.

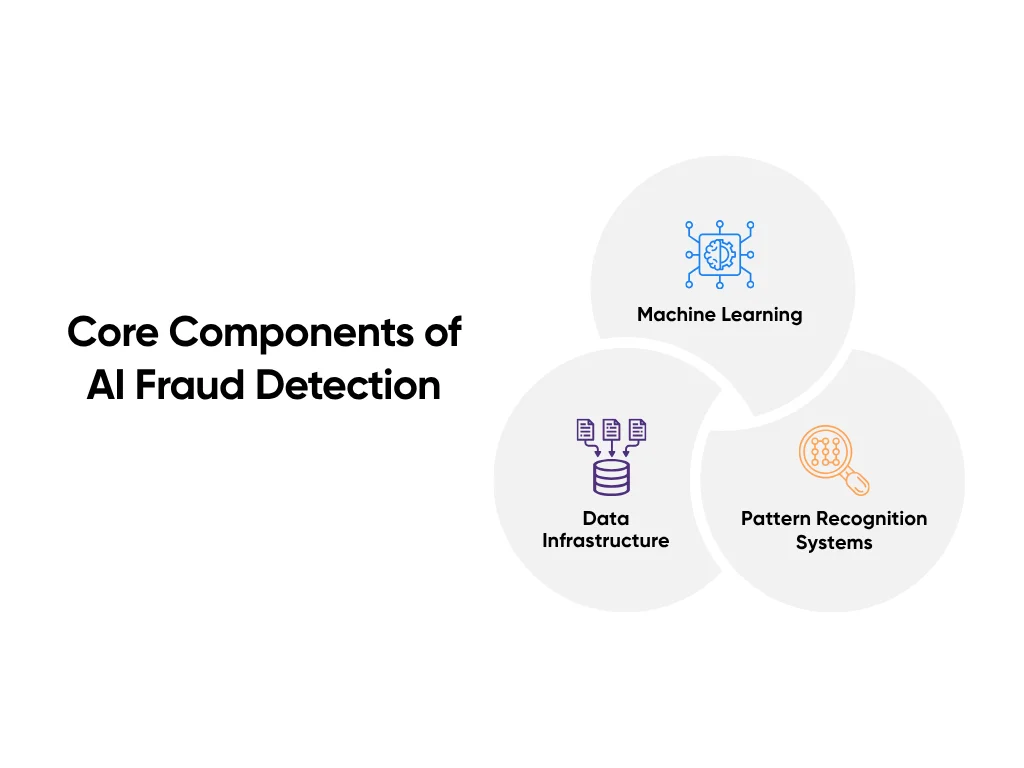

Core Components of AI Fraud Detection

AI-based detection systems flag risks fast and keep learning over time. Three components make that possible:

- Machine Learning Models: These detect fraudulent activities by learning from labeled data (known fraud), unlabeled behavior (unknown anomalies), and large datasets. Supervised, unsupervised, and deep learning each help score risk based on different patterns in your data.

- Data Infrastructure: Clean, current data is everything. These systems pull from devices, payments, logins, and more, processing the data in real time and structuring it for the models to work with.

- Pattern Recognition Systems: This is where behavioral analytics drives detection. The system compares activity to learned behaviors, like time of day, device type, or login habits, and flags anything outside the norm.

Together, these parts help AI find threats faster and with way less guesswork.

Traditional vs. AI-Based Detection

They might aim for the same goal, but they go about it in completely different ways. Here’s how they compare:

| Capability | Traditional Methods | AI-Based Detection |
| --- | --- | --- |
| Accuracy | Static, rule-based | Learns from data, improves over time |
| Response time | Minutes to hours (batch) | Milliseconds (real time) |
| Maintenance | Manual rule tuning | Continuous learning |
| Adaptability | Low, reactive | High, proactive |
| False positives | Often high | Reduced through behavioral context |

Unlike traditional systems, AI doesn’t wait for a rule. It sees what’s normal in your system, and when something’s off (even if it’s new), it flags it.

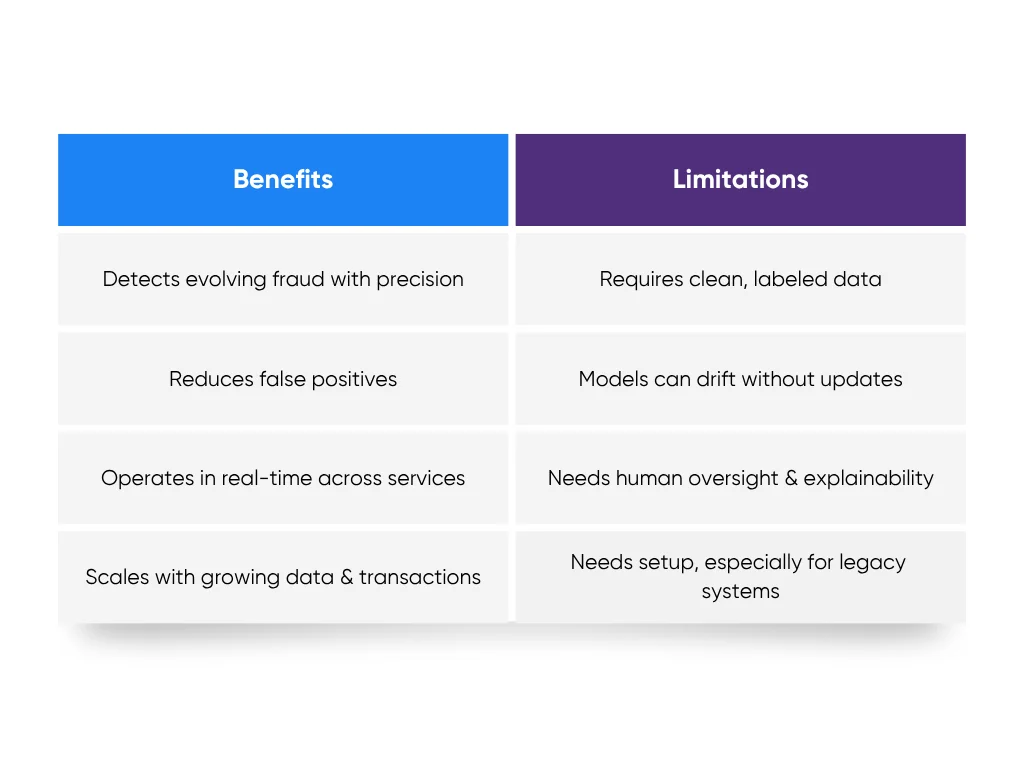

Key Benefits and Limitations

Here are some of the key benefits and limitations of AI-based fraud detection:

- Benefits:

  - Catches evolving fraud with better precision

  - Cuts down on false positives that block good users

  - Works in real time across banking services and e-commerce

  - Keeps pace as your data and transaction volume grow

- Limitations:

  - Needs clean, labeled data to train well

  - Models drift if you don’t keep them updated

  - Requires human oversight and explainability tools for responsible use

  - Takes upfront integration work, especially with legacy systems

What Are AI Detection Methods and Technologies?

AI-based fraud detection only works when the system knows what to look for, can flag it fast, and won’t need constant rule rewrites to stay sharp. That means using the right models, feeding them the right data, and making sure your decisions run in real time.

We broke this down across 7 real-time fraud detection systems in the banking sector. Let’s look at the core methods and technologies behind them:

Machine Learning Algorithms

Machine learning is how AI fraud systems learn from past behavior and improve over time. They don’t need someone to code every scenario; they spot patterns and adjust automatically.

Most systems combine several types of machine learning:

- Supervised Learning: You train it on labeled data (“fraud” vs. “not fraud”). The system learns what to watch for and flags similar cases moving forward. Think: reused stolen cards tied to past chargebacks.

- Unsupervised Learning: No labels needed; ideal for spotting new account fraud and suspicious activities. It identifies behavior that doesn’t fit established patterns, like a fake account that behaves nothing like your average user.

- Deep Learning: Useful for data analysis across different data points when behavior is complex or subtle, like how someone moves through an app, session flow, or multi-device usage.

Each approach catches different types of risk. Together, they give you broader coverage with fewer false positives.
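To make the unsupervised idea concrete, here is a minimal sketch that flags a transaction amount when it sits far from a user's own history. It uses a simple z-score as a stand-in for a real anomaly model, which is an assumption for illustration only. The names `anomaly_score` and `is_anomalous`, the threshold of 3, and the sample amounts are all hypothetical.

```python
from statistics import mean, stdev


def anomaly_score(history: list[float], amount: float) -> float:
    """Return how many standard deviations `amount` sits from the mean of `history`."""
    if len(history) < 2:
        return 0.0  # too little history to define "normal"
    spread = stdev(history)
    if spread == 0:
        # Every past amount was identical, so any different amount is unusual.
        return 0.0 if amount == history[0] else float("inf")
    return abs(amount - mean(history)) / spread


def is_anomalous(history: list[float], amount: float, threshold: float = 3.0) -> bool:
    """Flag amounts more than `threshold` standard deviations from the baseline."""
    return anomaly_score(history, amount) > threshold


past_amounts = [42.0, 38.5, 51.0, 45.2, 40.3]  # hypothetical purchase history
print(is_anomalous(past_amounts, 47.0))   # False: close to the usual range
print(is_anomalous(past_amounts, 900.0))  # True: far outside the baseline
```

No labels are involved here: the only input is what this user normally does. A production system would look at many signals at once, but the principle of scoring distance from a learned baseline is the same.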

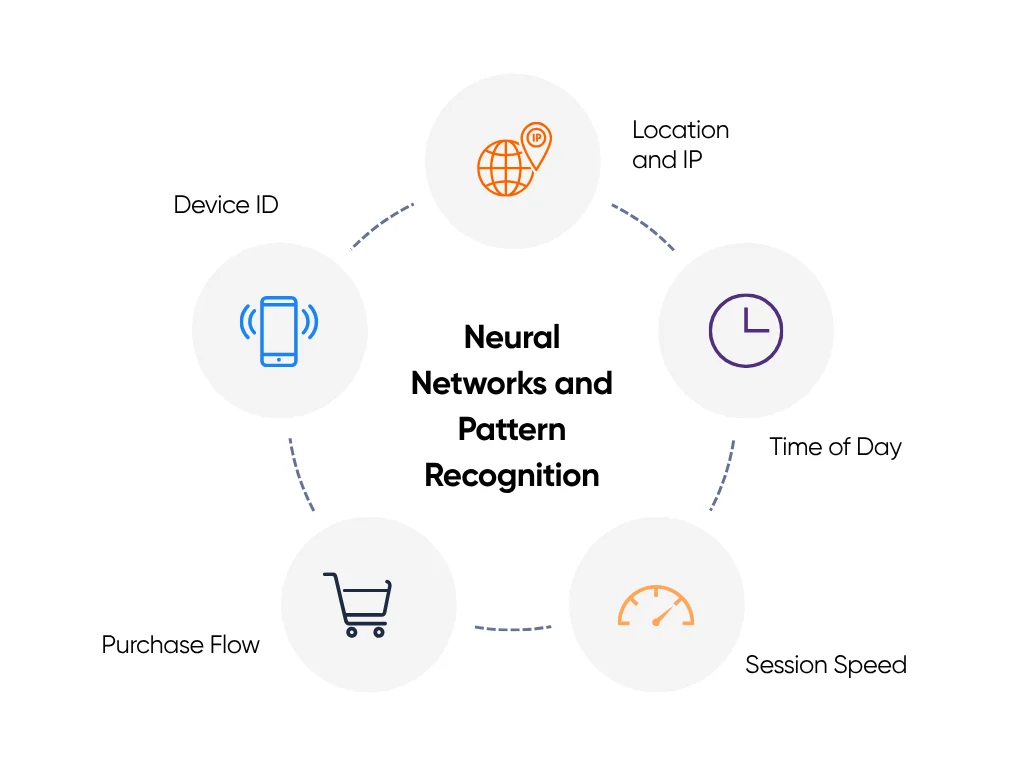

Neural Networks and Pattern Recognition

Neural networks process many signals at once:

- Device ID

- Location and IP

- Time of day

- Session speed

- Purchase flow

Instead of checking these individually, the system looks at them together to understand what “normal” looks like in your environment. When something steps outside that norm (say, a dormant account making multiple international transfers from a new phone), it raises a flag fast.

Some teams go deeper with graph neural networks, which connect the dots between accounts, devices, and transactions. That’s how coordinated fraud, like money laundering rings and social engineering campaigns, gets exposed.
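The "connect the dots" step can be sketched without any neural network at all. The example below is not a graph neural network. It is a plain connected-components pass (union-find) that groups accounts linked by shared devices, which is the kind of graph structure such models would learn from. The function name `find_rings`, the `min_size` cutoff, and the sample data are assumptions for illustration.

```python
from collections import defaultdict


def find_rings(account_devices: dict[str, set[str]], min_size: int = 3) -> list[set[str]]:
    """Group accounts that are linked, directly or transitively, by shared devices."""
    parent = {acct: acct for acct in account_devices}

    def find(x: str) -> str:
        # Walk up to the group's root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    first_owner: dict[str, str] = {}
    for acct, devices in account_devices.items():
        for dev in devices:
            if dev in first_owner:
                # Device already seen on another account: merge the two groups.
                parent[find(acct)] = find(first_owner[dev])
            else:
                first_owner[dev] = acct

    groups: dict[str, set[str]] = defaultdict(set)
    for acct in account_devices:
        groups[find(acct)].add(acct)
    return [members for members in groups.values() if len(members) >= min_size]


# Hypothetical data: a1 and a2 share d1, a2 and a3 share d2, a4 stands alone.
accounts = {"a1": {"d1"}, "a2": {"d1", "d2"}, "a3": {"d2"}, "a4": {"d9"}}
rings = find_rings(accounts)
print(rings)
```

Here `a1` and `a3` never share a device directly, yet they land in the same group through `a2`. That transitive link is what checking accounts one at a time tends to miss.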

Real-Time Analysis Capabilities

Real-time systems score transactions the moment they happen (before the purchase clears, before an account is accessed, before your team has to clean up).

For example:

- A login from a new country triggers MFA right away

- A saved card used outside typical spending behavior gets flagged before processing

- High-risk transactions hit your analysts with full context from multiple data sources

This is the difference between fraud detection and fraud prevention. Real-time decisions stop losses before they start.
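The examples above boil down to a decision function that runs before a transaction completes. Here is a minimal sketch, assuming a model has already produced a risk score between 0 and 1. The `Event` fields, the action names, and both thresholds are hypothetical and would need tuning against your own data.

```python
from dataclasses import dataclass


@dataclass
class Event:
    risk_score: float   # 0.0 (safe) to 1.0 (almost certainly fraud), from a model
    new_country: bool   # activity from a country not seen for this account


def decide(event: Event, review_at: float = 0.5, block_at: float = 0.9) -> str:
    """Map a scored event to an action before the transaction is processed."""
    if event.risk_score >= block_at:
        return "block"
    if event.new_country:
        return "step_up_mfa"       # ask for extra verification right away
    if event.risk_score >= review_at:
        return "send_to_analyst"   # route to a human with full context
    return "approve"


print(decide(Event(risk_score=0.10, new_country=False)))  # approve
print(decide(Event(risk_score=0.20, new_country=True)))   # step_up_mfa
print(decide(Event(risk_score=0.95, new_country=False)))  # block
```

Keeping the decision logic this small makes it cheap to run inline, so the expensive part (the model score) is the only thing that affects latency.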

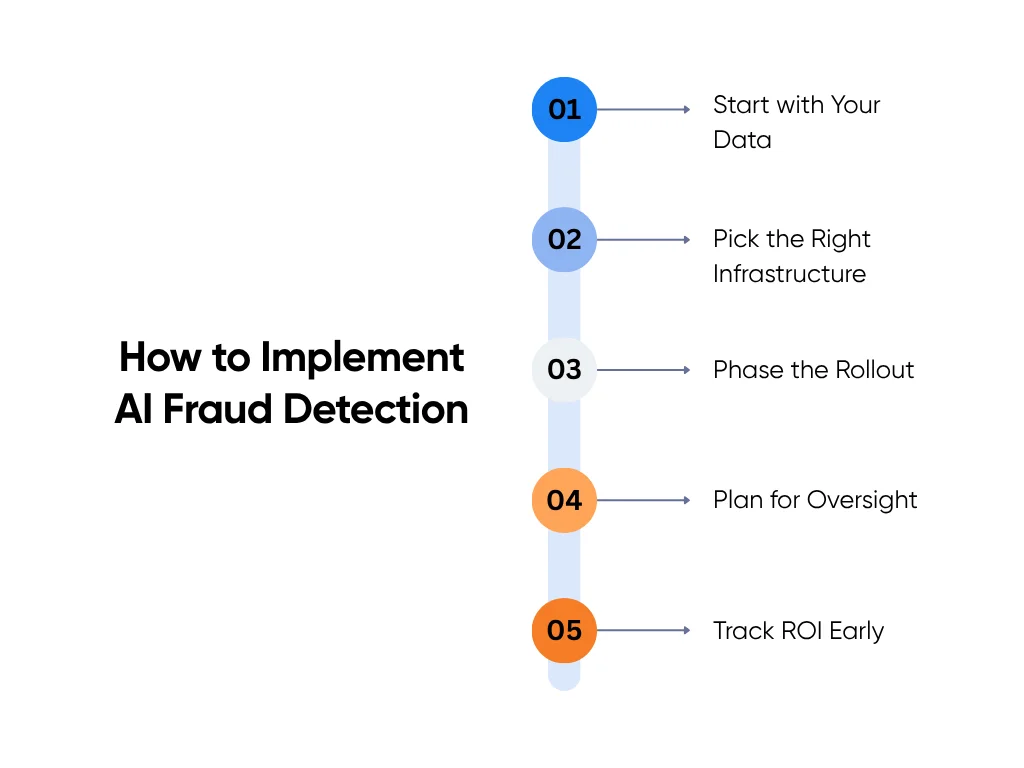

How to Implement AI Fraud Detection and Best Practices

AI only works when the setup does. You need clean data, systems that move in real time, and a rollout plan your team actually understands. Miss one, and the system will flag the wrong users or let the bad ones through.

Here’s how to roll it out without burning time or trust:

1. Start with Your Data

AI can’t detect fraud if it doesn’t know what “normal” looks like. Build that understanding first.

You’ll need:

- Confirmed Fraud Cases: Past chargebacks, blocked transactions, and verified scams.

- Behavioral Data: Logins, device fingerprints, transaction speed, and payment timing, plus the legitimate transactions that set baselines.

- Labeled Outcomes: Every record tagged as fraud, false positive, or legitimate.

Most rollouts fail here. Data sits in silos, definitions don’t match, and old logs contradict new ones. The model ends up training on noise. Fix it before modeling. Run a short alignment sprint. Bring fraud ops, engineering, and product together to define what counts as fraud and how each case is tagged. Merge duplicates, clean timestamp mismatches, and remove test transactions that distort accuracy.
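The cleanup steps above (merge duplicates, fix timestamp mismatches, remove test transactions) can be sketched in a few lines. This is an illustration under stated assumptions: the field names `txn_id`, `timestamp`, and `is_test` are hypothetical, and treating timestamps without a timezone as UTC is a choice you would need to confirm against your own logs.

```python
from datetime import datetime, timezone


def clean_records(records: list[dict]) -> list[dict]:
    """Drop test rows and duplicate IDs, and normalize timestamps to UTC."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        if rec.get("is_test"):
            continue  # test transactions distort accuracy
        if rec["txn_id"] in seen_ids:
            continue  # keep only the first copy of a duplicated transaction
        seen_ids.add(rec["txn_id"])
        ts = datetime.fromisoformat(rec["timestamp"])
        if ts.tzinfo is None:
            ts = ts.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
        cleaned.append({**rec, "timestamp": ts.astimezone(timezone.utc).isoformat()})
    return cleaned


raw = [
    {"txn_id": "t1", "timestamp": "2025-01-05T10:00:00+02:00", "label": "fraud"},
    {"txn_id": "t1", "timestamp": "2025-01-05T10:00:00+02:00", "label": "fraud"},
    {"txn_id": "t2", "timestamp": "2025-01-05T09:30:00", "label": "legitimate"},
    {"txn_id": "t3", "timestamp": "2025-01-05T09:45:00", "is_test": True},
]
cleaned = clean_records(raw)
print(len(cleaned))             # 2
print(cleaned[0]["timestamp"])  # 2025-01-05T08:00:00+00:00
```

Which duplicate to keep and how to resolve conflicting labels are exactly the questions the alignment sprint should settle before any of this is automated.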

Make sure everyone understands how AI results will plug into existing review flows. Buy-in is easier when teams see how their work connects.

2. Pick the Right Infrastructure

For the financial services industry, fraud detection isn’t batch work anymore. If your pipeline processes data hours late, the money’s already gone.

Set up:

- Live data streams from payments, devices, and identity systems.

- Model hosting that scales automatically during traffic spikes.

- Audit logs that record every model decision.

Legacy systems usually choke here. Batch jobs fail, data queues lag, and alerts show up after the fact. So, start small: stabilize your live ingestion and make sure every prediction can be traced. Real-time visibility beats any “AI-ready” label on paper.
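For the audit-log piece, one workable shape is a single JSON line per model decision. The sketch below is a minimal illustration, not a prescribed schema: the field names, the `log_decision` helper, and the model version string are all assumptions, and an in-memory buffer stands in for whatever append-only store you use.

```python
import io
import json
from datetime import datetime, timezone


def log_decision(stream, txn_id: str, model_version: str, score: float,
                 action: str, top_factors: list[str]) -> dict:
    """Append one model decision to `stream` as a single JSON line."""
    entry = {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "txn_id": txn_id,
        "model_version": model_version,
        "score": score,
        "action": action,
        "top_factors": top_factors,
    }
    stream.write(json.dumps(entry) + "\n")
    return entry


audit_log = io.StringIO()  # stand-in for an append-only file or log service
log_decision(audit_log, "txn-001", "fraud-model-v3", 0.93, "block",
             ["new_device", "amount_zscore"])
print(audit_log.getvalue())
```

Recording the model version alongside the score matters once you start retraining, because the same transaction can score differently under different versions.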

For a look at how financial teams have built AI systems that scale securely and efficiently, check out these 9 powerful applications of AI in finance. The list is packed with use cases that show what solid infrastructure looks like in action.

3. Phase the Rollout

AI earns trust; it doesn’t start with it. Don’t flip the switch until it’s proven in your environment.

Run the model in shadow mode: it scores transactions silently while your rules still decide. Measure precision, false positives, and latency. When metrics match or outperform your current setup, shift traffic gradually.
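A shadow-mode comparison can be as simple as logging both verdicts for each transaction and scoring them once the true outcome is known. The sketch below assumes each event is a tuple of (rule flagged, model flagged, confirmed fraud). The function name `shadow_report` and the sample events are hypothetical.

```python
def shadow_report(events: list[tuple[bool, bool, bool]]) -> dict:
    """Compare rule and model verdicts on the same traffic.

    Each event is (rule_flagged, model_flagged, is_fraud). Only the rule
    verdict was acted on; the model verdict was recorded silently.
    """
    def precision(flag_index: int):
        flagged = [e for e in events if e[flag_index]]
        if not flagged:
            return None  # nothing flagged, so precision is undefined
        return sum(1 for e in flagged if e[2]) / len(flagged)

    return {
        "rule_precision": precision(0),
        "model_precision": precision(1),
        "disagreements": sum(1 for rule, model, _ in events if rule != model),
    }


events = [
    (True, True, True),     # both caught real fraud
    (True, False, False),   # rule blocked a good customer, model would not have
    (False, True, True),    # model caught fraud the rules missed
    (False, False, False),  # both let a good transaction through
    (True, True, False),    # both were wrong
]
report = shadow_report(events)
print(report)
```

The disagreement rows are the ones worth sending to analysts first, since they show exactly where switching to the model would change outcomes.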

Go live too early, and you’ll spend the next sprint explaining to ops why good customers got blocked. Keep analysts reviewing output and feeding corrections back into training. Move decisions once you’ve seen consistent, real-world wins.

4. Plan for Oversight

AI performance fades without updates and human oversight. Fraudsters keep changing tactics, so a model that was accurate at launch drifts out of date unless you retrain it.

Maintain:

- Feedback loops so analysts can tag correct and missed calls.

- Retraining cycles that refresh the model with new data.

- Explainability tools to show which factors influenced each decision and the potential risks considered by the model.

You don’t need full interpretability; just traceability. When a regulator or customer asks “why,” you should be able to show the trail.
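As an illustration of traceability, the sketch below ranks which inputs pushed a score up the most. It assumes a simple linear score (weight times feature value), which is a deliberate simplification: real models usually need a dedicated explainability method. The weights, feature names, and the `explain` helper are hypothetical.

```python
def explain(weights: dict[str, float], features: dict[str, float],
            top_n: int = 2) -> list[tuple[str, float]]:
    """Return the `top_n` features with the largest contribution to a linear score."""
    contributions = {
        name: weight * features.get(name, 0.0) for name, weight in weights.items()
    }
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:top_n]


# Hypothetical model weights and one transaction's feature values.
weights = {"new_device": 1.5, "amount_zscore": 0.75, "night_login": 0.5}
features = {"new_device": 1.0, "amount_zscore": 4.0, "night_login": 0.0}

trail = explain(weights, features)
print(trail)  # [('amount_zscore', 3.0), ('new_device', 1.5)]
```

Storing this short list with each decision is often enough to answer the "why" question without exposing the full model.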

5. Track ROI Early

AI fraud detection takes upfront investment, and it pays off in stronger risk management and financial crime prevention when you measure the right things. Most of the investment goes into data cleanup, infrastructure tuning, and model testing.

Track:

- Chargebacks avoided

- Manual review hours saved

- Approval rates improved

Baseline these before rollout, then track continuously. Teams that measure from day one see it pay off faster. A 10–15% drop in false positives is a solid start, but successful systems often see 40–80% reductions once tuned. Feedzai reports clients reaching an average ROI of 436% after implementation. McKinsey adds that companies with clear measurement frameworks not only see faster returns but also sustain them as fraud tactics evolve.
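The ROI arithmetic itself is simple once the three tracked items are expressed in dollars. The sketch below uses the standard formula, (benefit minus cost) divided by cost. Every figure in it is a made-up placeholder, and the hourly review cost is an assumption you would replace with your own.

```python
def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a percentage: (benefit - cost) / cost * 100."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) * 100 / total_cost


# Hypothetical annual figures, for illustration only.
chargebacks_avoided = 120_000.0
review_hours_saved = 400
cost_per_review_hour = 45.0          # assumed loaded analyst cost
approval_lift_revenue = 22_000.0     # revenue from good orders no longer blocked
program_cost = 100_000.0             # data cleanup, infrastructure, model testing

benefit = (chargebacks_avoided
           + review_hours_saved * cost_per_review_hour
           + approval_lift_revenue)
print(benefit)                             # 160000.0
print(roi_percent(benefit, program_cost))  # 60.0
```

Capturing the baseline before rollout is what makes the "avoided" and "saved" numbers defensible later.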

Document the results early to support adoption of AI across teams and budgets.

What to Watch For

You don’t need to reinvent your stack. Get the basics right, keep your team in sync, and let the AI do the heavy lifting for once. That’s the kind of work we do at Aloa: helping teams build fraud detection that fits the systems they already trust.

How to Measure Success and ROI

Once AI is live, it’s not about how many alerts it fires off. It’s whether it catches real fraud, avoids false positives, and saves your team time. If it can’t do all three, it’s not working.

Here’s how to measure what matters and prove the ROI:

1. Catch Rate vs. False Alarms

Start with the core fraud metrics:

- True Positives: Confirmed fraud the AI caught

- False Positives: Legit users flagged by mistake

- False Negatives: Fraud that slipped through

If your false positives are high, your team ends up wasting time on clean traffic, or worse, you block good customers and frustrate them enough to leave. That’s not just a detection problem; it’s a UX, ops, and brand risk bundled into one.

Top-performing systems consistently hit 95%+ fraud detection accuracy and keep false positives under 2%. If you're far off, dig into your training data, labels, or feedback loop. Something’s off in the foundation.
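These three counts, plus the legitimate transactions that were correctly approved (true negatives), give you the standard rates. The sketch below reads "detection accuracy" as recall (the share of fraud caught) and "false positives" as the false positive rate. That reading is an assumption, since the terms are used loosely across the industry. The counts are hypothetical.

```python
def fraud_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Compute recall, precision, and false positive rate from confusion counts."""
    def ratio(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else 0.0

    return {
        "recall": ratio(tp, tp + fn),               # share of fraud that was caught
        "precision": ratio(tp, tp + fp),            # share of alerts that were fraud
        "false_positive_rate": ratio(fp, fp + tn),  # share of good traffic flagged
    }


# Hypothetical month: 100 fraud cases among 10,000 transactions.
metrics = fraud_metrics(tp=95, fp=150, fn=5, tn=9750)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this made-up month the system catches 95% of fraud and flags about 1.5% of good traffic, yet fewer than half of its alerts are real fraud. Because fraud is rare, it is worth tracking precision alongside the other two so analyst workload does not get overlooked.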

2. Operational Load

AI should improve operational efficiency, not bury the team in alerts.

Track:

- Average case resolution time

- Alerts per analyst

- Analyst intervention rate

If case times stay flat or spike, your AI might be flagging too much noise, or your integration needs work. Either way, don’t wait for burnout to tell you something’s broken.

3. Business Impact

This is where the real value shows.

Track the before-and-after:

- Chargebacks avoided

- Financial losses reduced

- Manual review hours saved

- Approval rate lift

Tuned systems have cut fraud losses by 40–80%, and some teams have seen up to 436% ROI once models stabilize and feedback loops tighten.

Even small efficiency gains, like a 10% drop in false positives, can save thousands per month in manual review.

4. Benchmark Quarterly

Don’t wait a year to course-correct. Set a quarterly check-in and compare:

- Detection accuracy

- False positive rate

- Time to score each transaction

- Cost per fraud blocked

Leading banks using real-time systems aim for <100ms response time and <$0.50 per detection. With the right setup, those benchmarks are doable.
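Both benchmarks are straightforward to compute from logs. The sketch below checks a 95th-percentile scoring latency (using the nearest-rank method) and a cost per fraud blocked against the targets mentioned above. Choosing the 95th percentile rather than the average is an assumption, and the latency samples and cost figures are hypothetical.

```python
def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceiling of pct * n / 100
    return ordered[rank - 1]


def cost_per_fraud_blocked(total_cost: float, frauds_blocked: int) -> float:
    """Total running cost divided by the number of fraud attempts stopped."""
    if frauds_blocked <= 0:
        raise ValueError("frauds_blocked must be positive")
    return total_cost / frauds_blocked


# Hypothetical scoring latencies in milliseconds for 20 transactions.
latencies_ms = [20, 25, 30, 35, 40, 45, 50, 55, 60, 65,
                70, 75, 80, 85, 90, 95, 98, 99, 120, 400]

p95 = percentile(latencies_ms, 95)
unit_cost = cost_per_fraud_blocked(total_cost=4000.0, frauds_blocked=10_000)
print(p95, p95 < 100)             # 120 False: misses the 100 ms target
print(unit_cost, unit_cost < 0.50)  # 0.4 True: under the $0.50 target
```

In this sample the typical request is fast, but the slow tail pushes the 95th percentile past 100 ms. An average would have hidden that, which is why a percentile is the safer number to benchmark each quarter.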

At Aloa, we’ve built similar systems outside of banking, too. The same AI models help insurance companies catch fraud earlier and speed up claims review. See how AI is changing fraud prevention in insurance for concrete examples of how teams measure success from day one.

Key Takeaways

AI can help you catch more fraud, waste less time, and stop tuning rules every Friday. But the longer you wait to implement it, the further ahead the fraudsters get.

You’ve got a roadmap now: what to build, how to test it, and where it breaks if you skip the basics. That’s most of the battle.

Start by auditing past fraud cases, mapping how AI fits your current flow, and running a shadow-mode pilot before anything goes live. That alone can save months of backtracking.

If you want to build AI-based fraud detection right the first time (and skip the duct tape), Aloa's got you. Let’s talk.

FAQs

How effective is AI-based fraud detection compared to traditional rule-based systems?

It’s not close. Rule-based systems rely on static “if-this-then-that” logic. AI adapts in real time. Detection accuracy jumps from 70–80% to 90–97% with machine learning. False positives drop from 10–20% to under 2%. AI catches new fraud patterns in hours; rules take weeks to rewrite. For most orgs, this means 50–80% lower financial losses from fraudulent transactions and 60–90% fewer false alerts in year one. ROI? Usually shows up within 12–24 months.

How quickly can AI fraud detection systems be implemented and show results?

It depends on how complex your setup is. Lightweight cloud tools go live in 2–4 months. Larger enterprise rollouts (with system integration, training, testing) take 6–12 months. Results often show up in the first 30–60 days. Full tuning takes 6–12 months, but you’ll likely see a meaningful bump in detection by the 90-day mark.

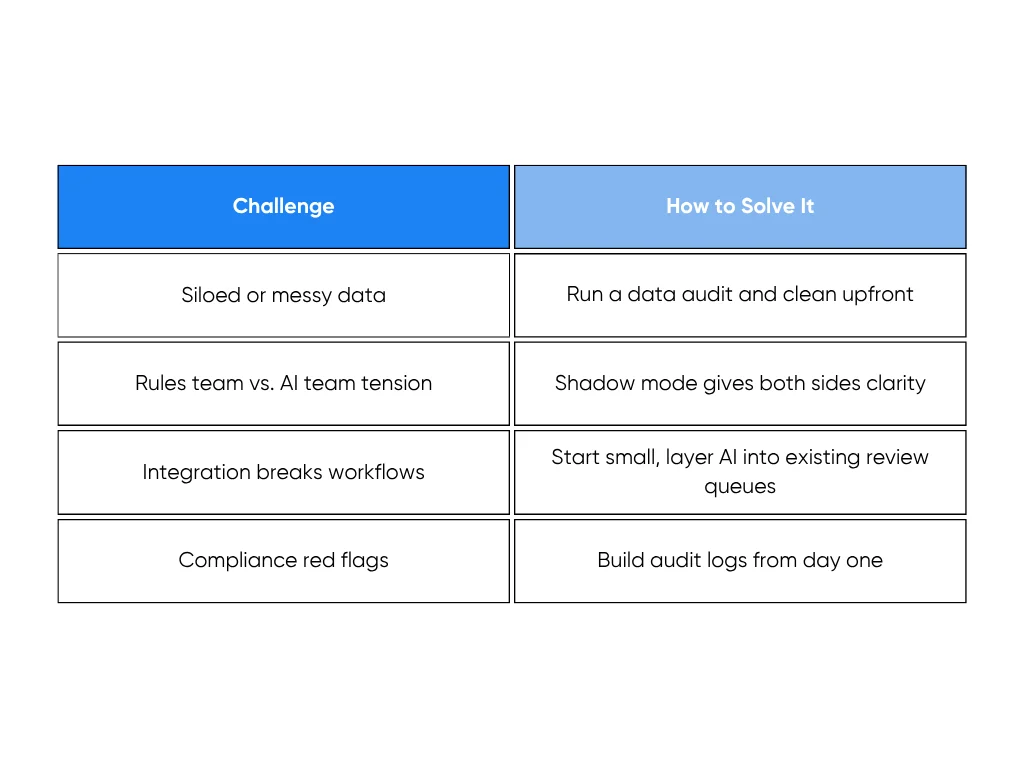

What are the main challenges in implementing AI-based fraud detection?

It’s not the AI; it’s your data and systems. Most teams hit roadblocks with poor data quality, disconnected systems, legacy tech, and data privacy constraints. Add compliance, explainability, and internal buy-in to the mix, and it’s clear that success depends on strong planning, clean feedback loops, and a rollout that keeps your ops team in the loop.

How do we balance fraud prevention with customer experience?

Use risk scoring to apply friction where it matters. Approve low-risk transactions, verify the medium ones, block only clear payment fraud. Watch customer satisfaction metrics alongside fraud stats. If good users are getting flagged, your thresholds are too tight. 98%+ legit approval should be the baseline.

Should we build AI fraud detection in-house or use external solutions?

Build it yourself only if you’ve got the time, talent, and budget to wait 12–24 months. Most teams don’t. External solutions get you started in weeks, not years, and come with the latest detection tooling, such as large language models for fraud investigations. Hybrid setups (external core, internal tuning) offer a smart middle ground. Unless you’re a big bank with custom needs, build-vs-buy is usually a false debate.