Welcome! I'm Chris from Aloa, and this is the first post in a series where we're building an AI medical transcription app from scratch. If you're interested in healthcare AI or just want to see some practical applications of technology, this series is for you.

Today we're focusing on the transcription engine, which is the core technology that converts a doctor's dictation into text.

The Challenge: Medical Dictations Are HARD

To give you a sense of the challenges, I've included a sample dictation in the video (at 0:27). A lot of people don't realize how hard some of this is to understand, even for a trained transcriptionist. The audio quality, medical terminology, and speaking patterns make it incredibly difficult.

If you listen to the sample (0:27-0:31), you'll hear something that sounds like: "The latter helped his mood but his sleepiness of the present period. He's been on Alexa..."

Try to figure out what the doctor is actually saying - I'd be very impressed if you get it right on the first try! At 0:41, you can see a side-by-side comparison of what various AI models transcribed from this same audio clip.

Why We're Building This

We're making this blog series and sharing what we're learning so other companies and organizations can hopefully take what we've learned and implement it in their own environments.

A little context: we were brought on by a medical practice to build a custom tool that would enhance the workflow of their existing transcriptionists. The medical practice wanted this tool to do a first pass at the transcription, which would then be handed to the transcriptionist so they can make further edits and corrections. Hopefully, this tool would not only save time but also reduce the cognitive load and fatigue of the team.

Quick Demo Overview

Before diving into the specifics of the transcription, here's how the tool works: You drag the transcription file into the tool, it runs through a series of steps, and the final output is the first pass at a transcription. We'll cover other steps like medication grading in future posts, but for now, we're focusing on how we tackled the transcription part.

The Research Phase: Finding the Right Model

To be honest, implementing the transcription was really easy. The bulk of the work was doing research and trying to figure out which model to use. We needed something that was:

- Very accurate, especially with medical terminology

- Cost-effective at scale

- Privacy-focused (specifically HIPAA compliant)

If you're not familiar with HIPAA, it's a privacy regulation that requires strict protection of patient information. In plain English, it means we need really strong encryption and a lot of mechanisms to make sure we're handling data properly. A non-negotiable for the project was that whatever model we chose had to be HIPAA compliant.

The Benchmark Test

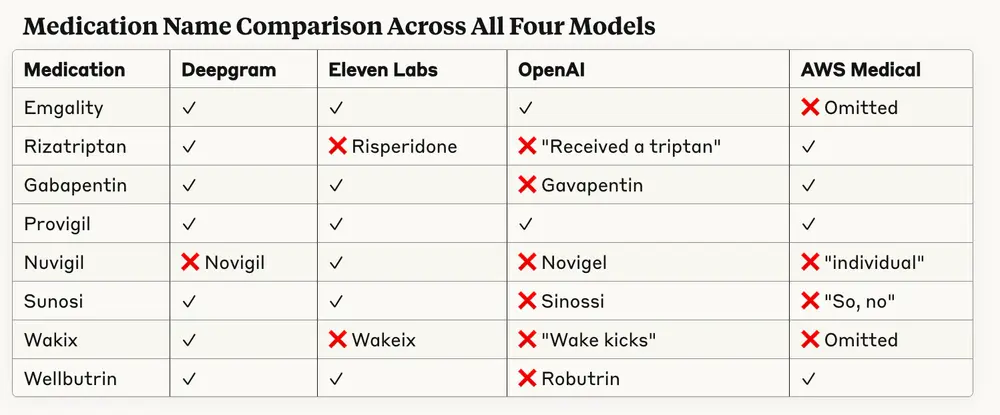

We ended up doing a series of benchmarks to test the models. One test in particular really showcased the differences - a single test file that had a bunch of medications thrown in to test how the models would handle specific medical terminology.

Let's go through the models we chose and how they performed:

1. Eleven Labs Scribe

Eleven Labs isn't usually known for speech-to-text - they're actually more known for text-to-speech capabilities. But we wanted to give them a shot because at the time of building, they recently came out with this model which was HIPAA compliant and they claimed had really good accuracy with medical terms.

Results: It got a lot of the correct terms like Emgality and Wellbutrin, but it made some mistakes like confusing Rizatriptan with Risperidone - which is extremely problematic because these are entirely different medications with different effects.

2. GPT-4 Transcribe

This is OpenAI's latest and most advanced speech-to-text model, actually outperforming their previous model Whisper, which a lot of medical applications use.

Results: GPT-4 Transcribe did get a couple terms like Emgality and Provigil correct, but tons of mistakes. It only got about 25% correct, which is definitely not good enough. But honestly, we weren't that surprised because this model wasn't specifically designed for medical transcription - it's more of a general transcription model.

3. AWS Medical Transcribe

We chose to look at this option because we were already using a bunch of AWS infrastructure for the backend. When you're already using AWS, it's just very convenient to use another AWS service that plays really well with the ecosystem. A lot of medical apps do use AWS, so I believe this is a pretty popular model in the medical space.

Results: It performed a little bit better than the OpenAI model, but honestly not by much. For a model that's supposed to be focused on medicine, this was a little disappointing to see.

4. Deepgram

Deepgram was the most interesting to us because at the time of recording, they were the latest model to come out, and we had heard very good things from people.

Results: Looking at performance, it actually lived up to expectations. In this test specifically, it only got one medication wrong, which is very good. Among all our tests, it scored about 75% accuracy, which compared to the other models is actually pretty high. The only mistake it really made was misspelling "Nuvigil" as "Novigil," which honestly isn't too bad.

The Most Concerning Finding

I want to call out one more time that the most concerning thing we saw was Eleven Labs confusing Rizatriptan with Risperidone. Rizatriptan is a medication for migraines, and Risperidone is an antipsychotic used to treat schizophrenia and bipolar disorder. That could have some pretty serious consequences if it made it into the final transcription.

Testing Challenging Dictations

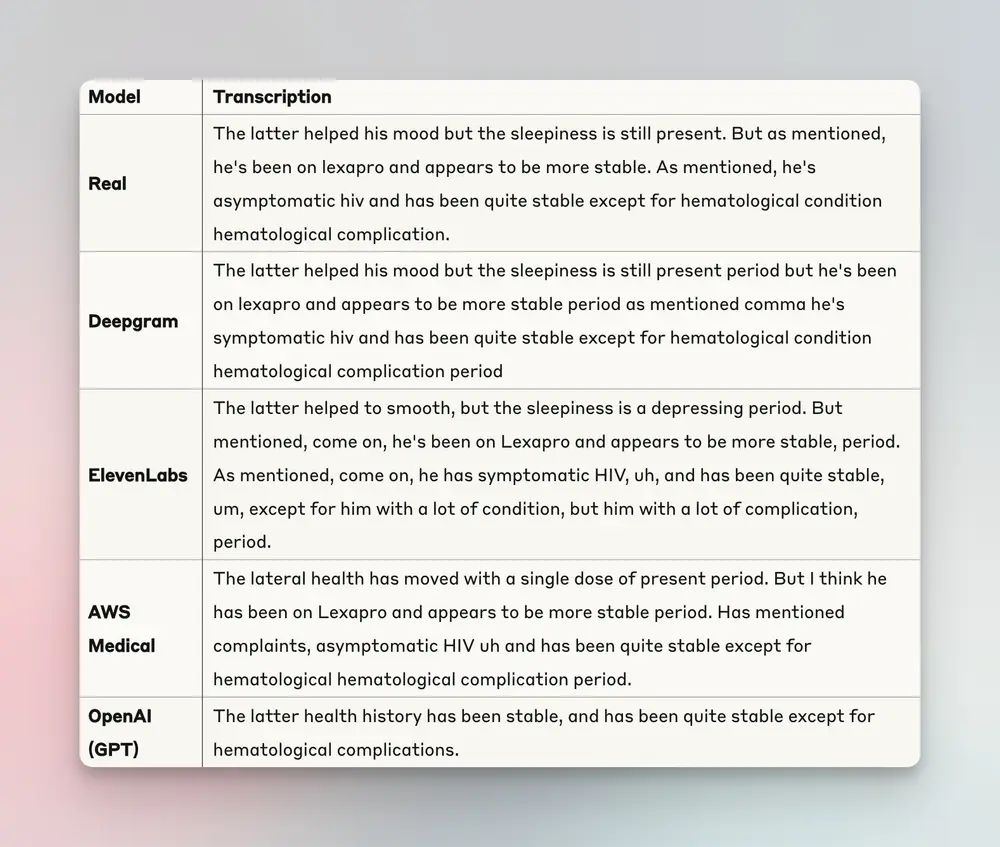

We wanted to test how the models handled particularly challenging dictations, which are actually very common depending on what dictation device the doctor is using. At 4:43, you can hear a sample clip and see how each model performed.

The transcription attempts included phrases like: "The latter health is smooth but the sleepiness of the present period has been less pro and appears to be more stable period as mentioned as symptomatic HIV uh and has been quite stable um except for hematological hematological complication period"

Most of the models got the base of the transcription except for OpenAI, which for some reason just didn't transcribe 90% of it. But the one that got the closest was Deepgram.

Here's an interesting exercise: If you have access to the video, listen to the dictation at 4:43 without looking at the transcriptions. Then listen again while reading the transcriptions on screen. You'll notice it's much easier to follow along with the first pass in front of you. Just imagine how much better a transcriptionist can work if they had a tool like this to do a first pass

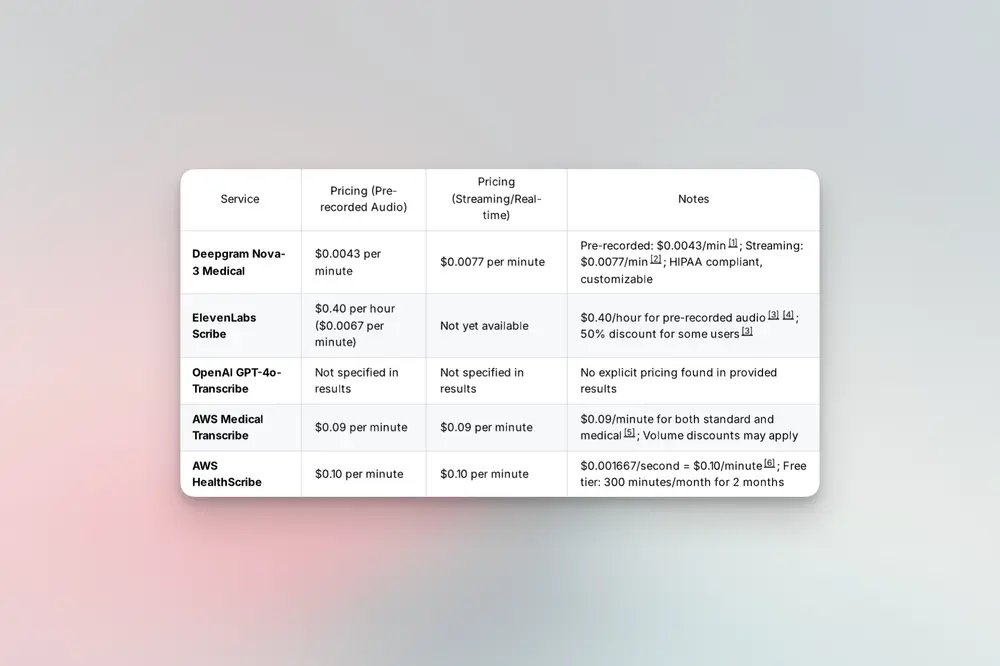

Cost Analysis: The Surprising Winner

While accuracy is crucial, let's look at the cost of the models. What surprised us most was that the most accurate model actually ended up being the cheapest among all of them.

The differences might seem like just a couple of cents per minute, but when you do this at a bigger scale, it actually adds up. For example:

- Transcribing 100 hours of dictation with Deepgram would cost about $26

- The same 100 hours with AWS Medical Transcribe would cost $600

A pretty big difference! If you're a hospital group with multiple doctors, this could be thousands of dollars per month in difference.

Our Choice: Deepgram

In terms of what we ended up choosing, I think it's pretty obvious. We went with Deepgram because it was the most accurate model AND the most cost-effective. This was a no-brainer for us.

Implementation: The Easy Part

Like I said in the beginning, implementation was actually really simple. Deepgram has a very well-documented API that was easy to use. It literally is just a couple of lines of code to implement this.

At a high level, here's how it works:

1. User uploads a dictation file to our frontend

2. It sends to our HIPAA-compliant and secure backend

3. The backend sends it to Deepgram for transcription (also HIPAA compliant)

4. Deepgram sends the transcription back to our backend

5. We do whatever further processing we need to get to the final format

Very few lines of code and really just took a couple minutes to implement.

What's Next?

That was the journey of us picking our transcription model and implementing it into the app. This is just one step in a whole line of steps that we went through to build this app. We'll cover future steps like building a HIPAA-compliant backend and building AI agents in future posts.

Again, we're sharing these resources so other companies can learn and hopefully bring it to their own environments. If you're interested in learning more, definitely check out our other content. There's a ton of free resources and also a really good newsletter which keeps you up to date on what's the latest in AI.

If you want to chat with me or someone else on my team to talk about how to build some of this stuff for your own businesses, feel free to reach out.

If you're building in the AI space, I hope this post was helpful for you. Thanks so much for reading, and I'll see you in the next post!