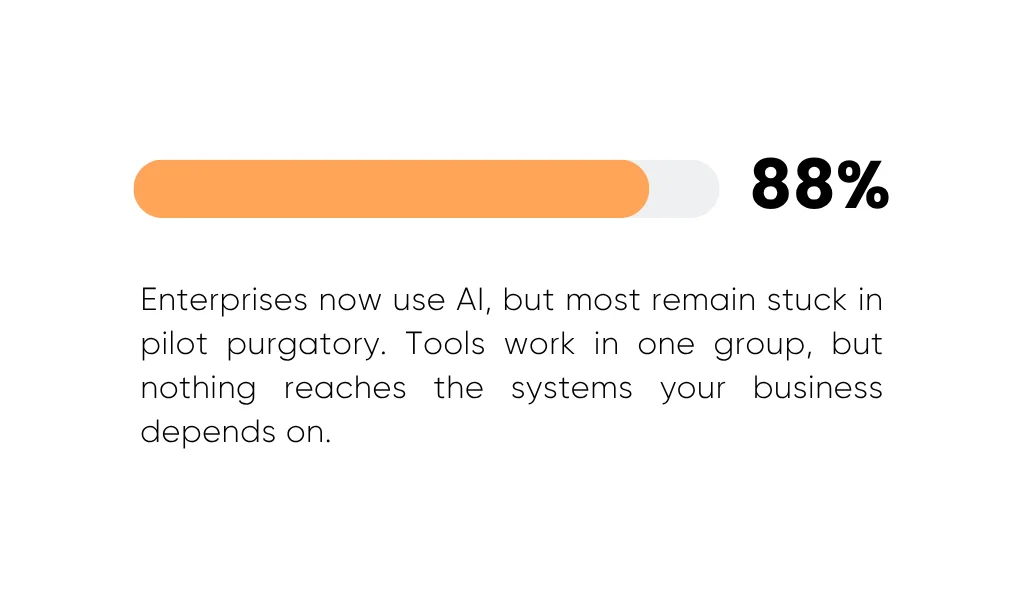

About 88% of large companies use artificial intelligence in their business operations, but only about 30% have managed to scale it. For business leaders like you, that gap hits hard. You probably already face pressure to show progress fast, and the legacy systems you work with make that harder than expected.

We built Aloa to help teams turn early AI ideas into reality. We start by learning what you’re trying to fix, then test your ideas with quick prototypes. From there, you’ll get AI features that integrate with your existing tools and workflows.

In this guide, we’ll look at generative AI stats that shape enterprise adoption trends in 2025. You'll see:

- How adoption stacks up against readiness

- Why scale across teams stalls

- Where governance needs more attention

- Steps for your next quarter

You'll walk away with numbers you can use and a plan you can act on.

TL;DR

- 88% of enterprises report using AI, but only about one-third can scale it. This means that most teams are still stuck in pilot mode.

- 82% of executives use AI each week in everyday planning and communication.

- Code generation is the fastest-growing use case (growing about 53%) because it speeds up development and cuts mistakes.

- 72% of leaders now track ROI: time saved, costs reduced, and revenue impact.

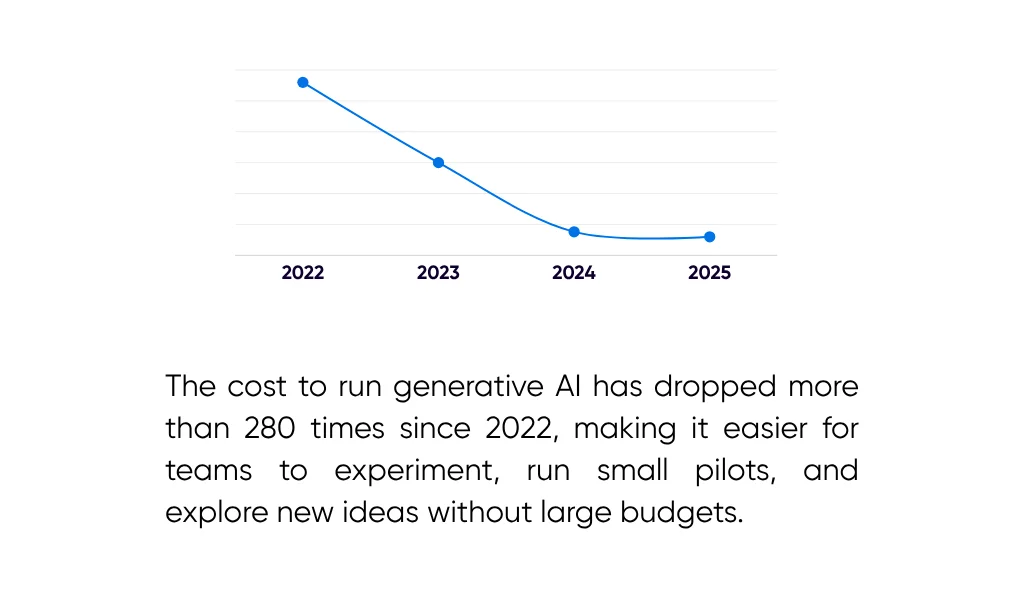

- Inference costs have dropped more than 280-fold since 2022, making it easier for teams to test ideas and use smaller, focused models.

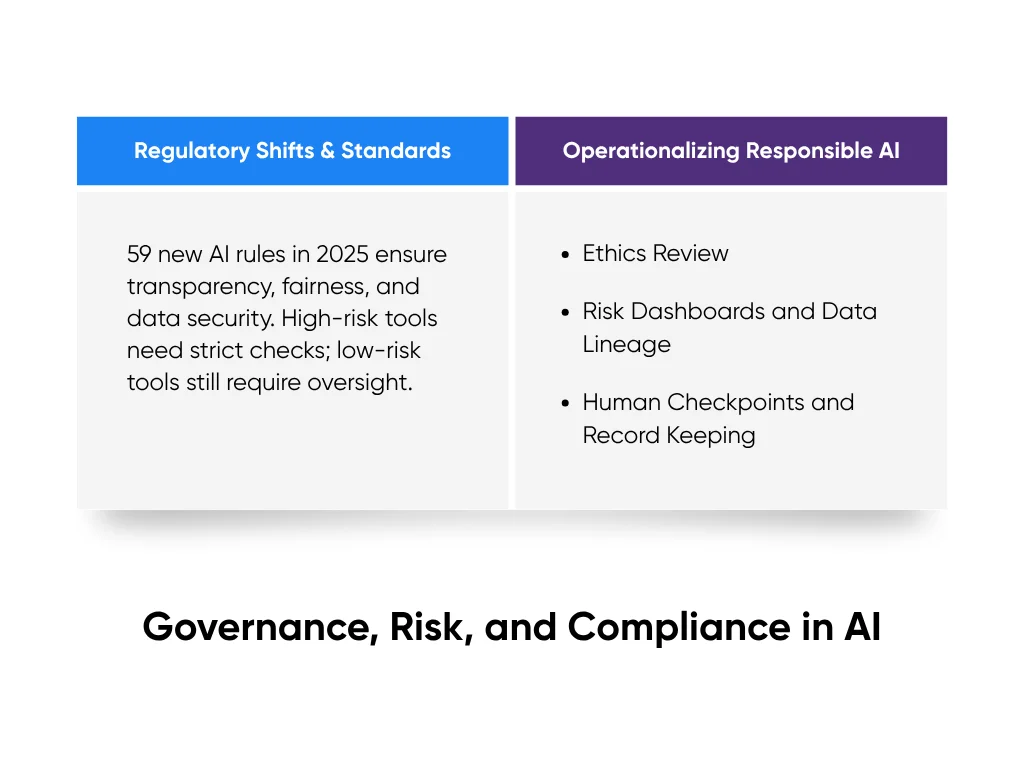

- 59 new AI rules were added in 2025, focusing on transparency, safety, and recordkeeping.

- The AI workforce has passed 944,000 people, increasing competition for deeper skills in MLOps, data, and evaluation.

What the Latest Generative AI Stats Reveal About Enterprise Readiness

Generative AI stats show strong adoption, but many companies still lack true enterprise readiness. Early wins are common, yet gaps in data, security, skills, and scaling keep AI from running safely in production. Leaders are now working to move from quick pilots to stable systems with clear owners and dependable performance.

Enterprise readiness means your company has what it needs to run AI smoothly. Your data stays clean, systems stay stable, rules protect the business, and teams know how to use the tools. You trust the results because nothing depends on luck.

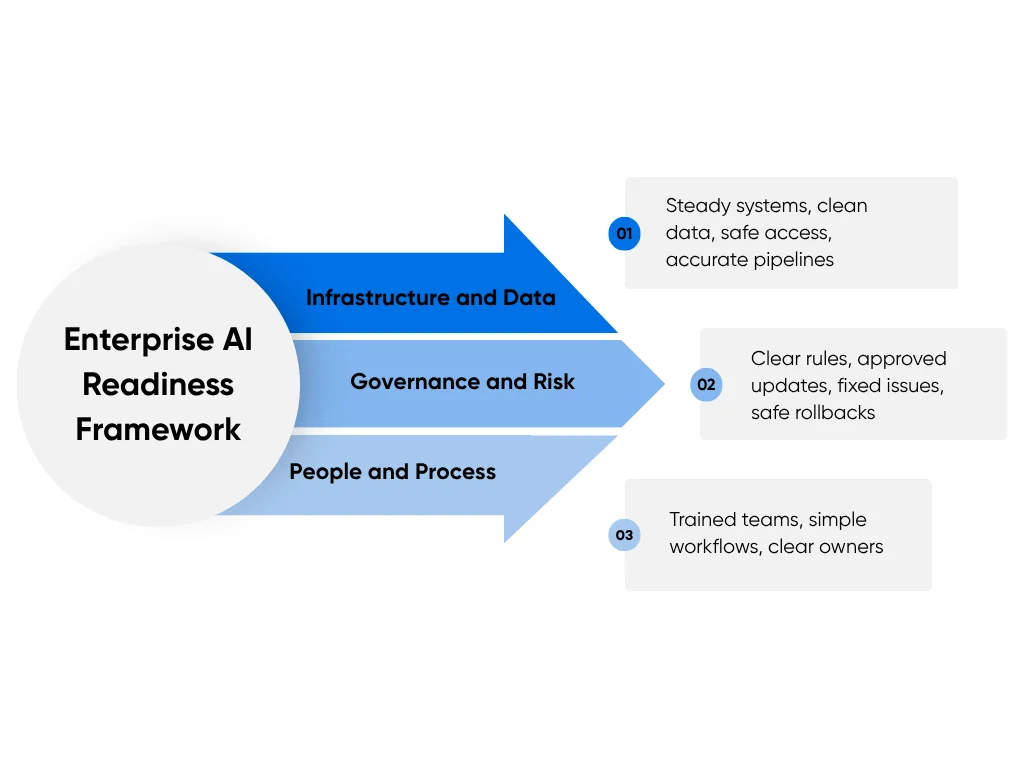

Readiness rests on three pillars:

- Infrastructure and Data: Steady systems, clean datasets, safe access, and pipelines that send accurate information to models.

- Governance and Risk: Clear rules for who runs models, how updates get approved, how issues get fixed, and how to roll back changes.

- People and Process: Trained teams, simple workflows, and clear owners who track results.

Recent generative AI survey results show strong adoption but weak maturity. Many companies run pilots, yet few meet basic production needs like monitoring, observability, or rollback plans. A 2025 state of AI study found this pattern across a full range of regions, from North America and Europe to Latin America, the Middle East, China, and India.

The gap is easy to spot. One team builds a chatbot, another builds a similar one, and neither connects to your CRM. That leads to repeated work, unclear data flow, and shadow AI. Without shared systems, measuring ROI becomes guesswork.

Readiness also varies by industry. A hospital group needs strict audit trails to protect patient data, while a SaaS company focuses on fast fixes and steady uptime.

Aloa supports this work by mapping each use case to real systems, setting clear owners, and tracking ROI from the start. This keeps progress steady instead of scattered.

Let's look at seven generative AI stats to see how far enterprises have come and where the gaps still sit, giving you clear signals for your next steps.

88% of Enterprises Now Use AI, But Few Scale Beyond Pilots

Many companies fall into what we call pilot purgatory. This happens when teams run small AI tests, see a few early wins, then stop short of real adoption. Tools work inside one group, but nothing reaches the systems your business depends on.

Pilots are usually easy to start. Problems arise when you try to scale them across multiple teams: outdated data, slow approvals, and weak training start to add up and raise costs.

Barriers to Scale

Here are the common blockers:

- Fragmented Data and Legacy Systems: When data is spread across different tools, AI outputs are siloed. A model might help one team, but it will not support a full workflow across sales, support, or clinical systems. This can lead to delays and extra work.

- Unclear Accountability: When no one owns how a model works, teams wait on each other (security waits on product, product waits on data), and deployment dates keep slipping.

- Limited Training and Weak Change Management: When teams never learn how to use AI well, adoption suffers. You see low usage, poor output quality, and extra rework to clean up mistakes.

These blockers can affect progress in any industry. But here is the good news: “pilot purgatory” is fixable.

Pathways to Scale

Here's a simple playbook leaders can use to move from pilots to production:

- Start with one workflow and clear KPIs: Pick a workflow with real business value. Set simple KPIs like cycle time, cost-to-serve, and error rate. Review progress every 90 days and adjust based on what the numbers show.

- Set cross-department governance with a clear RACI: Bring in security, legal, data, and operations. Define who owns decisions, who approves changes, and who supports the work. Clear sign-off rules speed up deployment and reduce confusion.

- Learn from companies that scale faster: High performers prepare early. They clean data before pilots, assign strong product owners, and set guardrails so teams know what is safe and allowed. This keeps projects moving without constant roadblocks.
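A RACI is easy to write down and easy to get wrong. As a rough illustration, the Python sketch below checks a RACI table against the common convention that every decision has exactly one Accountable owner and at least one Responsible team. The decisions and team names are hypothetical placeholders, not a recommended structure.

```python
from typing import Dict, List

# Hypothetical RACI table: decision -> {team: role}.
# Roles: "R" Responsible, "A" Accountable, "C" Consulted, "I" Informed.
RACI: Dict[str, Dict[str, str]] = {
    "approve model update": {"data": "R", "operations": "A", "security": "C", "legal": "I"},
    "roll back a release": {"operations": "A", "data": "R", "security": "I"},
    "approve new data source": {"data": "R", "legal": "A", "security": "A"},
}


def raci_problems(matrix: Dict[str, Dict[str, str]]) -> List[str]:
    """Return a list of problems; an empty list means every decision is clearly owned."""
    problems = []
    for decision, roles in matrix.items():
        accountable = [team for team, role in roles.items() if role == "A"]
        if len(accountable) != 1:
            problems.append(f"{decision}: needs exactly one 'A', found {len(accountable)}")
        if "R" not in roles.values():
            problems.append(f"{decision}: no team is 'R'")
    return problems


print(raci_problems(RACI))
# ["approve new data source: needs exactly one 'A', found 2"]
```

Here the check flags the data-source decision, where legal and security both claim final sign-off. That kind of overlap is exactly what stalls approvals.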

These steps might look small, but they push real change once your teams start using them every day. The catch is that the details matter. You need someone who knows how to set things up so they stay stable as you scale. If you want help from a partner who handles this work across industries, our AI consulting service is a good place to start.

Generative AI Becomes a Daily Executive Tool

Leaders now use generative AI in daily work across planning, analytics, and communication. Reports show that about 82% of executives use AI each week, and around 47% use it each day. This steady use helps leaders make decisions faster, share updates sooner, and spot problems earlier. It also builds a culture where AI feels normal instead of experimental.

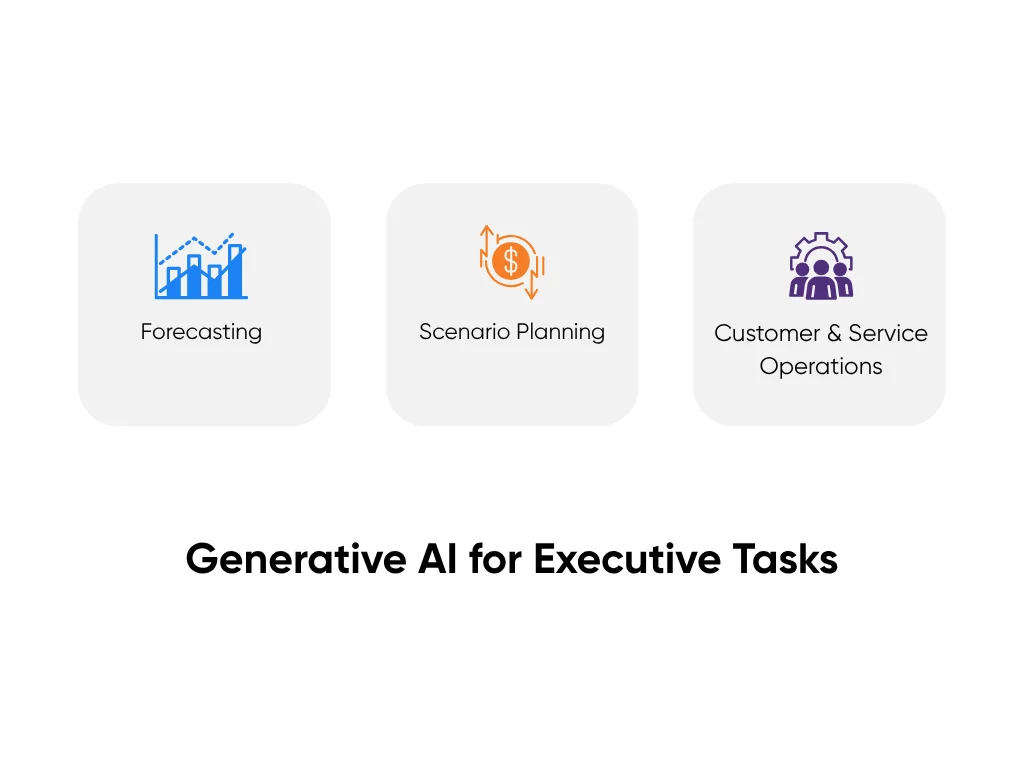

Executives use AI for tasks like:

- Forecasting: Generative AI reviews past numbers and points out early shifts in sales, patient visits, insurance claims, or ticket volume. Leaders get quick alerts when demand rises or when work slows down. This helps them plan staffing, supplies, or budgets without waiting for long reports.

- Scenario Planning: Leaders ask simple “what happens if” questions. AI lays out how a change in staffing, pricing, or policy could affect results. This gives them clear options within minutes. They can compare the paths side by side instead of waiting days for analysis.

- Customer and Service Operations: AI drafts customer service replies, summarizes long case notes, and flags recurring issues across customers or patients. Teams clear backlogs faster, reduce wait times, and keep response quality steady even during busy periods.

These tasks bring real benefits. Leaders show up to meetings more prepared. Teams save time they used to spend digging for data. Decisions become clearer because important information is already sorted and explained.

Daily use needs a few guardrails to stay reliable. To reduce biased or off-limits answers, use clear prompts and policies that spell out what the model can access and what it should avoid. To keep accuracy high, check outputs before sharing them and log each AI action so you can trace mistakes quickly.
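Two of these guardrails, a data-access policy and an action log, can be sketched in a few lines. The Python example below is a minimal illustration under assumed names: the source list and the `check_request` helper are hypothetical, and a real setup would write to durable storage rather than an in-memory list.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List

# Hypothetical allowlist: the only sources an executive assistant model may read.
# Anything not listed (HR files, patient records, ...) is denied by default.
ALLOWED_SOURCES = {"sales_dashboard", "support_tickets", "ops_reports"}


@dataclass
class AuditLog:
    entries: List[str] = field(default_factory=list)

    def record(self, user: str, action: str, **detail) -> None:
        # One JSON line per AI action, timestamped in UTC, so mistakes can be traced.
        entry = {"ts": datetime.now(timezone.utc).isoformat(), "user": user, "action": action}
        entry.update(detail)
        self.entries.append(json.dumps(entry, sort_keys=True))


def check_request(user: str, sources: List[str], log: AuditLog) -> bool:
    """Allow a model request only if every requested source is on the allowlist."""
    denied = [s for s in sources if s not in ALLOWED_SOURCES]
    allowed = not denied
    log.record(user, "request_allowed" if allowed else "request_denied",
               sources=sources, denied=denied)
    return allowed


log = AuditLog()
print(check_request("cfo", ["sales_dashboard"], log))                # True
print(check_request("cfo", ["sales_dashboard", "hr_records"], log))  # False
```

Denying by default keeps the policy short: you list what the model may touch instead of trying to list everything it should avoid.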

Code Generation Leads as the Fastest-Growing Enterprise Use Case

Code generation is now the fastest-growing use of generative AI. It helps developers write code, fix bugs, and create tests from plain-language instructions. This cuts the time they spend on repetitive tasks and gives them more room to focus on harder problems. Big tech companies now rely on code generation more than ever. Microsoft, for example, reports that 20-30% of its code is now written by AI, which shows how much teams lean on this boost in speed.

Productivity Gains and Adoption Trends

Here is what companies see when they add code generation to daily software engineering work:

- Faster Release Cycles: AI writes starter code, suggests fixes, and creates tests. This reduces wait time between builds and shortens development sprints.

- Fewer Defects: AI-generated tests fill coverage gaps and catch common mistakes early. This lowers the number of bugs that reach staging or production.

- More Focus on Complex Work: Developers spend less time on boilerplate code and more time on design, reviews, and performance improvements.

Most teams begin with general tools like GitHub Copilot for day-to-day tasks. Then they add custom copilots trained on their own internal code. This helps the AI understand their naming rules, in-house libraries, and workflow steps. Companies use this mix to get broad help on common tasks and deeper support for their own systems.

These adoption patterns match what we see across industries. A finance team might use AI to explain old functions in a large codebase. A healthcare team might use it to write tests for clinical data pipelines. Both teams save time, reduce errors, and keep work moving with simple prompts.

Balancing Speed, Security, and Compliance

Code generation speeds up development, but teams need the right controls to keep that speed safe. These steps help protect security and quality:

- IP and License Checks: Every AI-generated snippet needs to be safe to use. IP scans flag code that closely matches existing third-party code. License checks make sure no snippet introduces restricted or conflicting licenses. Keeping a record of these checks inside the pipeline protects your legal position.

- Secure Model Routing and Data Minimization: Sensitive code, like payment logic or clinical workflows, stays inside internal models. Non-sensitive prompts can use outside tools. This reduces security risk and keeps private data safe.

- Phased Rollout with Baselines: Some teams see slow progress at first because developers learn how to write good prompts and review output. A phased rollout helps. Start with simple tasks, measure current cycle time and error rates, and expand once the team feels steady.
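Secure routing can start as a simple rule based on which files a prompt touches. The Python sketch below assumes hypothetical path patterns for sensitive code and two destinations, "internal" and "external"; real routing would likely also look at repository metadata and the prompt text itself.

```python
from fnmatch import fnmatchcase
from typing import List

# Hypothetical patterns for code that must stay on internal models.
SENSITIVE_PATTERNS = ["*/payments/*", "*/clinical/*", "*secret*"]


def is_sensitive(path: str) -> bool:
    # Normalize to a leading slash so "payments/x.py" and "src/payments/x.py" both match.
    normalized = "/" + path.lstrip("/")
    return any(fnmatchcase(normalized, pattern) for pattern in SENSITIVE_PATTERNS)


def route_prompt(context_files: List[str]) -> str:
    """Send the prompt to an internal model if any file in its context is sensitive."""
    return "internal" if any(is_sensitive(p) for p in context_files) else "external"


print(route_prompt(["src/ui/button.tsx", "docs/setup.md"]))       # external
print(route_prompt(["src/ui/button.tsx", "payments/refund.py"]))  # internal
```

The same `is_sensitive` check could also support data minimization, for example by dropping sensitive files from the context instead of rerouting the whole prompt.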

Aloa includes these controls in our rapid prototyping work. We connect AI code tools to safe environments, add tracking, and set simple review steps. This lets your team enjoy faster development without putting security or compliance at risk.

ROI Measurement Becomes Non-Negotiable for AI Leaders

Recent reports show that about 72% of leaders track ROI for their AI projects. Companies want straight numbers that show where AI saves time, lowers costs, or helps bring in more revenue. They also want this tracking inside the tools where the work happens, like ERP, CRM, or the data warehouse, so results stay tied to real tasks and not stand-alone reports.

Most teams track ROI using three groups of KPIs:

- Productivity KPIs: Teams track cycle time, which is the time it takes to finish a task. They also track hours saved when AI handles steps that used to take staff time. These numbers help leaders see if teams get time back.

- Cost KPIs: These show whether AI reduces waste. Teams look at the cost of running models, the time spent fixing mistakes, and the effort needed for support. Lower numbers mean AI is helping control costs.

- Revenue KPIs: Teams track conversion (how many leads become customers), expansion (how much current customers grow), and retention (how many stay). These numbers show whether AI helps the business earn more from the work it already does.
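To roll the three KPI groups into one number, many teams use the standard ROI formula, (benefit - cost) / cost. The Python sketch below shows one way to do it. The dollar values are made up for illustration, and converting hours saved into dollars with a loaded hourly cost is an assumption you would replace with your finance team's own method.

```python
from dataclasses import dataclass


@dataclass
class PilotResults:
    hours_saved: float     # productivity KPI: staff hours the AI handled
    hourly_cost: float     # assumption: fully loaded cost of one staff hour
    cost_reduction: float  # cost KPI: less rework, support effort, etc.
    added_margin: float    # revenue KPI: margin from better conversion, expansion, retention
    total_ai_cost: float   # models plus data work, testing, monitoring, and training


def roi(r: PilotResults) -> float:
    """Standard ROI: (benefit - cost) / cost."""
    benefit = r.hours_saved * r.hourly_cost + r.cost_reduction + r.added_margin
    return (benefit - r.total_ai_cost) / r.total_ai_cost


# Illustrative numbers only.
pilot = PilotResults(hours_saved=1200, hourly_cost=60, cost_reduction=15_000,
                     added_margin=13_000, total_ai_cost=80_000)
print(f"ROI: {roi(pilot):.0%}")  # ROI: 25%
```

Counting data work, monitoring, and training in `total_ai_cost` keeps the figure honest; using only model fees would overstate the return.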

Before launching an AI project, set simple “before and after” measurements. These baselines show how the workflow performs today. After AI goes live, compare the new numbers to the baseline to see the real impact. For work that swings a lot from month to month, some teams also use a control group: a team that keeps the old process during the same period, so leaders can tell whether improvements came from AI or from natural swings.
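The control-group idea is often formalized as a difference-in-differences comparison: subtract the control group's change from the pilot group's change. Here is a minimal Python sketch with hypothetical cycle-time numbers (hours per ticket). It ignores statistical significance, which you would want to check before acting on a small sample.

```python
from statistics import mean
from typing import List


def diff_in_diff(pilot_before: List[float], pilot_after: List[float],
                 control_before: List[float], control_after: List[float]) -> float:
    """Estimated AI effect: change in the pilot group minus change in the control group."""
    pilot_change = mean(pilot_after) - mean(pilot_before)
    control_change = mean(control_after) - mean(control_before)
    return pilot_change - control_change


# Hypothetical cycle times in hours per ticket.
effect = diff_in_diff(
    pilot_before=[10.0, 12.0, 11.0], pilot_after=[7.0, 8.0, 9.0],
    control_before=[10.0, 11.0, 12.0], control_after=[10.0, 9.0, 11.0],
)
print(effect)  # -2.0
```

A plain before/after view would credit AI with saving 3 hours per ticket. The control group also improved by 1 hour on its own, so the estimated AI effect is closer to 2 hours.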

The Cost of GenAI Is Dropping, Fueling Broader Experimentation

The cost to run generative AI has dropped more than 280-fold since 2022. This steep drop makes it easier for companies to test AI without spending much. Teams can try small pilots, build simple workflows, and explore ideas without waiting for a large budget.

Lower costs also make domain-specific generative AI models more common. These are smaller models built for one area, like claims review, patient summaries, or supply chain checks. Cheaper inference lets more teams experiment, but you still need good rules and careful testing to stay safe.

The Economics of Democratized AI

Lower costs change how teams use AI inside a company:

- Lower costs make testing easier: Teams can try new ideas with small, fast models that do not need special hardware. This lets more departments test AI instead of waiting for approval from central IT.

- More pilots show up across the company: When testing gets cheaper, teams launch more pilots. This helps companies find good ideas faster. It also means results can get messy, so leaders need better ways to compare different models.

- Shared evaluation sets keep results fair: Teams often test models with different data, which makes the results hard to compare. Using the same evaluation set keeps the tests fair and helps leaders choose the best model for each job.
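In practice, a shared evaluation set is a fixed list of inputs with expected answers that every candidate model is scored against. The Python sketch below uses toy claims-triage examples and two stand-in "models" (plain functions). Both are hypothetical, and real evaluations usually need far more examples and task-specific metrics beyond exact-match accuracy.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]

# One shared evaluation set: (input, expected label). Toy examples for illustration.
EVAL_SET: List[Tuple[str, str]] = [
    ("Water damage, kitchen, photos attached", "approve"),
    ("Duplicate claim number, same date", "reject"),
    ("Missing policy number", "needs_info"),
    ("Windshield crack, receipt attached", "approve"),
]


def accuracy(model: Model, eval_set: List[Tuple[str, str]]) -> float:
    """Share of examples where the model's answer matches the expected label exactly."""
    correct = sum(model(text) == expected for text, expected in eval_set)
    return correct / len(eval_set)


def keyword_model(text: str) -> str:  # stand-in for a small, focused model
    if "Duplicate" in text:
        return "reject"
    if "Missing" in text:
        return "needs_info"
    return "approve"


def always_approve(text: str) -> str:  # stand-in for a weak baseline
    return "approve"


candidates: Dict[str, Model] = {"keyword_model": keyword_model, "always_approve": always_approve}
scores = {name: accuracy(fn, EVAL_SET) for name, fn in candidates.items()}
best = max(scores, key=scores.get)
print(scores)  # {'keyword_model': 1.0, 'always_approve': 0.5}
print(best)    # keyword_model
```

Because every candidate sees the same inputs, the scores are directly comparable, which is the point of sharing the set across teams.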

These changes let more people take part in AI work. Teams can test ideas, share results, and find AI use cases that solve real problems.

Designing for Sustainable Scale

Lower costs help teams start, but smart planning helps them grow:

- Start with small models and upgrade later: Small models are cheaper and usually strong enough for focused tasks. Teams can plan upgrades only when accuracy or speed drops below simple targets. This avoids spending too much too early.

- Use observability to keep systems steady: This means watching things like response time, accuracy, and error levels. Latency shows how long the model takes to answer. Drift shows when answers start getting less accurate. Error budgets set limits on how many mistakes are allowed before someone checks the system. These steps help leaders catch problems before they spread.

- Remember that inference cost is not the whole cost: Running a model is only one expense. Actual cost also comes from data work, testing, security checks, monitoring, and helping teams learn new processes. Leaders need to count all of these to understand the full cost.
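The upgrade targets and observability signals above can be combined into one periodic health check. The Python sketch below is a minimal version under assumed thresholds: a 2-second p95 latency target, 90% accuracy, a 2% error budget, and a 3-point accuracy drop as the drift signal. Treating drift as a drop in accuracy is a simplification; teams often also watch for shifts in the input data itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Targets:
    p95_latency_ms: float = 2000.0  # assumed speed target
    min_accuracy: float = 0.90      # assumed accuracy target on the shared eval set
    max_error_rate: float = 0.02    # error budget: share of requests allowed to fail
    drift_tolerance: float = 0.03   # accuracy drop that counts as drift


def p95(values: List[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def health_report(latencies_ms: List[float], failed: int, total: int,
                  weekly_accuracy: List[float],
                  targets: Optional[Targets] = None) -> Dict[str, bool]:
    t = targets or Targets()
    latency_ok = p95(latencies_ms) <= t.p95_latency_ms
    accuracy_ok = weekly_accuracy[-1] >= t.min_accuracy
    return {
        "latency_ok": latency_ok,
        # Budget is spent once failures exceed the allowed share of requests.
        "error_budget_exhausted": failed > t.max_error_rate * total,
        # Simplified drift signal: accuracy fell too far since the first week.
        "drift_detected": weekly_accuracy[0] - weekly_accuracy[-1] > t.drift_tolerance,
        # Upgrade only when the small model misses a speed or accuracy target.
        "consider_upgrade": not (latency_ok and accuracy_ok),
    }


latencies = [800.0] * 19 + [5000.0]  # one slow outlier does not break the p95
print(health_report(latencies, failed=30, total=1000, weekly_accuracy=[0.94, 0.93, 0.89]))
# {'latency_ok': True, 'error_budget_exhausted': True, 'drift_detected': True, 'consider_upgrade': True}
```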

Aloa builds AI systems with these points in mind. We design setups that balance cost, speed, and long-term maintenance so your team can grow AI use without losing control of quality or spending.

Governance, Risk, and Compliance Take Center Stage

Responsible AI is now a basic part of running a safe business. New rules push companies to protect customers, keep data secure, and avoid harmful mistakes. Strong governance shapes how AI tools are built, tested, and used. Without it, companies risk outages, fines, or losing customer trust.

Regulatory Shifts and Global Standards

AI rules are increasing quickly. Recent reports count 59 new AI-related regulations from federal agencies in 2025, and regulatory compliance guidance keeps getting stricter. Most focus on three clear goals:

- Transparency so people know when AI is being used and how it makes decisions.

- Fairness so AI does not favor one group over another.

- Data security so sensitive information stays protected.

These rules affect everyday tools. A medical or financial chatbot is high risk because a wrong answer can harm someone. It needs strict checks, logs, and clear ownership. A simple internal meeting-note summarizer is lower risk, but it still needs oversight because it handles private company information.

Matching the level of control to the level of risk keeps teams safe without slowing down all work.
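Matching control to risk can be written down as a small lookup table, which makes gaps easy to spot before launch. The tiers and control names in this Python sketch are hypothetical examples drawn from the two cases above, not a regulatory checklist.

```python
from typing import Dict, List, Set

# Every AI tool, even a low-risk one, handles company data and needs these.
LOW_RISK: Set[str] = {"named_owner", "access_policy"}
# High-risk tools add strict checks and logs on top of the low-risk baseline.
HIGH_RISK: Set[str] = LOW_RISK | {"pre_launch_review", "prompt_output_logs", "human_review"}

CONTROLS_BY_TIER: Dict[str, Set[str]] = {"low": LOW_RISK, "high": HIGH_RISK}


def missing_controls(tier: str, in_place: Set[str]) -> List[str]:
    """List required controls a tool does not have yet (sorted for stable output)."""
    return sorted(CONTROLS_BY_TIER[tier] - in_place)


# Internal meeting-note summarizer: low risk.
print(missing_controls("low", {"named_owner", "access_policy"}))  # []
# Medical or financial chatbot: high risk.
print(missing_controls("high", {"named_owner", "prompt_output_logs"}))
# ['access_policy', 'human_review', 'pre_launch_review']
```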

Operationalizing Responsible AI

Rules matter only when they show up in daily routines. Here’s how you make responsible AI part of normal work:

- AI Ethics Review: A small team reviews each new AI project before launch. They look at what the tool will do, who it affects, and what could go wrong. This catches unsafe ideas early and reduces rework later.

- Risk Dashboards and Data Lineage: Risk dashboards alert teams when a model’s accuracy drops, when results drift, or when the model pulls data it should not. Data lineage shows where the data came from, who changed it, and how it moved. These tools help teams trace mistakes, reduce inaccuracy, and pass audits.

- Human Checkpoints and Record Keeping: For high-impact decisions (like patient instructions, credit approvals, or claim outcomes), a human reviews the output before sending it. Teams also save prompts and outputs so they can investigate errors and fix the patterns behind them.
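A human checkpoint with record keeping can be as simple as a gate that holds high-impact outputs for review and saves every prompt and output. The Python sketch below is a minimal illustration: the decision types and the `ReviewGate` class are hypothetical, and a production version would persist records to an append-only store rather than a list.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical decision types that always need a human before anything is sent.
HIGH_IMPACT = {"patient_instructions", "credit_approval", "claim_outcome"}


@dataclass
class ReviewGate:
    records: List[str] = field(default_factory=list)        # saved prompts and outputs
    review_queue: List[dict] = field(default_factory=list)  # outputs waiting for a human

    def submit(self, decision_type: str, prompt: str, output: str) -> Optional[str]:
        """Return the output if it can go out now, or None if it is held for review."""
        needs_review = decision_type in HIGH_IMPACT
        record = {"type": decision_type, "prompt": prompt, "output": output,
                  "status": "pending_review" if needs_review else "sent"}
        self.records.append(json.dumps(record))
        if needs_review:
            self.review_queue.append(record)
            return None
        return output

    def approve(self, index: int, reviewer: str) -> str:
        """A human signs off; the approval is recorded alongside the original output."""
        item = self.review_queue.pop(index)
        self.records.append(json.dumps({**item, "status": "approved", "reviewer": reviewer}))
        return item["output"]


gate = ReviewGate()
print(gate.submit("meeting_summary", "Summarize today's notes", "Three action items ..."))
print(gate.submit("credit_approval", "Assess applicant 42", "Approve $5,000 limit"))  # None
print(gate.approve(0, reviewer="j.doe"))  # Approve $5,000 limit
```

Because every step lands in `records`, an investigator can later see what the model proposed, who approved it, and when patterns of errors started.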

Rules vary by region, but the basics stay the same everywhere: know how the system works, know who owns it, and keep humans involved in decisions that matter.

Strong governance protects people, reduces risk, and helps companies scale AI safely. When systems are traceable, clearly owned, and checked by humans, teams can roll out new tools faster without worrying about surprises later.

The Enterprise AI Workforce Is Exploding

The generative AI workforce has now grown past 944,000 people worldwide. There are more skilled workers than ever, but hiring is still slow and competitive. Many candidates know the basics, but fewer have the deeper skills needed to work with real company systems, messy data, and important workflows. Leaders quickly learn that hiring for a job title doesn’t guarantee the skills needed for day-to-day AI work.

The roles that make the biggest impact focus on practical, hands-on work:

- ML Engineers: They build and adjust models, prepare training data, and keep AI systems running. Their work makes sure AI stays accurate, stable, and fast enough for everyday tasks.

- AI Ops Specialists: They watch model performance, handle updates, and fix problems when something breaks. They keep AI steady so teams don’t lose time to errors or outages.

- Prompt and Evaluation Engineers: They write clear prompts, test outputs, and check quality. They help reduce mistakes and keep AI answers consistent and safe.

These skills matter because enterprise AI depends on clean data, safe rollouts, and results that teams can trust. Job counts can be confusing because some companies rename old roles as “AI jobs.” It works better to hire and train based on the skills you actually need, not the titles people use.

To grow these skills while you hire, consider building talent from the inside. Invest in:

- Internal academies that teach AI skills using current systems

- Pairing programs where new staff learn next to experienced teammates

- Learning paths tied to live projects so training connects to actual work

These steps help teams build strong, reliable AI skills and understand how their own data and systems work. At Aloa, we work with your teams on real projects and give them simple patterns they can keep using long after the project is done.

Key Takeaways

Generative AI is everywhere now, but the teams pulling ahead do a few things differently. They prepare their systems, set clear rules, and track outcomes instead of relying on intuition. They know how to move beyond pilot mode.

A simple 90-day plan gives you a clean starting point:

- Pick one workflow that actually matters to your operations.

- Capture today’s time, cost, and error numbers.

- Build a small pilot with a clear owner and basic monitoring.

- Add lightweight checks with security, data, and legal.

Use the seven generative AI stats in this guide as a maturity check. Set targets for scale, safety, and ROI, and review them often. Small, steady wins stack up faster than big, unfocused pushes.

Aloa helps you put this into practice by wiring AI into the systems you already use, adding guardrails that keep things safe, and tracking performance so you know what’s working. If you want a smoother path from pilot to production, reach out to us.

FAQs About Generative AI Stats

What are the top enterprise use cases for generative AI?

The most helpful use cases take slow, repetitive work and make it faster. Code generation helps developers write and fix code quicker. Support teams use AI copilots to draft replies, summarize long cases, and pull up past answers. Many companies also use AI to review claims, contracts, or clinical notes, with a human doing the final check. These uses work well because AI gives teams a solid starting point and people finish the job.

How do I measure ROI from GenAI initiatives?

Start by measuring how the workflow performs today. Build a small pilot, compare the results to your baseline, and grow only when the numbers improve. Most teams track three types of metrics: how much time they save, how much cost they reduce, and how much revenue improves. These numbers make the impact clear.

What industries are leading in generative AI adoption?

Tech and SaaS companies move quickly and continue to lead adoption because their data tends to be clean and their systems update often. Finance is close behind, since financial firms already follow strict rules around data and risk. Healthcare is growing fast too, especially for documentation tasks, though privacy rules slow some projects. These industries move faster because they take data quality and governance seriously.

How is regulation expected to evolve next year?

Rules are shifting toward clearer transparency, better recordkeeping, and risk checks before launching new tools. Companies will need to show when AI was used, keep logs of prompts and outputs, and review possible impacts. Even with rules still forming, having clear owners, human review for important decisions, and solid logs will help you stay prepared.

How can enterprises stay AI-ready without overspending?

Start with one workflow that matters and use small models before moving to bigger ones. Stick with the data platforms you already have and avoid building new systems too early. Track results from the start so you know what is worth scaling. This keeps costs low while helping your teams build steady progress.